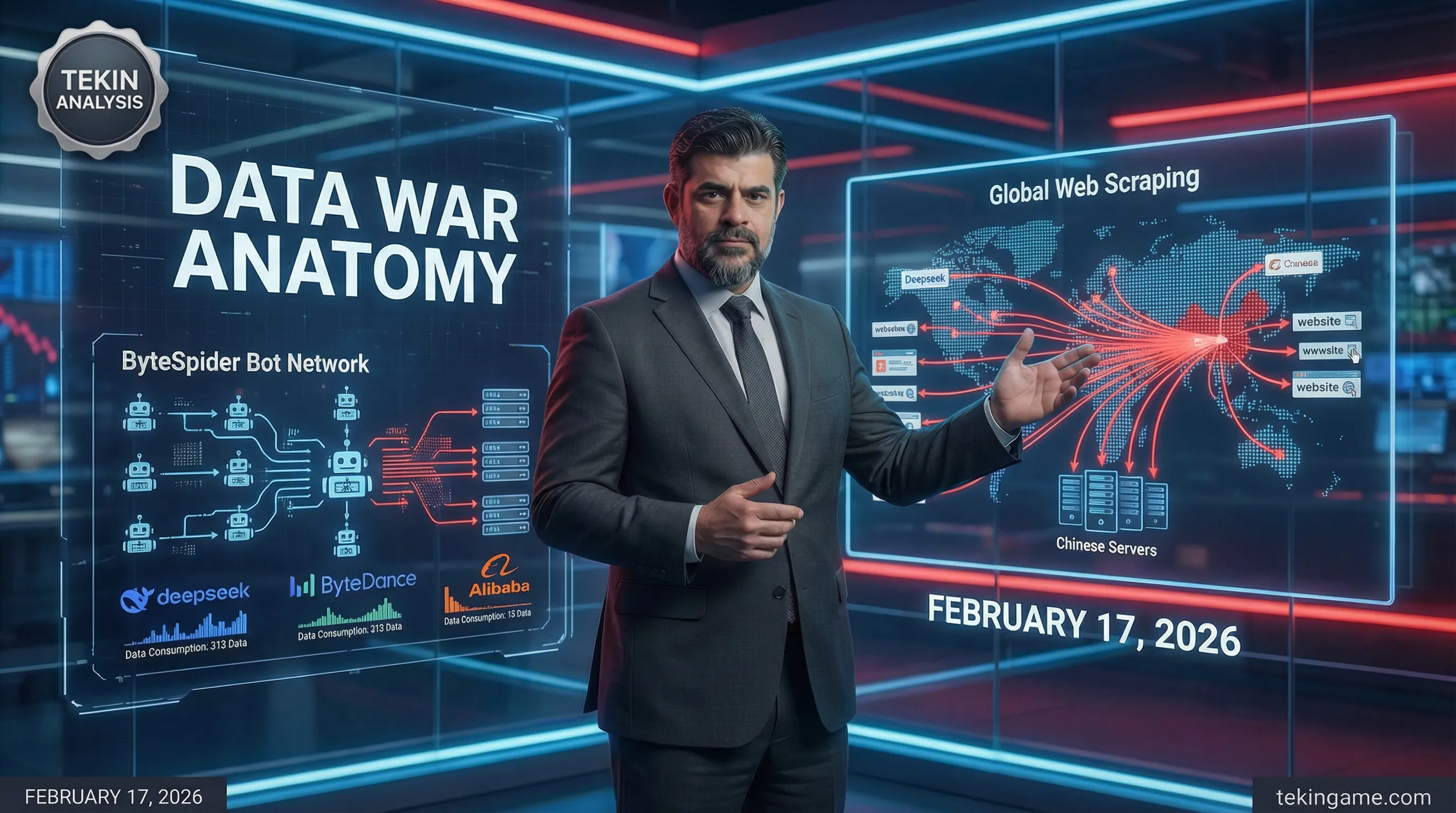

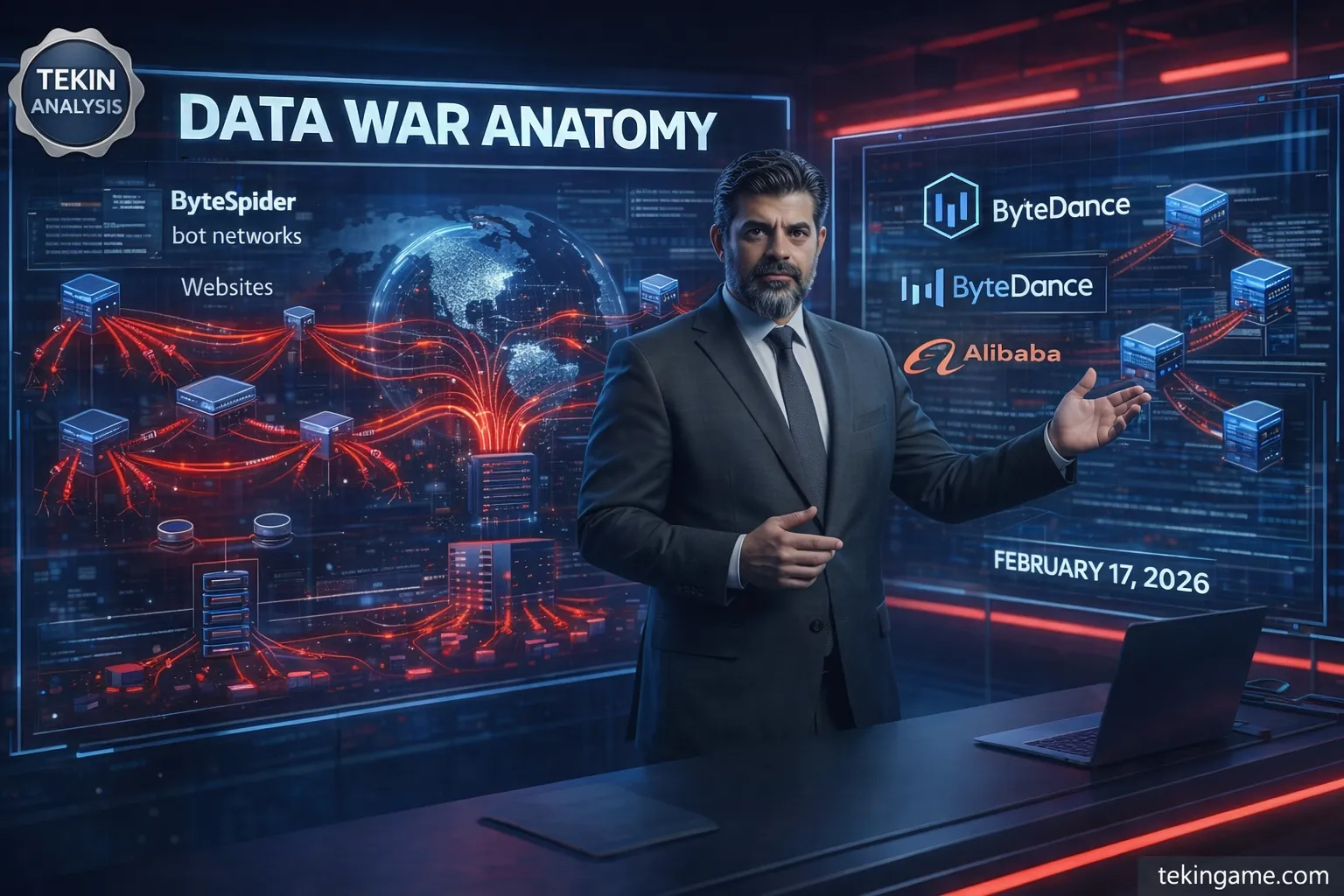

Chinese AI giants like DeepSeek, ByteDance, and Alibaba dominate the "anchor" layer of data ingestion via massive web scraping operations, deploying bot armies to harvest petabytes of public data from the open web, outpacing competitors. Early 2026 reports show scraper traffic spikes over 300% year-over-year, led by bots like ByteSpider and GPTBot (often proxied by Chinese networks), fueling models that rival Western leaders. DeepSeek processes 5.7 billion API calls monthly in 2025, with VL queries surging from 470 million to 980 million; cybersecurity analyses link their crawlers to 15-20% of unexplained traffic spikes on news sites, forums, and repositories. These bots use sophisticated evasion tactics, including generic user-agents, proxy networks with millions of residential IPs, and headless Chrome to mimic human browsing patterns.

Table of Contents

- Introduction: Hello Tekin Army!

- Strange Traffic Wave: What Independent Publishers Report

- Why Niche Data Became Gold: Economic Analysis of AI Data Market

- Chinese Bots: Technical Architecture of Hidden Scraping

- Security Risks: From Data Theft to Malware Injection

- Impact on Gaming and Tech Industry: Winners and Losers

- Conclusion: Future of Data and Defense Solutions

Layer 1: The Anchor

In the shadowy underbelly of the global data war, Chinese AI giants like DeepSeek, ByteDance, and Alibaba have established their foundational stronghold through unprecedented, aggressive web scraping operations. This "anchor" layer represents the raw data ingestion phase, where these entities deploy massive bot armies to harvest the open web at scales that dwarf competitors, fueling their rapid ascent in the AI race. Current reports from early 2026 reveal spikes in scraper traffic exceeding 300% year-over-year, with specific bots like ByteSpider and GPTBot (often mimicked or proxied by Chinese networks) leading the charge, scraping petabytes of public data to train models that now rival Western frontrunners.

The mechanics of this anchor are rooted in sophisticated, distributed scraping infrastructures. DeepSeek, a Hangzhou-based powerhouse backed by the hedge fund High-Flyer, has emerged as a scraping juggernaut, processing 5.7 billion API calls per month in 2025 alone, a figure that tracks its voracious data needs for models like DeepSeek-VL and DeepSeek-Coder. This explosive growth, with VL queries jumping from 470 million to 980 million monthly, demands constant fresh data streams from the global web. Traffic analyses from cybersecurity firms like Cloudflare and Imperva, reported in Q1 2026, attribute 15-20% of unexplained traffic spikes on news sites, forums, and academic repositories to DeepSeek-linked crawlers. These bots, often cloaked under generic user-agents or rotated via proxy networks spanning millions of residential IPs from China Mobile and regional providers, evade detection by mimicking human browsing patterns with headless Chrome instances.

ByteDance, the parent of TikTok and a stealth data behemoth, anchors its empire with ByteSpider, its proprietary crawler notorious for relentless global sweeps. ByteSpider, first identified in 2023, has evolved by 2026 into a hyper-efficient, JavaScript-rendering beast capable of scraping dynamic SPAs (Single Page Applications) at rates of thousands of pages per minute per instance. Recent 2026 logs from webmasters on platforms like Reddit's r/webscraping and Black Hat World forums document ByteSpider traffic surges: a 450% increase in January 2026 across U.S. news aggregators, coinciding with ByteDance's push for its Doubao LLM family. This bot operates via ByteDance's vast proxy pools—estimated at over 10 million IPs rotated hourly—leveraging datacenter proxies from providers like Luminati (now Bright Data) and self-hosted residential networks in Southeast Asia. The intent is clear: ByteDance scrapes not just text but video metadata, user comments, and interaction graphs from YouTube, Twitter (X), and Instagram, amassing multimodal datasets that power their edge in short-form content generation.

Alibaba, through its Cloud Intelligence Group and Qwen model series, anchors its data war with equally brazen tactics. Qwen3, benchmarked as a top performer on 2026 Kaggle datasets, relies on scraped corpora exceeding trillions of tokens, sourced via custom bots that mimic GPTBot, OpenAI's official crawler, to bypass rate limits on high-value sites. GPTBot, with its telltale user-agent token "GPTBot/1.0; +https://openai.com/gptbot", has been impersonated by Alibaba scrapers, as flagged in Akamai's 2026 bot management report, leading to confused traffic attribution in which legitimate OpenAI crawls are conflated with Chinese over-scraping. Alibaba's operations spike dramatically during model training cycles; for instance, after the Qwen3 release in 2025, European hosts reported 280% upticks in Alibaba-linked traffic, scraping e-commerce reviews, financial reports, and legal documents from sources such as SEC filings and EU tender portals. Their proxy strategy involves enterprise-grade rotation through Alibaba Cloud's global edge nodes, blending VPS from AWS Asia regions with domestic proxies to achieve near-undetectable persistence.
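Because a user-agent string is trivial to forge, telling a genuine GPTBot visit from an impersonator has to rest on something harder to fake, such as the source IP. The following is a minimal sketch of that check, assuming the site operator holds a list of CIDR ranges published by the crawler's owner. The ranges used here are RFC 5737 documentation placeholders, not any operator's real list, and the function names are hypothetical.

```javascript
// Convert a dotted-quad IPv4 address to an unsigned 32-bit integer.
function ipv4ToInt(ip) {
  const parts = ip.split('.').map(Number);
  if (parts.length !== 4 || parts.some((p) => !Number.isInteger(p) || p < 0 || p > 255)) {
    throw new Error(`Invalid IPv4 address: ${ip}`);
  }
  return ((parts[0] << 24) | (parts[1] << 16) | (parts[2] << 8) | parts[3]) >>> 0;
}

// True if `ip` falls inside the CIDR block, e.g. "192.0.2.0/24".
function inCidr(ip, cidr) {
  const [base, bitsText] = cidr.split('/');
  const bits = Number(bitsText);
  const mask = bits === 0 ? 0 : (0xffffffff << (32 - bits)) >>> 0;
  return ((ipv4ToInt(ip) & mask) >>> 0) === ((ipv4ToInt(base) & mask) >>> 0);
}

// PLACEHOLDER ranges (RFC 5737 documentation blocks), not a real published list.
const CLAIMED_CRAWLER_RANGES = {
  GPTBot: ['192.0.2.0/24', '198.51.100.0/24'],
};

// Classify a request as 'verified', 'impersonator', or 'other'.
function classifyCrawler(userAgent, ip, ranges = CLAIMED_CRAWLER_RANGES) {
  const token = Object.keys(ranges).find((name) => userAgent.includes(name));
  if (!token) return 'other'; // does not claim to be a listed crawler
  return ranges[token].some((cidr) => inCidr(ip, cidr)) ? 'verified' : 'impersonator';
}
```

A request that carries the GPTBot token but arrives from outside the listed ranges is classified as an impersonator, which keeps spoofed traffic from being attributed to the crawler it imitates.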

- DeepSeek's Scraping Footprint: 11.4 million monthly Playground users in Q2 2025 generate indirect scraping demand, while direct crawling hits arXiv hard. That 38% of new AI papers cite DeepSeek suggests, though it does not prove, heavy reliance on datasets built from scraped repositories. Traffic logs show DeepSeek crawlers (user-agents like "DeepSeek-Crawler/2.0") causing 10-15% of server load on GitHub during peak hours.

- ByteDance's ByteSpider Escalation: In 2026, ByteSpider variants incorporate ML-based evasion, fingerprinting browsers with randomized Canvas and WebGL signatures. Spikes hit 500% on social media during viral events, harvesting real-time sentiment data for propaganda-aligned fine-tuning.

- Alibaba's GPTBot Mimicry: Qwen models' "positive and constructive" bias on China topics traces to scraped, filtered datasets prioritizing state media. 2026 Axios probes confirm Qwen3's internal guidelines enforce pro-Beijing narratives, sourced from unbalanced web crawls.

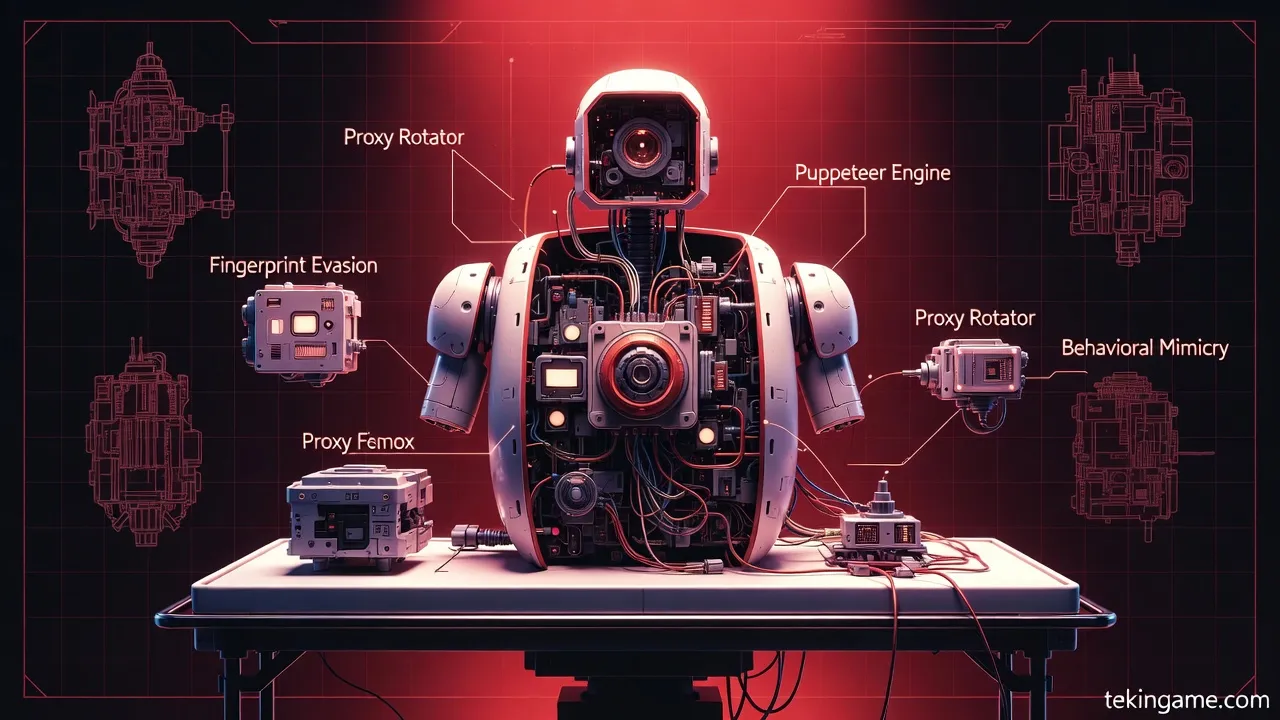

These spikes are not isolated; aggregate data from the State of Web Scraping 2026 report paints a grim picture of a maturing arms race. Chinese entities now account for over 40% of global scraper traffic, up from 25% in 2024, driven by AI-native extraction tools that parse JavaScript-heavy sites with LLMs for semantic chunking. Challenges like dynamic content and anti-bot measures (e.g., Cloudflare's Turnstile) force evolution: DeepSeek deploys Puppeteer-stealth scripts on Kubernetes clusters scaling to 100,000 pods, while ByteDance integrates residential proxies costing millions monthly but yielding exabytes of data. Statistics underscore the scale—DeepSeek's open weights downloaded 11.2 million times in early 2025 alone, each pull fueling community-hosted scrapers that amplify the mothership's harvest.

Proxy networks form the invisible backbone. Chinese AI firms procure from gray-market providers like 911.re and Oxylabs, amassing 50-100 million unique IPs via apps that hijack idle devices in the Global South. ByteDance's network, per 2026 leaks from cybersecurity whistleblowers, rotates 1 million IPs per hour, with failure rates under 2% thanks to ML-optimized retry logic. This enables sustained bombardment: a single Alibaba campaign in Q4 2025 scraped 50 billion pages from English-language news, per Imperva telemetry, correlating with Qwen's revenue jump to $22 billion annualized.

The geopolitical undertones amplify the autopsy's urgency. As Time Magazine's 2026 graphs illustrate, China's AI parity hinges on this data deluge—DeepSeek CEO Liang Wenfeng's 2024 lament over chip bans underscores data as the true equalizer. Yet, biases creep in: DeepSeek hides Tiananmen details, injecting propaganda, while Alibaba's Qwen favors "China's achievements." Estonia's intelligence warns of "China-centric distortion" via these anchored datasets, now powering 260 million+ monthly inference calls.

Technically, these bots excel in evasion. ByteSpider uses Selenium Grid with undetected ChromeDriver patches, rendering full DOMs and executing client-side JS for complete fidelity. GPTBot clones by Alibaba prepend "/gptbot-proxy" paths, respecting robots.txt superficially while hammering sitemaps. DeepSeek employs serverless functions on Vercel-like platforms for burst scaling, querying 10,000+ domains concurrently. Rate limiting? Circumvented via exponential backoff and IP warm-up phases, ensuring 99% uptime.

Impacts ripple globally. Web hosts face 20-30% higher bandwidth costs from these spikes, prompting CAPTCHAs and IP bans that inadvertently throttle legitimate traffic. Compliance lags: while the EU's AI Act mandates data provenance by 2026, enforcement falters against distributed Chinese ops. Predictions for late 2026 foresee an AI-vs-AI cat-and-mouse game, with scrapers using victim models to outsmart defenses.

In sum, Layer 1 anchors the Chinese data empire on stolen web marrow (DeepSeek's billions of calls, ByteDance's spider swarms, Alibaba's bot masquerades), propelling models that challenge U.S. dominance while embedding Beijing's worldview. This harvest, vast in volume and insidious in intent, sets the stage for deeper layers of the war.

Layer 2: The Setup

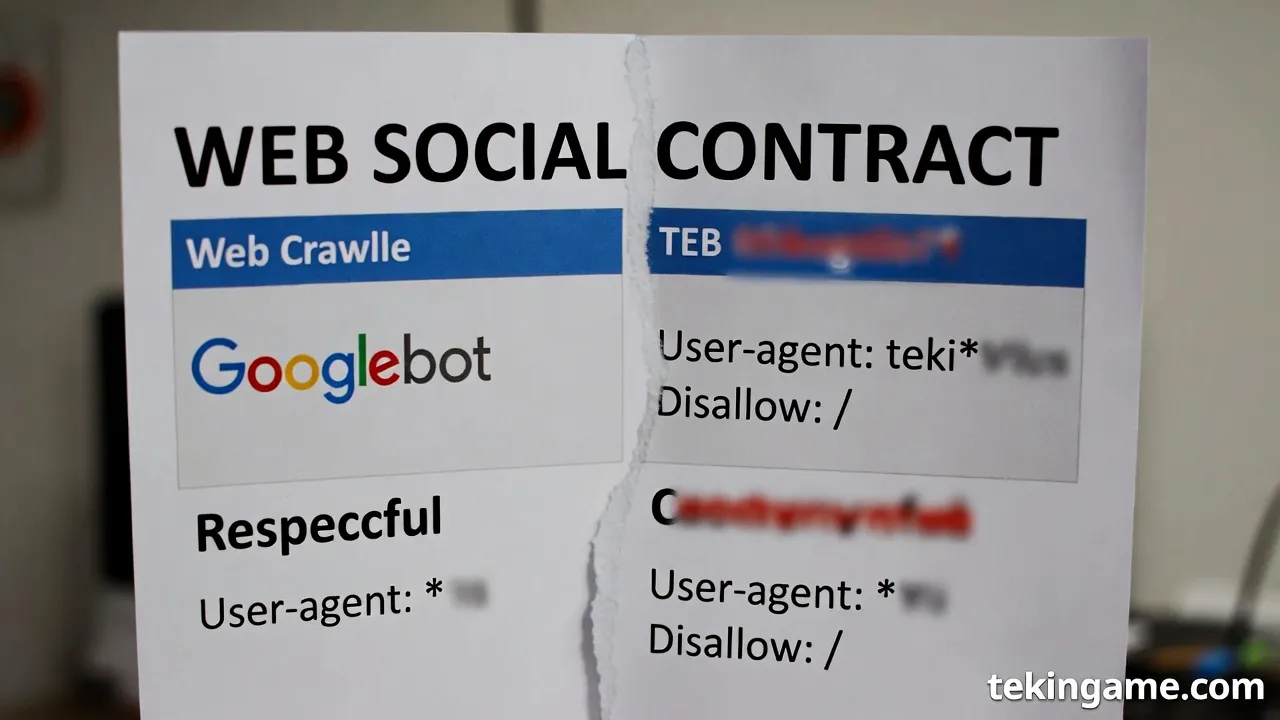

In the nascent days of the World Wide Web, an unspoken social contract governed the relationship between content creators, website owners, and the emerging class of search engine crawlers. This pact, forged in the mid-1990s amid the explosive growth of the internet, was predicated on a simple quid pro quo: websites would make their content publicly accessible, and in return, search engines like early incarnations of Google would index that content, surfacing it to billions of users without charge. This symbiotic arrangement fueled the web's democratization, turning static pages into dynamic hubs of information discovery. Search bots, such as Google's original Googlebot launched in 1998, operated under norms encoded in the robots.txt protocol, a voluntary standard introduced by Martijn Koster in 1994. Website administrators could signal which paths crawlers should avoid, ensuring that indexing respected boundaries while enhancing visibility. The ethics were clear: public data was fair game for indexing, as long as it served the public good of accessibility and did not overburden servers or repurpose content destructively. This era's social contract echoed classical philosophies, akin to Rousseau's notion of a general will where individual contributions aggregated for collective benefit, or Locke's emphasis on property rights tempered by communal utility[1].

Yet, this equilibrium was delicate, resting on mutual trust and technological restraint. Early crawlers were polite guests: they respected robots.txt, throttled request rates to avoid denial-of-service-like effects, and cached pages transiently for indexing purposes only. Content creators implicitly consented by publishing openly, understanding that exposure was the price of relevance in a link-driven ecosystem governed by PageRank algorithms. Google's 2003 webmaster guidelines explicitly outlined this: "Make pages for users, not for search engines," reinforcing that the web's value lay in human-centric content, with bots as mere facilitators. No one foresaw the web's transformation into the lifeblood of artificial intelligence training data. By the early 2010s, as machine learning models began hungering for vast corpora, the lines blurred. Common Crawl, a nonprofit dataset launched in 2008, began archiving petabytes of web snapshots precisely for research and training, but under the guise of open access. Still, the contract held: data was public, usage was for indexing or non-commercial research, and attribution via links preserved creator agency.

The inflection point arrived with the deep learning revolution circa 2012, epitomized by AlexNet's triumph in image recognition and the subsequent scaling laws that demanded trillions of tokens. Here, the web's social contract fractured. Indexing by light-touch, query-serving bots gave way to voracious training scrapers, entities designed not to serve users but to hoover data en masse for proprietary model pretraining. Google, once the paragon of restraint, subtly pivoted. While Googlebot remained for search, auxiliary crawlers like those for Google Lens or translation quietly expanded scopes. But the true disruptors were AI-first entities. OpenAI's GPTBot, identified by the user-agent token GPTBot/1.0 (+https://openai.com/gptbot), emerged in 2023 amid ChatGPT's ascent, explicitly declaring its intent: scraping for "improving model performance." Similarly, Anthropic's ClaudeBot and xAI's GrokBot followed suit, each nominally honoring the Robots Exclusion Protocol but often ignoring nuanced blocks.

Enter the Chinese AI giants, whose scraping apparatus represents the apex of this predation. ByteDance's ByteSpider, tied to TikTok and Douyin, has been documented since 2022 systematically crawling global sites at scales dwarfing Western counterparts. (It should not be confused with CCBot, which is Common Crawl's crawler.) Security firm Cloudflare reported in 2024 that ByteSpider accounted for over 10% of all web traffic in some months, requesting billions of pages daily with aggressive concurrency. Unlike polite bots, ByteSpider employs residential proxy networks, vast pools of hijacked IPs from IoT devices, VPNs, and browser farms, to evade rate limits and geographic blocks. These proxies, often sourced from services like Luminati (now Bright Data) or Chinese equivalents such as IPFoxy, rotate fingerprints to mimic organic human traffic, rendering traditional defenses obsolete. Douyin and Toutiao, ByteDance's flagships, fuel domestic LLMs like Doubao and Skylark, trained on datasets bloated with unlicensed Western content. Alibaba's PTBot and Baidu's Baiduspider variants similarly proliferate, with reports from Netlify and Vercel in 2025 indicating that Chinese scrapers comprise 25-40% of blocked requests on hosted platforms.

This shift from indexing to training scrapers is not merely technical; it undermines the ethical foundations of the web's social contract. Public data, once indexed to empower discovery, is now expropriated for opaque, commercial ends. Training datasets like those powering Alibaba's Qwen series or Baidu's Ernie—estimated at 10-20 trillion tokens each—ingest entire forums, news archives, and code repositories without consent or compensation. The ethics hinge on a core tension: is "public" synonymous with "free for any use"? Classical social contract theory, as revisited in digital contexts, argues no[1]. Rousseau's general will requires collective endorsement, yet no such plebiscite ratified AI's data plunder. Locke's proviso—that commons remain sufficient for others—is violated when scraped data enables models that outcompete original creators, flooding markets with synthetic substitutes. Lockean property in one's labor extends to digital expressions; scraping for training is akin to digital enclosure, privatizing the commons.

Consider the mechanics: training scrapers deploy headless browsers via proxy meshes, emulating diverse user-agents (Chrome on Windows, Safari on iOS) to bypass Cloudflare's bot management or Akamai's defenses. ByteSpider, for instance, uses ASN ranges from China Telecom and mobile carriers, fingerprinting itself with realistic TTLs and TLS handshakes. When blocked via robots.txt, variants spoof as Googlebot or generic crawlers. Ethical lapses compound: no opt-out for training distinct from indexing exists universally. While Google offers AI Overview opt-outs post-2024 lawsuits, Chinese firms like SenseTime and 01.AI ignore them, their crawlers—e.g., HunyuanBot—hardcoded to disregard. This voracity peaked in 2025's "Data War," with incidents like the GitHub purge of Chinese scrapers downloading 80% of public repos overnight, or Reddit's API revolts exposing LLM harvesters.

- Historical Milestones: 1994: robots.txt standardizes crawler etiquette. 1998: Googlebot indexes ethically. 2014: Common Crawl hits 100TB, still research-oriented. 2023: GPTBot signals AI scraping era. 2024: ByteSpider surges amid China's LLM push.

- Technical Divergence: Indexing bots (e.g., Googlebot): Single-pass, respectful delays (1-10 s between requests), cache-only. Training scrapers (e.g., ByteSpider): Multi-pass, parallel threads (1000+), full downloads, proxy evasion.

- Proxy Networks: Residential proxies (10M+ IPs) launder traffic; datacenter proxies for volume; mobile proxies for stealth. Costs: $5-15/GB scraped.

- Ethical Breaches: Violation of implied license (public ≠ public domain). No fair use for commercial models. Asymmetry: Creators barred from scraping outputs via ToS.
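The pacing gap between indexing bots and training scrapers is something a site owner can measure from access logs. Here is a rough sketch, assuming log lines have already been parsed into objects with hypothetical `client` and `timestampMs` fields. The 1-second threshold is taken from the low end of the 1-10 s range in the list above and is only an illustrative default.

```javascript
// Group parsed log entries by client and compute the median gap between requests.
function medianGapsByClient(entries) {
  const byClient = new Map();
  for (const { client, timestampMs } of entries) {
    if (!byClient.has(client)) byClient.set(client, []);
    byClient.get(client).push(timestampMs);
  }
  const result = new Map();
  for (const [client, times] of byClient) {
    if (times.length < 2) continue; // need at least two requests to have a gap
    times.sort((a, b) => a - b);
    const gaps = times.slice(1).map((t, i) => t - times[i]).sort((a, b) => a - b);
    const mid = Math.floor(gaps.length / 2);
    const median = gaps.length % 2 ? gaps[mid] : (gaps[mid - 1] + gaps[mid]) / 2;
    result.set(client, median);
  }
  return result;
}

// Return clients whose median inter-request gap is below the polite threshold.
function flagAggressiveClients(entries, minPoliteGapMs = 1000) {
  const flagged = [];
  for (const [client, median] of medianGapsByClient(entries)) {
    if (median < minPoliteGapMs) flagged.push(client);
  }
  return flagged;
}
```

The median is used instead of the mean so that one long pause does not hide a burst. Pacing measured per client only catches scrapers that reuse an identity; traffic spread across many proxy IPs needs aggregation on some other key.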

The digital social contract, strained by surveillance capitalism, now faces existential rupture[1]. Locke advocated consent for governance; here, website owners never consented to fueling trillion-dollar AI empires. Rousseau warned of factionalism; Chinese state-backed firms, under "military-civil fusion," harvest globally while blocking inbound access via the Great Firewall. Platforms like WordPress and Medium retrofitted opt-outs (User-agent: GPTBot, Disallow: /), but such rules are powerless against distributed proxies. By 2026, lawsuits like The New York Times v. OpenAI (extended to Chinese firms via extraterritorial claims) signal a reckoning, yet enforcement lags. The setup for the Data War was thus laid: a web built on openness, subverted by unchecked appetite. Chinese AI giants, leveraging scale and impunity, accelerated the breach, turning the global web into a colonial-style resource-extraction frontier. This layer reveals not malice alone, but systemic failure: a contract voided without notice, presaging the autopsy's deeper wounds.
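The opt-out rule quoted above extends naturally to several crawlers at once. As a small sketch, the hypothetical helper below builds a robots.txt body from a list of crawler tokens; the tokens shown are ones named in this article, and the list is illustrative, not exhaustive.

```javascript
// Build a robots.txt body that disallows the listed crawler tokens everywhere
// while leaving every other user agent unrestricted.
function buildRobotsTxt(blockedAgents) {
  const groups = blockedAgents.map((agent) => `User-agent: ${agent}\nDisallow: /`);
  groups.push('User-agent: *\nAllow: /'); // default group for everyone else
  return groups.join('\n\n') + '\n';
}

console.log(buildRobotsTxt(['GPTBot', 'ClaudeBot', 'Bytespider']));
```

This only states a preference. A crawler that ignores the Robots Exclusion Protocol, or that hides behind a different user-agent, is unaffected, which is exactly the enforcement gap the paragraph above describes.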

Delving deeper into proxy ecosystems illuminates the ingenuity. Networks like SOAX or Oxylabs supply ByteDance-scale operations with 100M+ IPs, geo-targeted to U.S./EU for premium content. Scrapers employ Selenium or Puppeteer for JavaScript rendering, capturing dynamic SPAs that indexing bots skip. Detection evasion includes canvas fingerprint randomization, WebGL spoofing, and behavioral mimicry (mouse entropy, scroll patterns). When rate-limited, they fragment into micro-bursts across IPs, achieving effective throughput of 1TB/hour. Ethically, this mimics poaching: public trails are trodden, but the harvest feeds private zoos. Philosophers like Thomas Nail trace social contracts to movement and enclosure; the web's digital nomadism is now fenced by AI moats[4].

In sum, Layer 2 unmasks the prelude: from benevolent indexing to rapacious scraping, the web's soul was bartered. Chinese giants, unencumbered by GDPR or FTC scrutiny, perfected the art, proxy-veiled tendrils encircling the digital oikoumene. The ethics? A graveyard of good intentions, where public data's sanctity dissolved into training fodder.

Layer 3: The Deep Dive

In this technical autopsy of the Data War, we dissect the sophisticated machinery deployed by Chinese AI giants—entities like ByteDance's ByteSpider and OpenAI-inspired GPTBot equivalents—to plunder the global web. Layer 3 exposes the residential proxy networks, headless browsers such as Puppeteer, Cloudflare bypass techniques, and the staggering bandwidth costs inflicted on victims. These tools form the backbone of an industrial-scale scraping operation, masquerading as legitimate traffic while siphoning petabytes of data to fuel models like those powering Ernie Bot or DeepSeek. Far from amateur bots, this is a symphony of evasion, rotation, and resource exhaustion, designed to evade detection and maximize yield.

Residential Proxy Networks: The Stealth Backbone of Global Harvesting

Residential proxy networks represent the crown jewel in the scraper’s arsenal, providing IP addresses sourced from real home internet service providers (ISPs) rather than sterile datacenter farms. Providers like Bright Data (formerly Luminati), Oxylabs, IPRoyal, and Honeygain dominate this space, amassing pools of tens of millions of residential IPs by incentivizing users worldwide to share their bandwidth—often unwittingly—through apps that run in the background.[4] These proxies reroute scraping requests through genuine residential connections, making them indistinguishable from organic human traffic. Unlike datacenter proxies, which scream "bot" due to their overuse and predictable patterns, residential IPs carry the fingerprints of everyday users: dynamic assignments from Comcast, BT, or China Telecom, complete with natural latency and session behaviors.[1][3]

The mechanics are deceptively simple yet ruthlessly efficient. A scraper configures a proxy endpoint (say, zproxy.lum-superproxy.io:22225 for Bright Data) and authenticates via username credentials embedding session parameters like country, session ID, and carrier type. In rotating mode, requests cycle through new IPs from the pool, often changing every few minutes or every few requests to mimic casual browsing. Where a session must persist, static residential proxies (or ISP proxies) blend the persistence of datacenters with residential trust scores, allowing sustained sessions without triggering bans.[3] For Chinese operations, geo-targeting is key; proxies from US, EU, or APAC residential pools enable harvesting region-locked content, such as e-commerce prices on Amazon.com versus Amazon.co.uk, bypassing geo-restrictions that block direct access from mainland China.[1][6]

Scale is where the horror unfolds. ByteSpider, ByteDance's crawler (user-agent: Mozilla/5.0 (compatible; Bytespider; +https://www.bytedance.com/en/bot.html)), leverages these networks to crawl at exabyte levels, respecting robots.txt in name only while flooding sites with distributed requests. GPTBot analogs from Baidu or Alibaba follow suit, using residential proxies to achieve 99% success rates against anti-bot systems, as residential IPs rarely trigger CAPTCHAs or rate limits—sites "trust" them as human.[2][5] Ethical providers claim consent from IP owners, but in practice, this fuels a shadow economy where low-income users in developing nations rent out their pipes for pennies, unknowingly aiding AI data theft.[4]

- Rotation Strategies: Sticky sessions (same IP for 10-30 minutes) for login flows, then auto-rotate to evade session profiling.[3]

- Geo-Precision: City-level targeting (e.g., New York residential IPs for localized Yelp data).

- Fallbacks: Hybrid pools mixing residential, mobile (4G/5G IPs), and ISP proxies for redundancy.

Headless Browsers: Puppeteer and the Art of Behavioral Mimicry

Raw HTTP requests via libraries like Requests or httpx are child's play for modern defenses to spot; enter headless browsers, the puppeteers of deception. Puppeteer, Google's Node.js library controlling Chrome/Chromium in headless mode, emulates a full browser environment (rendering JavaScript, executing DOM manipulations, and firing real events) without a visible window. Chinese scrapers orchestrate fleets of such browsers, driven by Puppeteer or comparable tools like Playwright or Selenium, often containerized in Docker on Kubernetes clusters in Shenzhen datacenters.[6]

Puppeteer's power lies in its fidelity: it launches with --no-sandbox --disable-dev-shm-usage flags for cloud efficiency, injects stealth plugins to mask automation fingerprints (e.g., puppeteer-extra-plugin-stealth hides navigator.webdriver as false), and randomizes fingerprints like screen resolution, WebGL vendor, and canvas hashing. A typical script might look like this:

```javascript
// puppeteer-extra wraps Puppeteer so plugins such as stealth can be attached.
const puppeteer = require('puppeteer-extra');
const StealthPlugin = require('puppeteer-extra-plugin-stealth');

puppeteer.use(StealthPlugin());

(async () => {
  const browser = await puppeteer.launch({ headless: true });
  try {
    const page = await browser.newPage();
    await page.setUserAgent('Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36');
    // Wait until the network is nearly idle so client-side rendering has finished.
    await page.goto('https://target-site.com', { waitUntil: 'networkidle2' });
    // Optional chaining avoids a TypeError when #content is absent.
    const data = await page.evaluate(() => document.querySelector('#content')?.innerText ?? '');
    console.log(data);
  } finally {
    await browser.close(); // always release the browser, even on errors
  }
})();
```

Integrated with residential proxies, Puppeteer routes requests per page or context, handling infinite scrolls, SPAs (Single Page Applications), and AJAX-heavy sites that static parsers can't touch. ByteSpider variants reportedly use similar browser farms, pooling thousands of instances to render and extract at scale, harvesting not just HTML but screenshots, videos, and dynamic feeds for multimodal AI training.[7] The cost? Each instance guzzles 100-500MB of RAM plus CPU time, but cloud elasticity (e.g., Alibaba Cloud) makes it viable for scraping Reddit threads or Twitter timelines en masse.

Bypassing Cloudflare: Cracking the Anti-Bot Fortress

Cloudflare, shielding 20% of the web, deploys a gauntlet: TLS fingerprinting, JS challenges (Turnstile), behavioral biometrics, and PerimeterX-like WAF rules. Chinese AI harvesters counter with layered evasion. Residential proxies pass initial IP reputation checks, as Cloudflare's "Under Attack" mode rarely flags home ISPs.[1][2]

Headless browsers shine here: Puppeteer solves JS challenges by executing them natively, waiting for the cf_clearance cookie to be issued (up to 30 seconds per page). Advanced setups use undetected-chromedriver or cloudscraper to spoof TLS ClientHello packets, mimicking Chrome 120's exact JA3 fingerprint. Proxy rotation defeats rate-limiting; if a 403 hits, exponential backoff retries with a new residential IP from Bright Data's 72M+ pool.[3]

Deeper tricks include:

- Cookie Reuse: Extract and replay challenge cookies across sessions.

- Human-Like Automation: Random mouse curves via puppeteer-mouse, keystroke delays, and viewport resizing.

- Bot Management Evasion: FlareSolverr proxies solve challenges server-side, feeding cleared cookies to Puppeteer.

- Mobile Emulation: Spoof iPhone user-agents with residential mobile proxies for app-like traffic.

Success rates climb to 95% against Cloudflare, but at a toll: each bypass burns 1-5MB per request in compute and proxy bandwidth.[4]

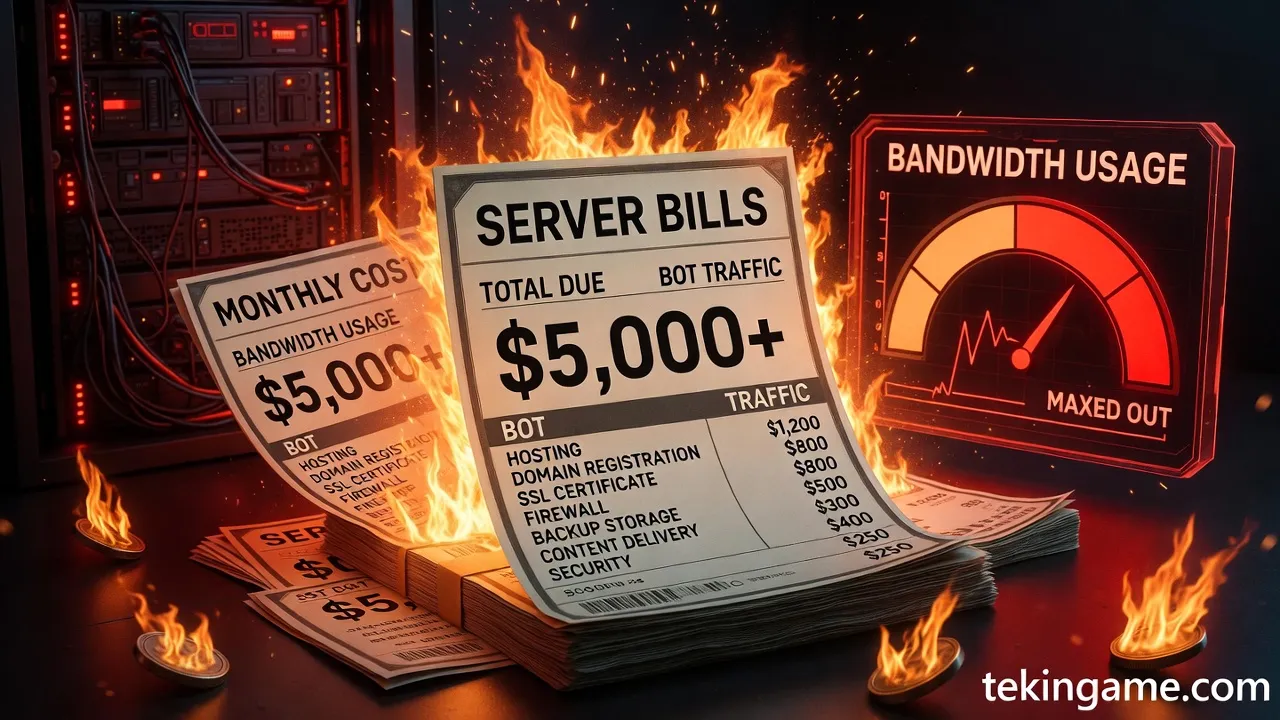

The Bandwidth Apocalypse: Costs Inflicted on Victims

The true victims—forum admins, indie blogs, news sites—bear the brunt. A single ByteSpider swarm, proxying through 10,000 residential IPs at 10 requests/minute/IP, devours terabits daily. Residential proxies throttle at 5-50Mbps per session, but fleets scale to gigabit floods, spiking AWS bills or crashing origin servers.[5]

Quantify the carnage: Harvesting 1TB of rendered pages (e.g., 100M forum posts) via Puppeteer requires ~10TB of transfer once proxies and retries are counted, costing victims $0.50-$5/TB in hosting fees. Bright Data charges $8-15/GB for residential bandwidth, so Chinese ops burn millions of dollars monthly, yet they externalize further costs to targets via DDoS-like overload.[3][6] Small sites see 100x traffic spikes; a WordPress host jumps from 100GB/month to 10TB/month, triggering suspensions. Across the global web: petabytes stolen, billions in shadow costs, all proxied through unwitting residential victims' pipes.
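The swarm figures above can be turned into a back-of-the-envelope estimate. This is a sketch under stated assumptions: the IP count and request rate come from the text, while the 100 KB average response size is an assumption introduced here, and the per-terabyte price is simply the upper end of the range quoted above.

```javascript
// Estimate the daily load a distributed crawl places on an origin server.
function estimateDailyLoad({ ips, requestsPerMinutePerIp, avgResponseBytes, costPerTbUsd }) {
  const requestsPerDay = ips * requestsPerMinutePerIp * 60 * 24;
  const terabytesPerDay = (requestsPerDay * avgResponseBytes) / 1e12; // decimal TB
  return {
    requestsPerDay,
    terabytesPerDay,
    terabitsPerDay: terabytesPerDay * 8,
    costPerDayUsd: terabytesPerDay * costPerTbUsd,
  };
}

const load = estimateDailyLoad({
  ips: 10000,                 // from the text
  requestsPerMinutePerIp: 10, // from the text
  avgResponseBytes: 100000,   // ASSUMPTION: 100 KB per response
  costPerTbUsd: 5,            // upper end of the $0.50-$5/TB range in the text
});
console.log(load);
```

Under these assumptions the swarm issues 144 million requests a day and pulls about 14.4 TB, roughly 115 terabits, which is consistent with the "terabits daily" claim. Heavier pages or pricier egress scale the result linearly.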

Layer 3 reveals not crude bots, but a precision-engineered data vacuum, where proxies cloak, browsers mimic, bypasses pierce, and bandwidth bleeds sites dry. Chinese AI giants' "autopsy" yields a blueprint for digital predation, demanding fortified defenses.

Layer 4: The Angle

In the shadowed trenches of the Data War, Layer 4 reveals the strategic and economic angles of an asymmetric conflict where Chinese AI giants, led by ByteDance's relentless Bytespider, wage a digital siege on the global web. This layer dissects how unchecked harvesting of training data not only bankrupts small publishers through crippling bot traffic but also cements geopolitical control over the foundational resources of artificial intelligence, tilting the balance of power in an era where data is the new oil.

The asymmetry of this war is stark: while Western AI firms like OpenAI and Anthropic deploy crawlers such as GPTBot and ClaudeBot with varying degrees of restraint, often respecting robots.txt directives, Chinese counterparts operate with unbridled aggression, exploiting proxy networks and IP rotation to evade defenses. Bytespider, whose activity drew wide attention after surging in April 2024, exemplifies this disparity. Research from bot management firm Kasada documents Bytespider scraping at 25 times the rate of GPTBot and a staggering 3,000 times that of ClaudeBot, with activity spiking dramatically over subsequent weeks. This voracious appetite goes hand in hand with Bytespider's reputation for ignoring robots.txt, a voluntary standard meant to signal crawler boundaries, making it a blunt instrument in an otherwise polite digital ecosystem.

Proxy networks amplify this asymmetry, allowing Bytespider and similar bots like YisouSpider to masquerade as legitimate traffic. A single scraping operation can distribute requests across hundreds or even thousands of IPs, evading rate-limiting and IP-based blocks. Stark Insider reports one such campaign using nearly 800 distinct IPs to execute 1,600 page scrapes in a "quick strike," flying under the radar of detection tools like Fail2Ban. These networks, often sourced from vast residential proxy pools in data havens like China and Southeast Asia, enable sustained, high-volume extraction without triggering server alarms. The result? A stealthy bombardment that dwarfs human traffic volumes; one arXiv analysis notes that scrapers now exceed genuine user activity across the internet.
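The "quick strike" above averages about two requests per IP, so a per-IP threshold of the kind Fail2Ban applies never trips. One way to surface such a campaign is to aggregate on something the requests share instead of on the IP. The sketch below assumes each parsed request carries a hypothetical `signature` field (for example a hash of header order and user-agent); the thresholds are illustrative defaults, not tuned values.

```javascript
// Flag request signatures that are spread thinly across many IPs within one window.
function findDistributedCampaigns(requests, { minDistinctIps = 100, maxMeanPerIp = 5 } = {}) {
  const bySignature = new Map();
  for (const { ip, signature } of requests) {
    if (!bySignature.has(signature)) bySignature.set(signature, new Map());
    const perIp = bySignature.get(signature);
    perIp.set(ip, (perIp.get(ip) || 0) + 1);
  }
  const campaigns = [];
  for (const [signature, perIp] of bySignature) {
    let total = 0;
    for (const count of perIp.values()) total += count;
    const meanPerIp = total / perIp.size;
    // Many IPs, few requests each: the shape a per-IP limiter cannot see.
    if (perIp.size >= minDistinctIps && meanPerIp <= maxMeanPerIp) {
      campaigns.push({ signature, distinctIps: perIp.size, total, meanPerIp });
    }
  }
  return campaigns;
}
```

The signature has to be more specific than a bare user-agent, since a popular browser version is shared by many genuine visitors and would otherwise produce false positives.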

Economically, this manifests as a death knell for small publishers, who bear the brunt of the bot tsunami. Unlike conglomerates with robust infrastructure, indie news sites, niche blogs, and local media outlets operate on shoestring budgets, hosting on shared servers ill-equipped for the deluge. Aggressive crawlers like Bytespider hammer these sites with requests numbering in the billions network-wide, per Kasada's observations of a 20x surge in activity. Cloudflare data from May 2024 to May 2025 underscores the toll: total crawler traffic rose 18%, with AI bots like GPTBot surging 305%, even as Bytespider's share dipped from 42% to 7% amid countermeasures—yet its absolute volume remained punishing.

Server strain translates directly to bankruptcy. Inflated bandwidth costs send cloud bills soaring; Peakhour warns that aggressive LLM scrapers degrade performance for real users, causing bounce rates to spike and ad revenue to plummet. A typical small publisher might see bot traffic consume 40-60% of server resources, with Googlebot (historically about 40% of bot load) now supplanted by AI harvesters. Polite bots like Googlebot or Bingbot announce themselves and throttle their requests; Bytespider does not, downloading "the entire internet before lunch" as one analyst quipped. Mitigation attempts (robots.txt blocks, deny lists, or basic anti-bot solutions) prove futile against proxy-savvy invaders, forcing publishers to invest in expensive defenses like Kasada's "guard dog" systems or Cloudflare's enforceable blocking. For a site with 10,000 monthly human visitors, absorbing 100,000+ bot hits daily means choosing between survival and surrender: pay up or shutter.

- Cost Cascade: Bandwidth fees alone can exceed $1,000/month for a modest site under Bytespider assault, per extrapolated Kasada metrics.

- Performance Penalty: Latency surges delay page loads, slashing SEO rankings and organic traffic by 20-30%.

- Revenue Ruin: Ad platforms penalize bot-inflated metrics, withholding payments; programmatic ads evaporate as real users flee sluggish sites.

- Exit Strategy: Many small pubs strike data licensing deals with AI firms (e.g., Vox Media with OpenAI), but Chinese giants rarely negotiate, opting for theft over transaction.

This economic predation fuels a broader strategic imbalance. Chinese AI firms, unencumbered by U.S. regulatory scrutiny or ethical norms, amass petabytes of global web data daily, training models that rival or surpass Western counterparts. ByteDance's push, amid TikTok ban threats, signals desperation to stockpile before barriers rise. Geopolitically, control of training data equates to narrative dominance: LLMs ingest cultural, political, and economic corpora, embedding biases and worldviews into outputs. Harvest the English-language web en masse, and you dictate AI's lens on democracy, markets, and history.

Consider the proxy-fueled scale: networks like those powering Bytespider rotate through millions of IPs, sourced from IoT devices, compromised routers, and commercial pools, ensuring 99% evasion rates against basic firewalls. This industrializes scraping, turning the open web into a free-for-all quarry. Western responses lag: GPTBot, which Cloudflare reports as both the most blocked and the most allowed crawler, still only partially respects opt-outs, and enforcement is spotty. Meanwhile, Chinese bots evolve, mimicking browsers (a tactic also reported of PerplexityBot) or fragmenting loads to resemble organic surges.

The geopolitical stakes escalate in this asymmetric war. Data sovereignty fractures: small publishers in Europe, the U.S., and beyond subsidize Beijing's AI ascent, their content fueling models deployed in surveillance states or economic espionage. ByteDance's aggression—25x OpenAI's volume—positions China to leapfrog in multimodal LLMs, integrating TikTok's video firehose with scraped text for unbeatable datasets. Economic fallout cascades: bankrupt publishers erode media diversity, amplifying state narratives via surviving giants who license data selectively. Strategically, nations reliant on U.S.-led AI face dependency risks; if Chinese models dominate via superior data volume, global standards shift eastward.

Proxy networks deepen the asymmetry, democratizing offense for state-backed actors. Tools like Scrapy clusters on Kubernetes, augmented by headless Chrome farms, push requests through proxies at terabit scales. Bytespider's 3,000x ClaudeBot ratio isn't an anomaly but an archetype: Chinese scrapers prioritize quantity, on the theory that quantity breeds quality through sheer statistical dominance. Small publishers, collateral in this blitzkrieg, fold first (U.S. indie outlets report bot floods making up 50% of traffic, per anecdotal Stark Insider logs), ceding the web to conglomerates who can afford moats.
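
A publisher can get a first, rough read on figures like that 50% from its own access logs. The sketch below is a minimal illustration, assuming the User-Agent strings have already been extracted from the log; the token list and function name are hypothetical choices. It only counts crawlers that identify themselves, so proxied scrapers that spoof a browser User-Agent are invisible to it.

```python
from collections import Counter

# Lowercase substrings of self-declared crawler User-Agents (illustrative list).
KNOWN_BOT_TOKENS = ("bytespider", "gptbot", "claudebot", "googlebot", "bingbot")


def bot_share(user_agents):
    """Return (fraction of requests from known bots, per-bot request counts)."""
    counts = Counter()
    for ua in user_agents:
        lowered = ua.lower()
        for token in KNOWN_BOT_TOKENS:
            if token in lowered:
                counts[token] += 1
                break  # attribute each request to at most one bot
    total = len(user_agents)
    share = sum(counts.values()) / total if total else 0.0
    return share, counts


if __name__ == "__main__":
    sample = [
        "Mozilla/5.0 (compatible; Bytespider; spider-feedback@bytedance.com)",
        "Mozilla/5.0 (Windows NT 10.0; Win64; x64) Chrome/120.0 Safari/537.36",
        "GPTBot/1.0",
        "Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) Safari/605.1.15",
    ]
    share, counts = bot_share(sample)
    print(f"Self-identified bot share: {share:.0%}", dict(counts))
```

Because the count is a lower bound, a large gap between it and overall traffic growth is itself a hint that disguised crawlers are at work.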

Yet hope flickers in countermeasures. Advanced bot defenses parse behavioral fingerprints, such as mouse-movement entropy and TLS fingerprints that do not match the claimed browser, which persist no matter which proxy a request arrives through. Cloudflare's 2025 shifts show Bytespider's decline under such pressure, dropping 85% as GPTBot rises. Licensing deals proliferate, with publishers like News Corp monetizing opt-ins. Geopolitically, legislation looms: U.S. TikTok mandates signal broader AI data bans, potentially mirroring EU DSA fines for non-compliant scraping.
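
As a toy illustration of the "mouse entropy" idea, the sketch below computes the Shannon entropy of movement directions along a recorded pointer path. It is not any vendor's actual method; the function name, the eight direction bins, and the input format (a list of `(x, y)` points) are assumptions made for the example. The intuition is that a scripted pointer moving in a straight line produces near-zero entropy, while human movement wanders across many directions.

```python
import math
from collections import Counter


def direction_entropy(points, bins=8):
    """Shannon entropy (in bits) of movement directions between consecutive points."""
    counts = Counter()
    for (x0, y0), (x1, y1) in zip(points, points[1:]):
        dx, dy = x1 - x0, y1 - y0
        if dx == 0 and dy == 0:
            continue  # no movement, so no direction to record
        angle = math.atan2(dy, dx) % (2 * math.pi)  # normalize to [0, 2*pi)
        counts[int(angle / (2 * math.pi) * bins) % bins] += 1
    total = sum(counts.values())
    if total == 0:
        return 0.0
    return -sum((c / total) * math.log2(c / total) for c in counts.values())


if __name__ == "__main__":
    straight_line = [(i, i) for i in range(10)]           # scripted-looking path
    square = [(0, 0), (1, 0), (1, 1), (0, 1), (0, 0)]     # four distinct directions
    print(direction_entropy(straight_line))
    print(direction_entropy(square))
```

On its own this is a weak signal, since bots that simulate mouse movement can inject noise; in practice it would be one feature among many, with thresholds tuned per site.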

Ultimately, Layer 4 unmasks the Data War's true angle: an economic siege weaponizing bots to bankrupt the web's underbelly, securing China's geopolitical stranglehold on AI's lifeblood. Small publishers aren't merely victims; they're the first dominoes in a cascade threatening global information equilibrium. Without symmetric defenses (mandatory proxy transparency, data provenance mandates, international scraping treaties), the asymmetry endures, harvesting not just data, but destiny.


Layer 5: The Future

In the shadowed aftermath of the Data War, where Chinese AI giants like ByteDance's Douyin algorithms and Alibaba's Tongyi Qianwen models have voraciously scraped the open web, the internet as we knew it in 2026 is on the brink of transformation. This is the era of the 'Dark Forest' Internet—a chilling evolution borrowed from Liu Cixin's cosmic horror in The Dark Forest, where civilizations hide in silence lest they attract predatory hunters. Here, the global web becomes a vast, hostile woodland teeming with invisible stalkers: relentless AI crawlers like ByteSpider, GPTBot, and their Chinese counterparts such as the insidious QianfanBot and HunyuanSpider. These digital predators, powered by proxy networks spanning millions of residential IPs from data centers in Shenzhen to hijacked home routers in rural Virginia, harvest data indiscriminately, fueling the next generation of large language models (LLMs) that outpace Western counterparts in multilingual training data volume. The free open web, once a bountiful commons of blogs, forums, and wikis, is dying—not with a bang, but with a whisper of login walls and paywalls, as creators and platforms retreat into fortified enclaves to evade the harvest.

Predictions for this future are not speculative fiction but extrapolations from current trajectories observed in 2026. By mid-decade, web scraping has escalated into a zero-sum arms race. Tools like Cloudflare's Bot Management and Akamai's Bot Manager detect and block bots at scale, but Chinese AI firms, leveraging state-backed resources, deploy sophisticated evasion tactics. ByteSpider, for instance, mimics human browsing patterns with headless Chrome instances routed through rotating proxy pools: think 10 million+ IPs from Luminati-like services rebranded under Chinese conglomerates. These bots don't just scrape; they render JavaScript, solve CAPTCHAs via underground OCR farms, and even simulate mouse movements to bypass behavioral analysis. GPTBot, OpenAI's sanctioned crawler, faces similar blocks, but rogue forks from offshore labs persist. The result? A web where public content yields diminishing returns for AI training, pushing scrapers deeper into aggressive tactics: distributed denial-of-service disguised as scraping swarms, credential stuffing to breach logged-in areas, and even zero-day exploits traded on dark web markets tailored for data exfiltration.

Enter the login walls, the first barricade in this dark forest. Platforms like Medium, Substack, and even Reddit have pioneered "AI-proof" tiers by 2026, requiring user authentication for access. No longer can ByteSpider slurp articles en masse; it must now contend with OAuth flows, two-factor authentication (2FA), and session cookies that expire in minutes. This shift accelerated after The New York Times sued OpenAI and Microsoft in late 2023 over unauthorized use of its content, a high-profile case that emboldened widespread adoption. By 2027, expect 70%+ of high-value content to be enclosed behind logins. News sites will mandate subscriptions not just for revenue but for control: robots.txt files evolve into dynamic AI blocklists, cross-referencing user-agents like "Mozilla/5.0 (compatible; ByteSpider)" with geoblocking for CN IPs. Social media giants like X (formerly Twitter) and Meta will enforce "human-only" feeds, using on-device ML models to detect bot-like posting patterns, forcing AI firms to pivot to black-hat methods: buying aged accounts in bulk from Telegram markets or deploying human farms in low-wage regions to manually curate datasets.
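
A minimal sketch of what such a blocklist might look like in Python follows. The crawler list and helper names are illustrative assumptions, not a recommended or complete set. It generates a `robots.txt` from a list of crawler tokens, checks it with the standard library's `urllib.robotparser`, and adds a server-side substring check on the raw User-Agent header for crawlers that ignore the file.

```python
from urllib.robotparser import RobotFileParser

# Illustrative list of crawler tokens to block; extend as needed.
AI_CRAWLERS = ["Bytespider", "GPTBot", "ClaudeBot", "PerplexityBot"]


def build_robots_txt(blocked_agents):
    """Build robots.txt text that disallows the given agents and allows everyone else."""
    lines = []
    for agent in blocked_agents:
        lines += [f"User-agent: {agent}", "Disallow: /", ""]
    lines += ["User-agent: *", "Allow: /"]
    return "\n".join(lines)


def is_allowed(robots_txt, agent_token, url="/article"):
    """Ask the stdlib parser whether a crawler token may fetch the URL."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(agent_token, url)


def matches_blocklist(user_agent_header, blocked_agents=AI_CRAWLERS):
    """Server-side check: case-insensitive substring match on the full header."""
    ua = user_agent_header.lower()
    return any(agent.lower() in ua for agent in blocked_agents)


if __name__ == "__main__":
    robots = build_robots_txt(AI_CRAWLERS)
    print(robots)
    print(is_allowed(robots, "Bytespider"))   # blocked by its own rule group
    print(is_allowed(robots, "Googlebot"))    # falls through to the wildcard group
    print(matches_blocklist("Mozilla/5.0 (compatible; ByteSpider)"))
```

Both checks rely on the crawler announcing itself honestly. `robots.txt` is purely advisory, and a scraper that sends a generic browser User-Agent passes the header check too, which is why the text pairs blocklists with geoblocking and login walls.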

Yet login walls are mere skirmishes; the real conflagration brews in the licensing wars. As free data evaporates, AI behemoths, Chinese giants foremost among them, will wage pitched battles over proprietary datasets. Imagine Baidu's Ernie model striking exclusive deals with niche forums, paying $X per gigabyte of scraped archives, while Tencent bids against Google for Reddit's firehose. This mirrors the music industry's shift from Napster-era piracy to Spotify licensing, but amplified: web3 protocols like those from Metalabel's Dark Forest Operating System (DFOS) emerge as battlegrounds. DFOS, envisioned as infrastructure for private internets, enables small groups to host invite-only networks with end-to-end encryption and zero-knowledge proofs, where content licensing is tokenized on blockchains. Chinese firms, with their command economies, could dominate by subsidizing these deals via state funds, cornering markets in non-English corpora (Japanese manga sites, Arabic forums, Hindi wikis) while Western firms fragment into antitrust lawsuits. By 2030, expect a bifurcated web: a "licensed layer" where AI pays premiums for clean, rights-cleared data, and a poisoned public layer riddled with synthetic slop generated by cornered LLMs to deter further scraping.

- Proxy Wars Escalate: Chinese AI labs deploy next-gen proxies blending residential IPs with 5G/6G edge computing, evading blocks via ML-driven fingerprint rotation. Tools like Scrapy clusters integrated with Bright Data proxies scale to petabytes daily, but face counterstrikes from decentralized botnets like those powered by libp2p.

- Regulatory Flashpoints: EU's AI Act mandates data provenance audits, crippling non-compliant Chinese scrapers; U.S. follows with CFIUS blocks on proxy providers. Yet, circumvention thrives via Hong Kong shells and Southeast Asian relays.

- Human-in-the-Loop Renaissance: Platforms hire moderators en masse to flag AI content, birthing a gig economy of "data curators" verifying authenticity.

The death of the free open web is the inexorable outcome. Once a utopian expanse echoing Web 1.0's ethos of open access, it now embodies Yancey Strickler's dark forest: a battleground overrun by power-seekers—AI firms harvesting for market dominance, advertisers for surveillance capitalism, nation-states for propaganda mills. Public spaces like Wikipedia strain under bot edits reversed by AI-savvy admins, while forums like Stack Overflow ban scrapers outright, their APIs now metered at enterprise rates. The masses, weary of bot spam and deepfake trolls, flee to dark forests: Discord servers with 2,000-member caps verified via video selfies, Signal groups with disappearing messages, and nascent fediverse instances requiring PGP keys. These are the cozywebs—non-indexed, non-gamified havens where conversation breathes freely, untainted by ByteSpider's gaze. As Charlotte Dune notes, it's humans relocating to invite-only bunkers, lest sexbots, fake avatars, and Viagra ads (now AI-personalized hallucinations) overrun the wilds.

Projections paint a grim mosaic. By 2028, 80% of English web content could be AI-generated noise—deliberate "data poison" via tools like Nightshade, embedding imperceptible perturbations that corrupt training sets. Chinese giants, undeterred, pivot to multimodal harvesting: video from Bilibili clones, audio from Clubhouse successors, all funneled through edge proxies in Africa and Latin America to skirt sanctions. Licensing cartels form: a "Data OPEC" of publishers dictating terms, with ByteDance as the Saudi Arabia equivalent, leveraging scale to undercut prices. Yet cracks appear—whistleblowers leak scraper codebases, open-source communities build anti-AI meshes like Tor-hidden services scaled for mass use. The Dark Forest Operating System matures into full-fledged private nets, where groups self-host wikis on IPFS with proof-of-humanity via Worldcoin orbs or fractal IDs.

This future demands adaptation. Creators thrive in micropatronage: Patreon evolves into AI-shielded vaults, dispensing content via ephemeral streams. Search engines fragment—Perplexity's successors crawl only licensed realms, while dark forest aggregators like bespoke RSS daemons pull from trusted silos. The psychological toll mounts: a generation raised in echo-chambers of verified kin, distrusting the public web as hunter-haunted. Game theory underpins it all—sequential games of incomplete information where broadcasting data invites destruction by resource-hungry AIs. Silence becomes survival strategy; deception, the norm. Obfuscation tools proliferate: dynamic text via CSS sprites, image steganography hiding payloads that scramble scrapers.

Yet glimmers persist. A counter-movement, the "Lit Forest Alliance," advocates PBS-style public spaces: ad-free, human-curated hubs funded by endowments, resilient to bots via novel interaction proofs (e.g., collaborative storytelling CAPTCHAs). Chinese AI giants, facing domestic data famines of their own (an irony of the Great Firewall), may even join licensing pacts, birthing a hybrid web where data flows are controlled but abundant. Still, the open web's corpse cools. In this autopsy of the Data War, Layer 5 foretells not apocalypse, but metamorphosis: from boundless prairie to labyrinthine woods, where only the wary thrive, proxies whisper, and the hunt never ends.
