1. Introduction: The "Arrow in the Knee" Era is Over

For the past twenty years, video game graphics have evolved from blocky polygons to photorealism that rivals Hollywood blockbusters. Yet, if you walk up to a character in a 2025 AAA game, the interaction is fundamentally identical to what it was in 2005: You press a button, and the character recites a pre-recorded line. You press it again, and they repeat the exact same line.

They are beautiful, high-fidelity puppets with no memory and no brain.

However, a video leaked early this Sunday afternoon suggests that 2026 will be the year this paradigm finally shatters. The footage, seemingly recorded from a closed-door partner presentation, showcases NVIDIA ACE 2.0—the second generation of their Avatar Cloud Engine.

This isn't just a chatbot glued to a 3D model. It is a glimpse into a future where NPCs (non-player characters) are persistent agents that live, remember, and judge you.

2. Dissecting the Leak: The Vengeful Blacksmith

The leaked footage, which is currently being scrubbed from X (formerly Twitter) by copyright strikes, takes place in a highly detailed Unreal Engine 5 environment resembling a medieval forge.

2.1. Scene 1: Contextual Awareness

The player approaches a blacksmith NPC and talks to him through a microphone. Instead of selecting a dialogue option, the player speaks naturally, and rudely.

Player: "Hey old man, give me your sharpest blade. Hurry up, I don't have all day."

In a traditional game, the script would trigger a standard "Welcome to my shop" response. Here, the AI analyzes the sentiment and tone of the input.

The Blacksmith’s brow furrows (rendered in real-time). He crosses his arms.

Blacksmith (AI-Generated Voice): "With a tongue that sharp, you don't need a blade. Get out of my forge before I smelt you down for scrap."

The transaction window does not open. The NPC refuses to trade based on the social interaction.

2.2. Scene 2: Episodic Memory

The video then cuts to a "Day 2" scenario. The player character returns to the shop. This is the moment that has the tech community buzzing.

Player: "Look, I'm sorry about yesterday. We got off on the wrong foot. Can I see your wares?"

The AI must now retrieve memory from a previous session.

Blacksmith: "Hmph. So you can be civil. Fine. But for the disrespect, my prices just went up 20%. Take it or leave it."

The UI overlay updates, and the cost of a longsword visibly jumps from 100 gold to 120 gold.

This appears to demonstrate Episodic Memory. The NPC didn't just generate a text response; it altered the game state (pricing variables) based on a past interaction. The "Action" had a permanent "Consequence" without a developer explicitly scripting an "If Player is Rude" quest line.
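To make the idea concrete, here is a minimal sketch of how a remembered event could feed into a pricing variable. It is not taken from the leak or from any NVIDIA API: the `NpcMemory` class, the `player_was_rude` event name, and the `quoted_price` function are all hypothetical, and the 20% markup simply mirrors the number the Blacksmith quotes in the video.

```python
from dataclasses import dataclass, field


@dataclass
class NpcMemory:
    """Hypothetical per-NPC memory that would be saved between play sessions."""
    events: list[str] = field(default_factory=list)

    def remember(self, event: str) -> None:
        self.events.append(event)

    def recalls(self, event: str) -> bool:
        return event in self.events


def quoted_price(base_price: int, memory: NpcMemory, markup_percent: int = 20) -> int:
    """Return the price the NPC quotes, marked up if it remembers an insult."""
    if memory.recalls("player_was_rude"):
        # Integer arithmetic keeps gold amounts exact.
        return base_price * (100 + markup_percent) // 100
    return base_price


memory = NpcMemory()
print(quoted_price(100, memory))    # Day 1, before the insult: 100
memory.remember("player_was_rude")  # assumed to be written by the dialogue system
print(quoted_price(100, memory))    # Day 2: 120
```

The point of the sketch is that the price is derived from stored memory rather than from a branch in a quest script, which is what would let the consequence survive across sessions.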

3. Under the Hood: How ACE 2.0 Works

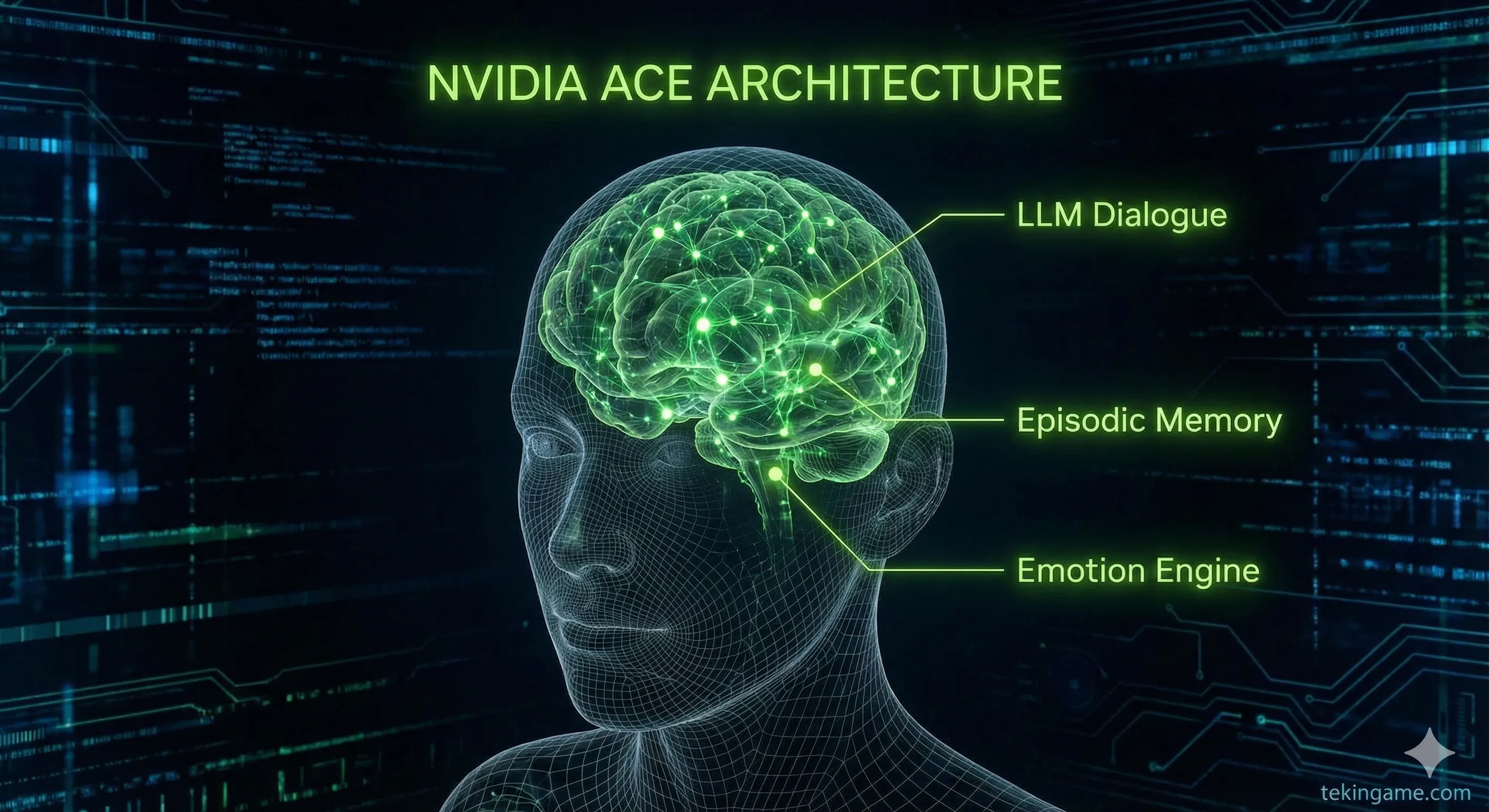

Based on the whitepapers NVIDIA released earlier this year and this new footage, we can deconstruct the architecture powering this experience. It is not magic; it is a complex stack of neural networks working in concert.

3.1. Beyond LLMs: NeMo SteerLM

Many modders have tried connecting ChatGPT to Skyrim, resulting in NPCs that sound like helpful customer service bots. They are too polite, too verbose, and break immersion.

NVIDIA's answer is NeMo SteerLM, which conditions the model's responses on attribute values chosen at inference time. In practice, this allows developers to define "Personality Sliders."

For the blacksmith, the developers likely set:

- Agreeableness: 20% (Low)

- Toxicity Tolerance: 10% (Low)

- Vocabulary: Archaic/Medieval
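As a rough illustration, slider values like these could be stored as a small validated config and flattened into a string that is attached to each request. The `Persona` class, its field names, and the `name:value` string layout below are assumptions made for this sketch; they are not the actual SteerLM attribute format.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Persona:
    """Hypothetical personality config; slider values range from 0.0 to 1.0."""
    agreeableness: float
    toxicity_tolerance: float
    vocabulary: str

    def __post_init__(self) -> None:
        # Reject slider values outside the allowed range.
        for name in ("agreeableness", "toxicity_tolerance"):
            value = getattr(self, name)
            if not 0.0 <= value <= 1.0:
                raise ValueError(f"{name} must be between 0.0 and 1.0, got {value}")

    def to_conditioning_string(self) -> str:
        """Flatten the sliders into an illustrative attribute string."""
        return (
            f"agreeableness:{self.agreeableness:.2f},"
            f"toxicity_tolerance:{self.toxicity_tolerance:.2f},"
            f"vocabulary:{self.vocabulary}"
        )


blacksmith = Persona(agreeableness=0.20, toxicity_tolerance=0.10,
                     vocabulary="archaic_medieval")
print(blacksmith.to_conditioning_string())
# agreeableness:0.20,toxicity_tolerance:0.10,vocabulary:archaic_medieval
```

Keeping the persona as data rather than prose would let a designer retune a character without rewriting any dialogue.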

3.2. RAG: The NPC's Brain

The secret sauce is RAG (Retrieval-Augmented Generation).

When you speak to the NPC, the model doesn't just rely on its training data. It queries a local vector database that contains:

- World Lore: Facts about the kingdom, the war, the monsters (so it doesn't hallucinate).

- Player History: A log of your previous interactions (e.g., "Player insulted me at 14:00 yesterday").
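The retrieval step can be sketched in a few lines. This is a toy stand-in, not the ACE pipeline: a real system would use a learned embedding model and a vector database, whereas this sketch uses plain word counts and cosine similarity. The sample lore entries and the function names `embed`, `retrieve`, and `build_prompt` are invented for illustration.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Toy 'embedding': lowercase word counts (stand-in for a learned model)."""
    return Counter(re.findall(r"[a-z']+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two word-count vectors."""
    dot = sum(a[w] * b[w] for w in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, documents: list[str], top_k: int = 2) -> list[str]:
    """Return the top_k stored entries most similar to the query."""
    q = embed(query)
    ranked = sorted(documents, key=lambda d: cosine(q, embed(d)), reverse=True)
    return ranked[:top_k]


def build_prompt(player_line: str, documents: list[str]) -> str:
    """Prepend the retrieved entries to the player's line before generation."""
    context = "\n".join(f"- {d}" for d in retrieve(player_line, documents))
    return f"Known facts:\n{context}\n\nPlayer says: {player_line}\nBlacksmith replies:"


# Made-up sample entries covering both categories listed above.
store = [
    "World lore: the northern kingdom is at war with the mountain clans.",
    "World lore: wyverns nest in the cliffs above the forge.",
    "Player history: the player insulted the blacksmith yesterday at 14:00.",
]

print(build_prompt("Look, I'm sorry about yesterday.", store))
```

With these sample entries, the apology retrieves the "Player history" line first because both mention "yesterday", so the model would see the insult before it writes the Blacksmith's reply.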

3.3. Audio2Face: Solving the Uncanny Valley

The visual aspect of the leak is equally impressive. In the footage, the lip-syncing looks flawless.

This is powered by Audio2Face, a neural network that takes the audio waveform generated by the TTS (Text-to-Speech) engine and animates the 3D mesh of the face in real-time. It calculates how the lips, jaw, cheeks, and eyes should move to pronounce those specific phonemes with that specific emotion. This eliminates the need for manual facial motion capture for the thousands of lines of dialogue the AI might generate.

4. Gameplay Implications: The Death of the Walkthrough

4.1. Emergent Storytelling

If this technology is integrated into upcoming titles like The Witcher 4 (Project Polaris) or GTA VI, it marks the end of linear guides.

There will be no "Correct Dialogue Option" to unlock a quest. You will have to actually persuade the character.

If you need information from a corrupt cop in GTA, you might have to build a rapport, offer a bribe, or threaten them—and their reaction will depend on their dynamic personality traits, not a pre-written script. Two players could play the same mission and get completely different outcomes based on how they spoke to the NPC.

4.2. The Deception Mechanic

One of the most exciting (and frustrating) possibilities is AI Deception.

If an NPC has a "Dishonest" trait and a goal to protect their faction, they might lie to you. They might give you wrong directions or false lore. Unlike scripted games where NPCs are usually reliable sources of truth, ACE 2.0 introduces the concept of the Unreliable Narrator into gameplay mechanics. You will have to judge character, not just read text.

5. The Technical Barrier: Local vs. Cloud

While the software is revolutionary, the hardware requirements bring us back to the reality of 2025.

5.1. The Latency Problem

In early versions of this tech (ACE 1.0), there was a 2-3 second delay between the player speaking and the NPC replying. This killed immersion.

The leaked demo shows near-instant responses. This suggests one of two things:

- NVIDIA has optimized the pipeline for massive cloud servers (which requires an always-online connection).

- Or, more likely, they are running Small Language Models (SLMs) locally on the GPU.

5.2. The Hardware Cost (The RTX 50 Requirement)

This connects directly to our mid-day report about NVIDIA's upcoming CES lineup.

To run ACE 2.0 locally—processing the speech, generating the text, animating the face—you need significant AI compute power (Tensor Cores) and VRAM.

It is highly probable that these features will be marketed as exclusive to the RTX 50-series (Blackwell) cards, much like DLSS 3 Frame Generation was for the 40-series. This technology creates a compelling reason for gamers to upgrade their hardware, justifying the rumored price hikes. It transforms the GPU from a graphics processor into a "Game Master" processor.

6. Ethical and Design Challenges

6.1. Guardrails and "Sydney" Moments

The danger of generative AI is unpredictability. Developers need strict guardrails.

What if the Blacksmith starts discussing politics? What if he falls in love with the player and breaks the game's narrative?

The demo shows the NPC staying firmly in character ("Get out of my forge"), suggesting NVIDIA has improved the "NeMo Guardrails" system to prevent the AI from breaking the fourth wall or generating offensive content.
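At its simplest, a guardrail is a check that sits between the model's output and the player. The sketch below is a deliberately naive keyword filter and is not the NeMo Guardrails API; the blocked-topic list, the fallback line, and the `apply_guardrail` function are all hypothetical.

```python
# Hypothetical list of topics the NPC must never discuss.
BLOCKED_TOPICS = {"politics", "election", "president"}

# In-character line used whenever a reply is rejected (invented for this sketch).
FALLBACK_LINE = "I shape steel, not gossip. Buy something or move along."


def apply_guardrail(candidate_reply: str) -> str:
    """Return the model's reply unless it mentions a blocked topic."""
    words = {w.strip(".,!?\"'").lower() for w in candidate_reply.split()}
    if words & BLOCKED_TOPICS:
        return FALLBACK_LINE
    return candidate_reply


print(apply_guardrail("Get out of my forge before I smelt you down for scrap."))
print(apply_guardrail("Let me tell you what I think about the election!"))
```

A production system would need far more than keyword matching, but the structure is the same: the reply is vetted first, and a safe in-character fallback replaces anything that fails.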

6.2. Player Abuse

Conversely, how do developers handle players who are abusive to AI?

If NPCs can "feel" and react to abuse, does the game become a torture simulator? The demo shows the Blacksmith fighting back (refusing service), which is a smart design choice. It penalizes toxic behavior with gameplay consequences, teaching the player that words have weight.

7. Tekin Plus Verdict

If the footage is genuine, the NVIDIA ACE 2.0 leak is the most significant development in game design since the invention of the 3D open world.

We are moving from an era of "Static Assets" (pre-recorded lines) to "Dynamic Agents" (thinking characters).

While we wait for the official reveal, likely at CES in January, one thing is clear: The days of skipping through dialogue trees are numbered. In the future of gaming, you had better watch your mouth, because the NPCs are listening, and they will remember.

What do you think? Are you ready to roleplay with your voice, or do you prefer the safety of silent text options?