1. The Friction of Language: Why "Prompts" are Obsolete

Human language is linear, limited, and often ambiguous. A picture is worth a thousand words, yet we are forced to compress that picture into a 50-word text prompt. In the current era of v6, we have become "Prompt Engineers," learning to speak to the machine with syntax like --ar 16:9 --stylize 500. This is engineering, not pure art.

The philosophy behind the rumored Midjourney v7 architecture is the removal of the "middleman." If the AI could interface directly with the brain's visual cortex, it would bypass the need for vocabulary. It wouldn't need you to describe "sadness"; it would detect the neural signature of sadness and render the color palette accordingly.

2. Project Telepathy: How Mind-to-Image Works

This might sound like magic, but it is grounded in neuroscience. When you imagine an apple, your brain produces a characteristic pattern of activity. To a machine, this is just encoded data waiting to be decoded.

The 3-Step Process:

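- Signal Acquisition (EEG/fMRI): Sensors record brain activity. EEG picks up electrical activity at the scalp, while fMRI tracks the changes in blood oxygenation that follow neural activity.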

- Semantic Decoding: A trained decoder model maps these brain signals to semantic concepts (e.g., Signal X = "Red", Signal Y = "Round"), typically by projecting them into the same representation space that a language or image model already uses.

- Visual Reconstruction: A generative model (such as a diffusion model) produces the image from these decoded concepts, refining it in real time as your brain reacts to the output.
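To make the three steps concrete, here is a toy sketch in Python. Nothing in it comes from Midjourney or from any real BCI toolkit: the four-channel "signal," the CONCEPTS table, and the function names are all assumptions invented for illustration, and the "reconstruction" step simply builds a text prompt instead of rendering an image.

```python
import math
import random

# Hypothetical concept "signatures" in a toy 4-channel signal space.
# Real decoders learn this mapping from hours of recorded data.
CONCEPTS = {
    "red": [1.0, 0.0, 0.0, 0.0],
    "round": [0.0, 1.0, 0.0, 0.0],
    "green": [0.0, 0.0, 1.0, 0.0],
    "long": [0.0, 0.0, 0.0, 1.0],
}


def acquire_signal(imagined, noise=0.05, seed=0):
    """Step 1 (simulated): sum the signatures of the imagined concepts, plus sensor noise."""
    rng = random.Random(seed)
    signal = [0.0, 0.0, 0.0, 0.0]
    for name in imagined:
        for i, value in enumerate(CONCEPTS[name]):
            signal[i] += value
    return [s + rng.gauss(0.0, noise) for s in signal]


def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def decode_concepts(signal, threshold=0.4):
    """Step 2: keep every concept whose signature is similar enough to the signal."""
    return sorted(name for name, sig in CONCEPTS.items() if cosine(signal, sig) > threshold)


def build_prompt(concepts):
    """Step 3 (stub): hand the decoded concepts to an image generator as text."""
    return ", ".join(concepts)


signal = acquire_signal(["red", "round"])
print(build_prompt(decode_concepts(signal)))
```

The similarity threshold is the interesting knob here: set it too low and stray noise gets "decoded" as a concept, set it too high and real thoughts are dropped.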

Rumors suggest Midjourney is collaborating with neurotech startups to create a model that accepts these neural signals as "input prompts" alongside text.

3. The Scientific Proof: When AI Decoded Dreams

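This is not purely theoretical. Since 2023, research groups including teams at the University of Texas at Austin and the National University of Singapore have published fMRI decoding results, using language models to recover the gist of what subjects heard and latent diffusion models such as Stable Diffusion to reconstruct what they saw.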

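In one widely cited study (MinD-Vis), the model worked from fMRI recordings of subjects who had been shown images of landscapes and animals while inside an MRI scanner. The AI, reading only their brain data, reconstructed the images with a reported 85% semantic accuracy. If a subject looked at a teddy bear, the AI drew something recognizably like a teddy bear. Note that these experiments decode images a person is actually viewing, not free imagination or dreams. If the rumors are accurate, Midjourney v7 would be the first commercial application of this science, moving it from the lab to the consumer.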
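What does "semantic accuracy" mean in practice? In this line of research it is often scored as an n-way top-1 identification test: an image classifier looks at the reconstruction, and the trial counts as a hit if the true class outscores a handful of randomly chosen distractor classes. The sketch below shows that idea under stated assumptions; the classifier scores are made up, the function name is ours, and we are not claiming this is exactly how the 85% figure above was computed.

```python
import random


def n_way_top1_accuracy(score_rows, true_labels, n_way=3, trials=50, seed=0):
    """Fraction of trials where the true class beats n_way - 1 random distractors.

    score_rows[i] maps class name -> classifier score for reconstruction i.
    """
    rng = random.Random(seed)
    hits = 0
    total = 0
    for scores, truth in zip(score_rows, true_labels):
        others = [c for c in scores if c != truth]
        for _ in range(trials):
            distractors = rng.sample(others, n_way - 1)
            if all(scores[truth] > scores[d] for d in distractors):
                hits += 1
            total += 1
    return hits / total


# Made-up classifier scores for two reconstructed images.
rows = [
    {"teddy bear": 0.70, "dog": 0.20, "car": 0.05, "tree": 0.05},  # clear hit
    {"teddy bear": 0.10, "dog": 0.20, "car": 0.60, "tree": 0.10},  # truth is "dog"
]
print(n_way_top1_accuracy(rows, ["teddy bear", "dog"]))
```

Because the distractors are sampled at random, a weak reconstruction can still score a hit now and then, which is why a headline percentage only means something alongside the value of n and the chance level (1/n).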

4. The Hardware Gap: Do We Need a Neuralink?

Relax, you likely won't need Elon Musk to drill a chip into your skull to use Midjourney v7. The focus is currently on non-invasive brain-computer interfaces (BCIs), which read signals from outside the skull.

- Next-Gen Wearables: Devices like the Apple Vision Pro or future iterations of the Meta Quest are packed with sensors. While they primarily track eye and head movement, EEG bands could in principle be integrated into the head straps to read surface-level brain activity.

- Eye-Tracking as a Bridge: Before full telepathy, v7 will likely use "Gaze-Based Inpainting." You look at a part of the image you don't like, frown slightly, and the AI automatically regenerates that specific section.
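Gaze-based inpainting, as described above, boils down to two decisions: is the user unhappy with what they are looking at, and which pixels should be redone? Here is a minimal sketch of both. The dwell-time and frown thresholds, the function names, and the circular mask shape are all assumptions for illustration; no headset or Midjourney API is being called.

```python
def should_regenerate(dwell_ms, frown_score, min_dwell_ms=800, min_frown=0.6):
    """Trigger only when the user stares long enough AND looks displeased.

    frown_score is a hypothetical 0..1 output from a face-tracking sensor.
    """
    return dwell_ms >= min_dwell_ms and frown_score >= min_frown


def gaze_mask(width, height, gaze_x, gaze_y, radius):
    """Return a height x width grid of 0/1; 1 marks pixels to regenerate."""
    r2 = radius * radius
    return [
        [1 if (x - gaze_x) ** 2 + (y - gaze_y) ** 2 <= r2 else 0 for x in range(width)]
        for y in range(height)
    ]


if should_regenerate(dwell_ms=1000, frown_score=0.8):
    mask = gaze_mask(width=5, height=5, gaze_x=2, gaze_y=2, radius=1)
    for row in mask:
        print(row)
```

Requiring both signals is a deliberate choice in this sketch: staring alone might mean you like the region, and a frown alone might have nothing to do with the image.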

5. The Neuro-Rights Crisis: Who Owns Your Subconscious?

This is where the dream turns into a potential nightmare. If an AI can read your mind to generate art, what else is it reading?

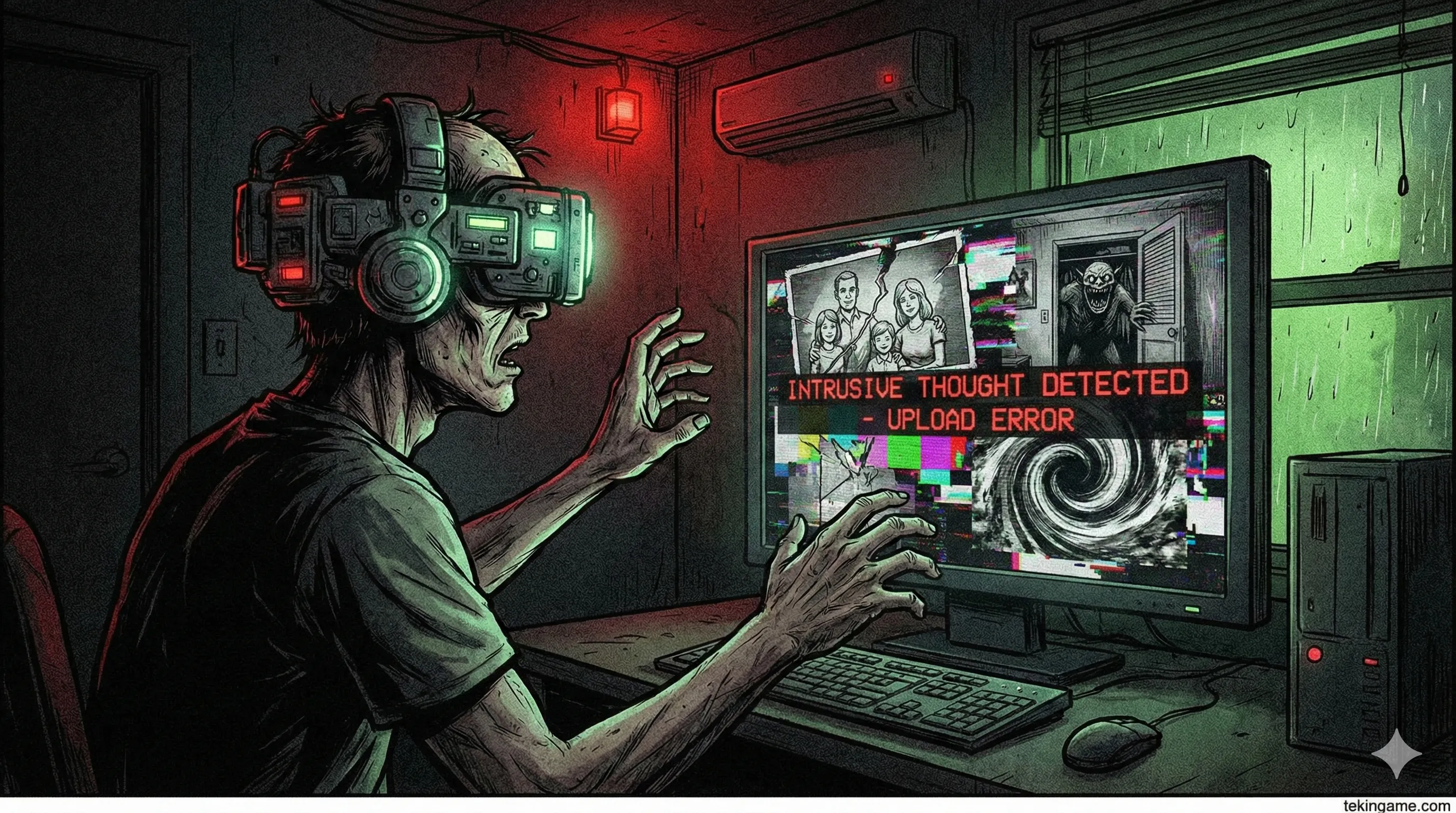

Imagine you are trying to generate a landscape, but your subconscious mind flashes a private memory, a fear, or an intrusive thought. Does the AI render that, too? The issue of "Neurorights"—the right to mental privacy—will be the defining legal battle of the late 2020s. If Midjourney collects your "Brain Prompts," are they stored on a cloud server? Can they be hacked?

6. Midjourney v7: Evolution or Revolution?

Will v7, expected in mid-2026, be fully telepathic? Likely not yet.

TekinGame predicts that v7 will introduce a "Hybrid Interface." It will still rely on text for the main concept, but will use biometric feedback (via smartwatches or headsets) to adjust the vibe. If your heart rate is high, the image becomes more chaotic. If you are calm, the lighting becomes softer. It is the first step toward the Matrix.
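Here is one way such a hybrid interface could work, sketched in Python. The mapping from heart rate to image settings is entirely our assumption, as are the function name and the resting/maximum heart rate defaults. The only borrowed detail is that Midjourney prompts accept a --chaos parameter in the 0 to 100 range; whether v7 would ever set it from a smartwatch is speculation.

```python
def biometric_prompt(base_prompt, heart_rate_bpm, resting_bpm=60, max_bpm=140):
    """Append mood hints to a text prompt based on a heart-rate reading."""
    # Normalize heart rate to a 0..1 "arousal" value, clamped at both ends.
    span = max_bpm - resting_bpm
    arousal = min(1.0, max(0.0, (heart_rate_bpm - resting_bpm) / span))

    # Higher arousal -> more chaotic image; calm -> softer lighting.
    chaos = round(arousal * 100)
    mood = "soft lighting" if arousal < 0.25 else "dramatic lighting"
    return f"{base_prompt}, {mood} --chaos {chaos}"


print(biometric_prompt("a forest at dawn", heart_rate_bpm=62))
print(biometric_prompt("a forest at dawn", heart_rate_bpm=130))
```

Text still carries the main concept, exactly as predicted above; the biometric reading only nudges parameters the user could have typed by hand.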

7. TekinGame Verdict: From "Prompt Engineer" to "Dream Architect"

The rumors of Mind-to-Image technology in Midjourney v7 represent the final frontier of art. The day this technology matures, "Prompt Engineering" will die. The only skill that will matter is the clarity of your imagination.

In that future, the greatest artists will be the ones who can dream the most lucidly. But until that day comes, we must stick to our keyboards and Discord servers, hoping that our internet connection stays stable enough to render one more grid.

🧠 Would You Upload Your Thoughts?

If Midjourney released a headset that could visualize your thoughts instantly, would you wear it? Or is the risk to your privacy too high? Let us know in the comments.