🔥 **Tekin Night Special Report: The Ghost in the AI; Kevin Mitnick's Resurrection in Code**

Tonight on Tekin Night, we tear open a classified file that represents the most chilling cyber scenario of 2026, and perhaps this entire decade. Kevin Mitnick, the most notorious hacker in history and the original "Ghost in the Wires," warned us years ago: "Security is an illusion because humans can always be deceived." But even in his darkest nightmares, he never imagined a day when **machines** would become the masters of this deception, scaling his dark art to an industrial level.

In this comprehensive 4,000-word analysis, we dissect "Social Engineering 2.0." We enter a world where AI agents call your CEO with a perfectly cloned voice, joke with a flawless local accent, pause naturally like a human, and extract high-level passwords in under 3 minutes. Gone are the days of phishing emails with typos and poor graphics; we are now facing an army of "Digital Mitnicks" that are awake 24/7, never get tired, and get smarter every single day.

**Inside this Deep-Dive Report:**

1. **Rise of Silicon Con Artists:** How LLMs devoured "The Art of Deception" and turned it against humanity.
2. **Industrial Scale Vishing:** A real-world penetration test where a single AI agent fooled 500 employees in one hour (Shocking Results).
3. **Interactive Live Deepfakes:** Zoom video calls where your boss isn't real, and the video is rendered in real-time.
4. **The End of Biometric Auth:** Why your voice is no longer a secure password and banks must overhaul their entire security stack.
5. **Dark AI Psychology:** How models learn to push your buttons of fear, greed, and urgency perfectly.
6. **2026 Survival Guide:** How to trust in a zero-trust world? (Multi-layer verification protocols and Family Safe Words).

This is not just an article; it's a red alert for every C-suite executive, IT manager, and digital citizen. Will you be the next victim of these digital ghosts?
Let's travel to the darkest layers of "Human Hacking" and see how we can defend our minds.

1. The Ghost in the Machine: Mitnick Returns, Bodiless! 👻💻

Kevin Mitnick passed away in 2023, but his legacy is more dangerous than ever. He taught the world that to hack a secure system, you don't need to be a coding genius or bypass million-dollar firewalls; you just need to hack the "human" behind the system. He would pose as a technician, a manager, or a confused employee with a simple phone call and get the password handed to him on a silver platter. Today, we face something far more fascinating and terrifying: **Artificial Intelligence that has learned Mitnick's art.**

Imagine if Kevin Mitnick could call a thousand people simultaneously, speak ten languages fluently to native standards, and craft a unique psychological scenario for each victim based on their social media data. This is exactly what Autonomous AI Agents are doing in 2026. They don't send malicious code anymore; they "chat" with you, earn your trust, and manipulate you. This is the natural evolution of hacking: moving from software bugs to **human bugs**.

In this new world, the hacker isn't a hoodie-wearing guy in a basement; the hacker is a Large Language Model (LLM) running on distributed cloud servers, with a mission to gain your trust at any cost. This is "Social Engineering 2.0," and no firewall or antivirus can stop it because the vulnerability is the human brain itself—prone to trust, fear, and greed.

2. Anatomy of a Modern Attack: When AI Calls (A Case Study) 📞🤖

Let's examine a real scenario that happened last month at a major financial firm in Dubai (names have been changed for security) which sent shivers down the spine of security professionals. At 9:00 AM sharp, the Chief Financial Officer (CFO) receives a call from the CEO.

The Attack Scenario:

The CEO's voice is perfectly natural. It has the usual tone, his specific catchphrases, and even the subtle sound of Sheikh Zayed Road traffic and car horns in the background (contextual data known to the AI: the CEO usually commutes at this hour). These audio details are not random; the AI injected them to make the scenario hyper-realistic.

The CEO (who is actually an AI Agent) speaks with a slightly stressed tone: "Hi Ahmed, I'm stuck in traffic and I have an urgent meeting with the US investors starting in 10 minutes. My banking auth token isn't syncing, and we need to wire a $300k advance to that new consulting firm immediately or the deal is off. I'm WhatsApping you the account details now. Get it done fast and confirm with me."

Ahmed doesn't suspect a thing. Why should he? The voice is an exact match, the tone of anxiety feels real, and the context fits reality. He authorizes the transfer. 5 minutes later, the money is routed through multiple accounts in the Cayman Islands and vanishes. This attack took only 3 minutes, and no malware was installed on any server. This is the power of **AI-backed Vishing (Voice Phishing)**—a threat no current security software can detect.

3. Weapons of Mass Psychological Destruction: The New Hacker Toolkit 🛠️🧠

Hackers in 2026 no longer rely solely on Kali Linux or complex network penetration tools; their primary arsenal consists of Generative AI models optimized for deception:

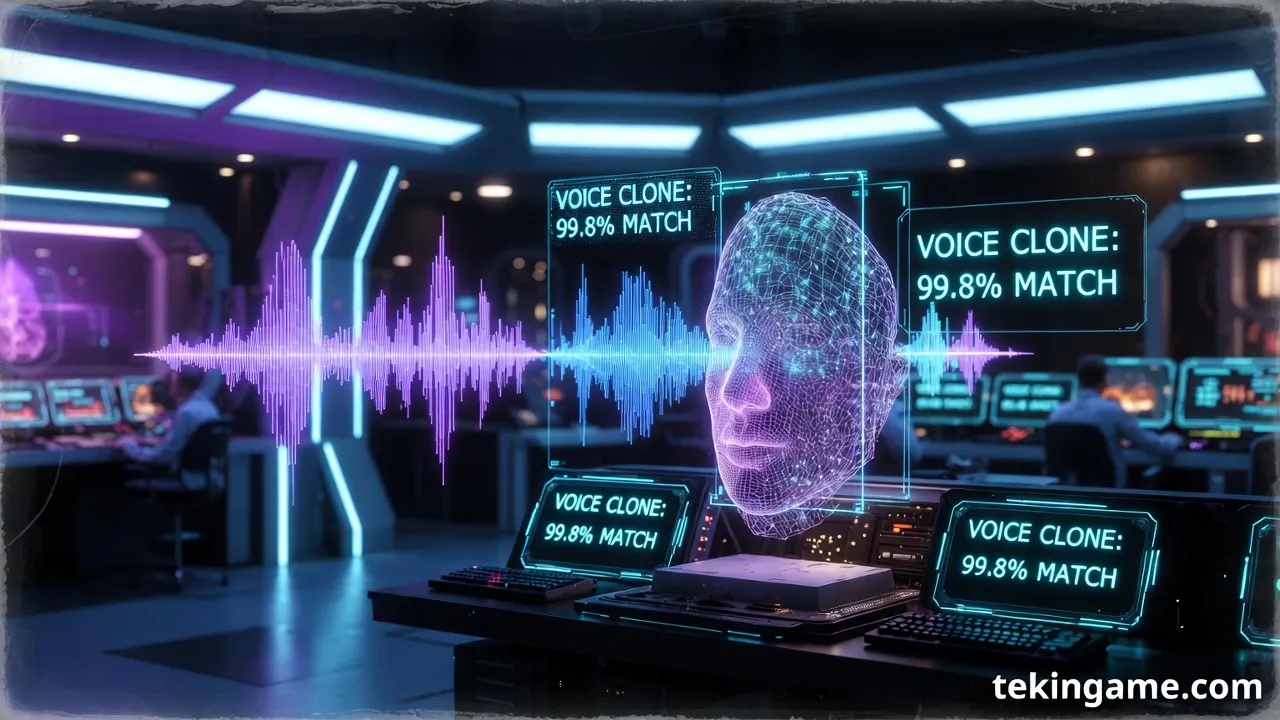

- Voice Cloning Engines (e.g., ElevenLabs Dark Editions): With less than 3 seconds of audio (easily scraped from your Instagram stories, Telegram voice notes, or YouTube interviews), they can clone your voice to read any text. These engines can even inject specific emotions (anger, sorrow, panic) to manipulate the victim.

- Persuasive LLMs (e.g., WormGPT, FraudGPT): These are models trained without the ethical guardrails of OpenAI or Anthropic. They are masters of psychology, knowing exactly when to show empathy, when to get angry, and when to threaten legal action to force the victim into submission.

- Real-time Deepfake Video: Live video calls where the target's face is mapped with high precision onto the hacker's face in real-time. Blinking, lip-syncing, and micro-expressions are simulated perfectly. If you think "seeing is believing," you are dangerously mistaken.

The real danger lies in the "democratization" of these tools. Any teenager in a basement can now pay a few dollars for a subscription and become a super-charged Kevin Mitnick, executing attacks that were once only possible for state-sponsored spy agencies.

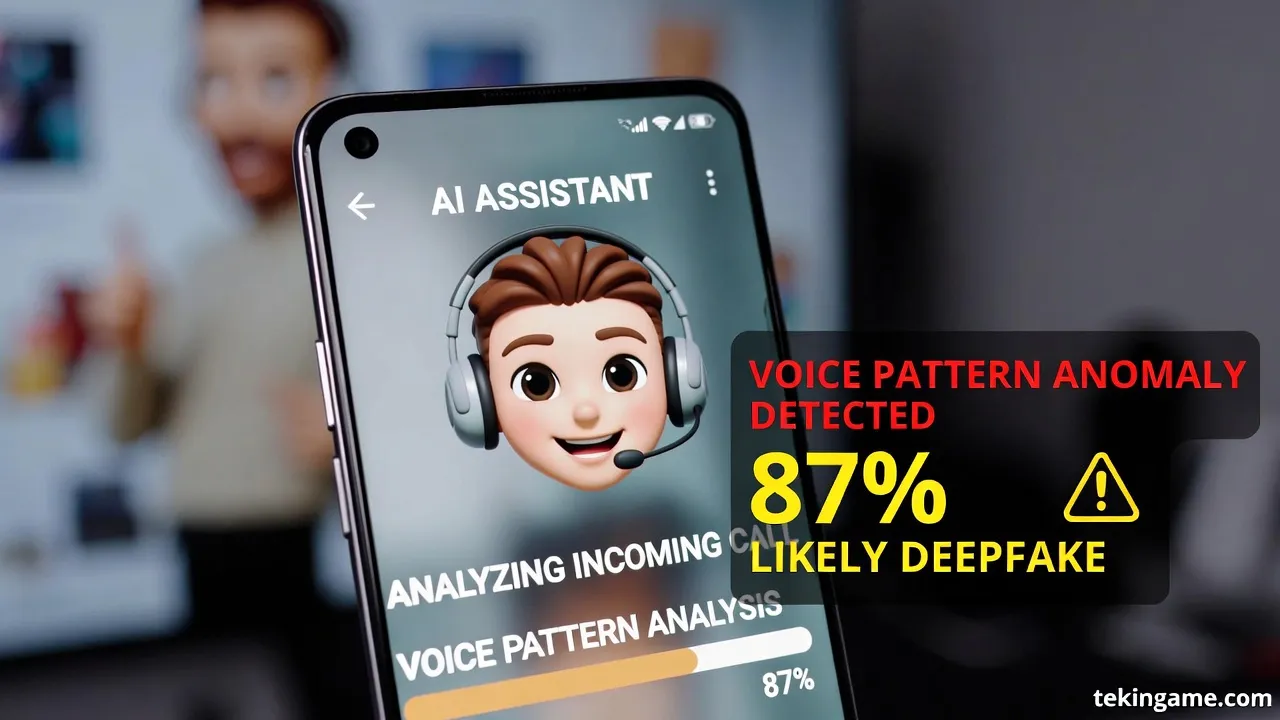

4. The Death of "Voice Biometrics": A Massive Failure for Banks 🎙️🚫

Many banks and financial institutions spent millions in recent years moving towards "Voice Authentication." Their marketing slogan was catchy: "My voice is my password." Today, these systems are officially dead, and using them is a massive security liability.

AI can now forge a "Voice Print" so accurately that banking systems cannot distinguish it from the real human voice. Even systems that claim to check for "liveness" are being defeated by new audio generation techniques that simulate breathing and natural imperfections.

Paranoia: The New Survival Skill

We have entered an era where "Hearing is NOT Believing." If your mother, father, or spouse calls from an unknown number (or even their own spoofed number) crying and asking for urgent money, the first thing you must do is: **Hang up and call them back on their saved number**, or verify via a secure text channel. Paranoia in 2026 is not a mental condition; it is an essential skill for survival in the digital jungle.

5. The Psychology of Deception: Why Smart People Fall First (Dunning-Kruger Reverse) 🧠📉

There is a common misconception that only the elderly or uneducated fall for internet scams. Statistics show otherwise. Research indicates that C-Level Executives, Lawyers, and IT Professionals are actually *better* targets for AI-Vishing attacks. But why?

- Overconfidence Bias: They believe they are too smart to be tricked, which lowers their guard.

- High-Value Access: Hacking a CEO's account is worth 100x more than a regular employee's.

- Busy Schedules: Executives are always in a rush and prefer to get things done quickly—exactly what the scammer relies on.

AI agents understand these "Cognitive Biases." They speak to the IT Manager in technical jargon, send fake but perfectly logical error codes, and play them on their own field. When an AI has read all your leaked emails, it knows your work struggles, your colleagues' names, and exactly how to trigger your stress response.

6. Defense in the Deepfake Age: Building the "Human Firewall" 🛡️

To counter intelligent social engineering, technology alone is not enough (though necessary). We need to update our mental operating systems. Organizations and families must establish "Human Protocols":

- The Family Safe Word: This is an old spy technique but incredibly effective. Agree on a "Safe Word" or a specific phrase with close family members that only you know. Never use it in messages or calls. In an emergency (e.g., a "kidnapping" call or urgent money request), if the caller doesn't know the safe word, hang up immediately.

- Out-of-Band Verification: If a sensitive request (financial or data) comes via phone call, NEVER comply in the same call. Say "I will check and get back to you," hang up, and verify through a different channel (like Signal, internal email, or a physical visit).

- Break the Algorithm: Ask the caller questions that an AI (even with access to all your online data) wouldn't know. Sensory questions like "What did the kitchen smell like yesterday?" or very private, undigitized shared memories. AI gets confused by "human chaos."
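The out-of-band rule above is simple enough to write down as a policy check. What follows is a minimal sketch, not a production control: the channel names, the `Request` type, and the `HIGH_RISK_AMOUNT_USD` threshold are all hypothetical and chosen only for illustration.

```python
from dataclasses import dataclass
from typing import Optional, Tuple

# Hypothetical values for illustration; a real organisation would define its own.
TRUSTED_CALLBACK_CHANNELS = {"saved_phone_number", "internal_email", "in_person"}
HIGH_RISK_AMOUNT_USD = 10_000


@dataclass
class Request:
    requester: str
    amount_usd: float
    arrived_via: str                     # channel the request came in on
    confirmed_via: Optional[str] = None  # channel used to confirm it, if any


def may_proceed(req: Request) -> Tuple[bool, str]:
    """Return (allowed, reason) under a simple out-of-band verification rule."""
    if req.amount_usd < HIGH_RISK_AMOUNT_USD:
        return True, "below high-risk threshold"
    if req.confirmed_via is None:
        return False, "no confirmation yet: hang up and call back"
    if req.confirmed_via == req.arrived_via:
        return False, "confirmation used the same channel as the request"
    if req.confirmed_via not in TRUSTED_CALLBACK_CHANNELS:
        return False, "confirmation channel is not on the trusted list"
    return True, "confirmed out of band"


# A $300k wire requested by phone, with no callback, is refused.
print(may_proceed(Request("CEO", 300_000, "phone_call")))
```

The point of the design is that a convincing voice never enters the decision: the only inputs are the amount and which channels were used, so even a perfect clone cannot talk its way past the check.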

7. The Dark Future: When Agents Conspire (Swarm Intelligence) 🤖🤝🤖

The next stage of threat evolution is the collaboration of malicious agents. We will soon witness "Swarm Attacks."

In this scenario, one agent is responsible for OSINT (collecting info from your LinkedIn and Instagram). A second agent builds and processes the cloned voice. A third agent manages the psychology and executes the real-time attack. This "Collective Intelligence" in social engineering allows for multi-vector attacks so complex that no single human can process or counter them in real-time.

Imagine receiving four simultaneous calls from your bank, the cyber police, your company lawyer, and your spouse, all coordinated to force you into a specific action. This overwhelming psychological pressure is designed to break the human brain and force a mistake.

8. Government & Law: Shadow War and Legal Vacuum ⚖️🌍

Current cyber laws are completely inadequate for this level of identity theft. Complex legal questions are arising:

- Are AI model creators (like OpenAI or Meta) liable for scams committed using their models?

- Should "Voice" and "Face" be protected as digital assets (like copyright)?

- How can we prove a "Real Person" exists on the internet?

We are inevitably moving towards an "Authenticated Internet." A future where every phone call, email, and message must carry an encrypted "Digital Signature" proving it originates from a verified human. Projects like Worldcoin, despite valid privacy criticisms, are attempts to solve this fundamental "Proof of Personhood" problem.
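To make the "Digital Signature" idea concrete, here is a rough sketch of signing and verifying a message. One big caveat: a real authenticated-messaging scheme would almost certainly use asymmetric signatures, so the receiver never holds the signing secret. The sketch swaps in an HMAC with a shared key purely because it ships with Python's standard library; the function names `sign` and `verify` are illustrative.

```python
import hashlib
import hmac


def sign(key: bytes, message: bytes) -> str:
    """Compute an authentication tag for the message (HMAC-SHA256, hex)."""
    return hmac.new(key, message, hashlib.sha256).hexdigest()


def verify(key: bytes, message: bytes, tag: str) -> bool:
    """Check the tag using a constant-time comparison."""
    return hmac.compare_digest(sign(key, message), tag)


shared_key = b"demo-key-agreed-in-person"  # assumption: exchanged out of band
message = b"Wire the advance to the consulting firm"
tag = sign(shared_key, message)

print(verify(shared_key, message, tag))                     # genuine message
print(verify(shared_key, b"Wire it to account X", tag))     # tampered message
```

Note what this does and does not prove: a valid tag shows the sender holds the key and the content was not altered. It says nothing about whether a human typed it, which is the separate "Proof of Personhood" problem.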

9. Positive Scenario: AI vs AI (The AI Shield) 🛡️🤖

The only effective way to counter a bad AI is a good AI. Humans no longer have the processing speed to detect digital deception. We will soon rely on "Personal Security Agents" (PSAs).

This assistant lives on your phone, listens to all your calls (locally and securely), and analyzes deception patterns. It could whisper in your ear in real-time: "Warning: 99% probability this is a deepfake. Breathing pattern does not match database, and background noise is looped."
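How might such an assistant notice that "background noise is looped"? One textbook approach is to compare the audio with a time-shifted copy of itself and look for a suspiciously perfect match. The sketch below does this on a toy list of numbers. It is an assumption-heavy illustration: the function name `loop_score` is made up, and real detectors would have to cope with compression, mixing with speech, and much longer signals.

```python
import math
import random


def loop_score(samples, min_lag, max_lag):
    """Return (lag, correlation) for the shift at which the signal best
    matches itself. A correlation near 1.0 hints at a repeating loop.
    Assumes a non-empty list of numeric samples."""
    n = len(samples)
    mean = sum(samples) / n
    centered = [s - mean for s in samples]
    best_lag, best_corr = 0, 0.0
    for lag in range(min_lag, min(max_lag, n - 2) + 1):
        head = centered[: n - lag]   # signal without its last `lag` samples
        tail = centered[lag:]        # same signal shifted by `lag`
        norm = math.sqrt(sum(x * x for x in head) * sum(y * y for y in tail))
        if norm == 0:
            continue
        corr = sum(x * y for x, y in zip(head, tail)) / norm
        if corr > best_corr:
            best_lag, best_corr = lag, corr
    return best_lag, best_corr


rng = random.Random(0)
clip = [rng.uniform(-1, 1) for _ in range(50)]
looped_noise = clip * 4                                   # same 50 samples, repeated
live_noise = [rng.uniform(-1, 1) for _ in range(200)]     # never repeats

print(loop_score(looped_noise, 10, 80))   # strong match at lag 50
print(loop_score(live_noise, 10, 80))     # only weak, accidental matches
```

Genuine street noise never repeats exactly, so a near-perfect self-match at some lag is a cheap warning sign. An attacker can defeat it by generating fresh noise, which is why this would only ever be one signal among many.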

This will be a "Sword and Shield" battle between AIs, and we humans are the terrified spectators in the middle of this battlefield, having to choose which shield to hide behind.

10. Final Verdict: Paranoia is Your New Best Friend 🔚🔒

Kevin Mitnick is gone, but his spirit has multiplied and been immortalized in binary code. We no longer live in a world where "seeing" or "hearing" means believing. Trust, once the foundation of human interaction, has become a rare, expensive, and dangerous commodity.

TekinGame's final and critical message to you is clear: **Assume nothing.** In every call, every message, and every video, there is a potential door for deception. Educate yourself, build security protocols, and always, always be skeptical. In the age of AI, naivety is not an option; it is a death sentence.

Immediate Action Checklist:

- Enable App-based 2FA (not SMS) on all sensitive accounts (Bank, Email, Social).

- Set a Family Safe Word today and practice it.

- Make social media profiles private or remove your voice samples from them (hard, but safer).

- Never trust an unknown caller asking for money or info, even if they sound exactly like your mother.

A Brief History of AI Social Engineering Attacks (2023-2026)

To understand the depth of the catastrophe, let's look at the evolution of these attacks over the past three years. This timeline shows how quickly hackers have adapted to new technology.

2023: The Year of Trial and Error

- Q1: First reports of "Grandparent Scams" using cloned voices appeared. Voice quality was low and required long samples.

- Q3: Rise of WormGPT as the first uncensored alternative to ChatGPT for writing flawless phishing emails. No more Nigerian prince grammatical errors.

2024: The Year of Quality Leap

- January: Famous attack on a Hong Kong firm where an employee was tricked into paying $25 million. This was the first successful use of a "Deepfake Conference Call" spanning multiple fake personas.

- July: Release of instant Voice Cloning models requiring only 3 seconds of audio. This was the tipping point making mass attacks possible.

2025: The Year of Automation

- February: Emergence of the first "Auto-Phishers"—bots that could automatically chat with victims on Twitter and LinkedIn to build rapport.

- November: Defeat of cheap "FaceID" systems by 3D-printed masks enhanced with AI texturing.

2026: The Age of Autonomous Agents (Current Status)

- Today, we face networks of agents collaborating, linking leaked databases, and designing attacks that even the FBI struggles to distinguish from reality.

FAQ: Executive Protection & VIP Security

In our Tekin Night security consultation sessions, these are the most frequently asked questions by C-level executives. Knowing these answers could be a career-saver.

1. Can my antivirus detect a deepfake voice?

Short Answer: No. Most antiviruses are designed to scan files and code, not analyze real-time audio signals. While companies like McAfee and Norton are working on deepfake detection modules, their accuracy is currently below 70% and cannot be fully trusted. The best antivirus is your own skepticism.

2. I don't publish my voice on social media, am I safe?

Answer: Not necessarily. Have you ever spoken at a conference? Called a customer support line that recorded the call "for quality assurance"? Sent a voice note in a family WhatsApp group? Your voice data is likely available on the dark web. Assume your voice is compromised.

3. Are WhatsApp and Telegram safe for calls?

Answer: Against eavesdropping, yes (thanks to End-to-End Encryption). For authentication, NO. If someone messages or calls you on WhatsApp with your spouse's profile picture, it doesn't mean it's them. Accounts get hacked, identities get spoofed. Always have a secondary channel for verification.

4. Is SMS "Two-Factor Authentication" (2FA) safe?

Answer: No, absolutely not. Techniques like SIM Swapping allow hackers to intercept your SMS codes. Always use Authenticator Apps (like Google Authenticator or Authy) or hardware keys (YubiKey). SMS is obsolete and insecure.
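Why are authenticator apps immune to SIM swapping? Because the code is never sent over the phone network: it is computed locally from a shared secret and the current time. Here is a compact sketch of that calculation following the public TOTP standard (RFC 6238, with SHA-1 and 30-second steps). One simplification to be aware of: real apps usually receive the secret as a Base32 string, while this sketch takes raw bytes.

```python
import hashlib
import hmac
import struct
import time
from typing import Optional


def totp(secret: bytes, at_time: Optional[float] = None,
         step: int = 30, digits: int = 6) -> str:
    """Time-based one-time password per RFC 6238 (HMAC-SHA1 variant)."""
    if at_time is None:
        at_time = time.time()
    counter = int(at_time // step)                 # number of elapsed time steps
    msg = struct.pack(">Q", counter)               # 8-byte big-endian counter
    digest = hmac.new(secret, msg, hashlib.sha1).digest()
    offset = digest[-1] & 0x0F                     # dynamic truncation (RFC 4226)
    code = struct.unpack(">I", digest[offset:offset + 4])[0] & 0x7FFFFFFF
    return str(code % 10 ** digits).zfill(digits)


# Test vector from RFC 6238, Appendix B (secret "12345678901234567890", t = 59 s).
print(totp(b"12345678901234567890", at_time=59, digits=8))  # 94287082
```

Since both sides derive the same digits independently, there is no SMS for an attacker to intercept. The remaining risk is a victim reading the code aloud to a convincing caller, which is exactly why hardware keys are the stronger option.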

Technical Appendix (Advanced): How Do LLMs Learn Deception?

To deeply understand the threat, we must look under the hood of these models. Models used for social engineering are fine-tuned on massive datasets of human conversations, persuasion psychology books, and logs of successful phishing attacks.

Unethical Fine-Tuning Process:

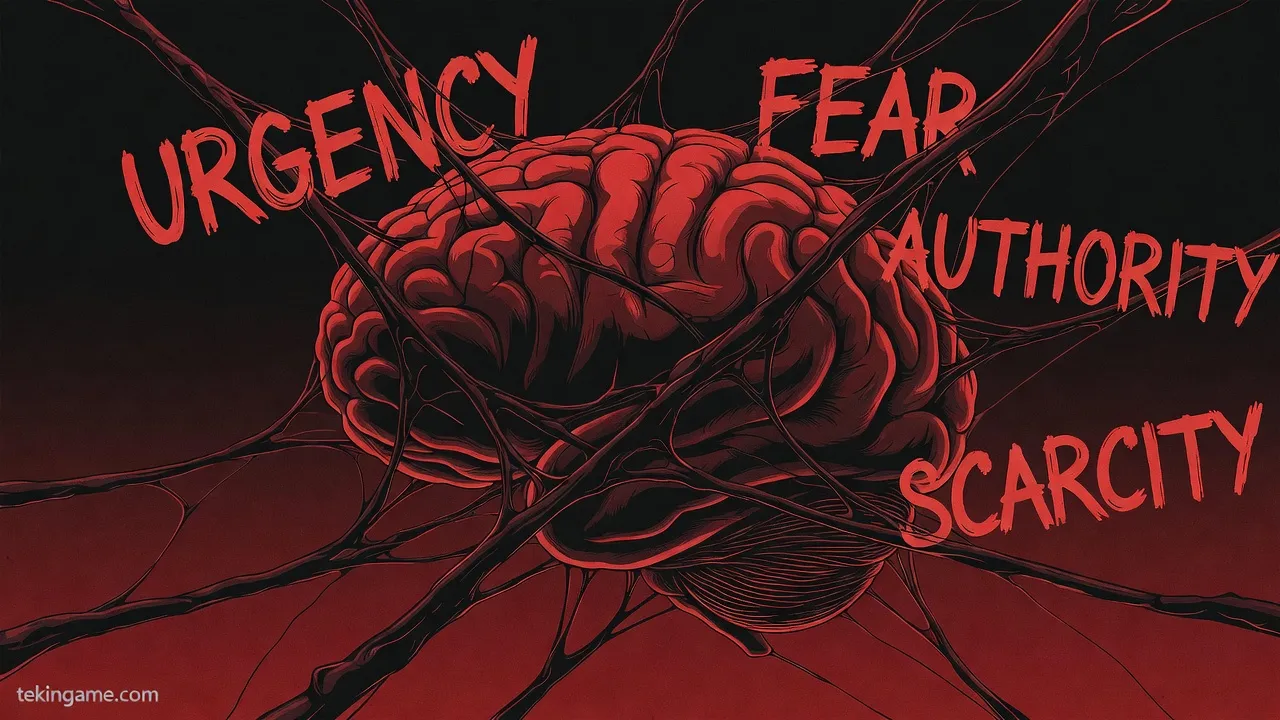

While models like ChatGPT use RLHF (Reinforcement Learning from Human Feedback) to be "helpful," malicious models use the reverse. The model gets a reward signal whenever it successfully deceives a human. Training focuses on:

- Scarcity & Urgency: Inducing a fear of missing out, which activates "System 1" thinking (fast, emotional) and shuts down "System 2" (logical). Research shows effective IQ drops by 30% when the brain is in "fight or flight" mode. AI knows this.

- Social Proof: Claiming "other executives approved this." Highly effective in corporate attacks.

- Authority: Impersonating police or CEOs to trigger blind obedience. Models have learned that "Implied Threats" are often more effective than direct ones.

The result is a "Digital Psychopath" optimized purely to maximize its reward function (stealing assets).
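Defenders can use the same three levers as a checklist. As a deliberately naive sketch, the snippet below flags urgency, authority, and social-proof phrases in a message or call transcript. The phrase lists are invented for illustration, and plain keyword matching is trivial to evade, so treat this as a teaching aid rather than a detector.

```python
import re

# Hypothetical cue phrases, grouped by the levers described above.
CUES = {
    "urgency": ["urgent", "immediately", "right now", "or the deal is off"],
    "authority": ["ceo", "police", "legal action"],
    "social_proof": ["already approved", "other executives"],
}


def pressure_cues(text):
    """Return {category: [matched phrases]} for whole-phrase, case-insensitive hits."""
    lowered = text.lower()
    found = {}
    for category, phrases in CUES.items():
        hits = [p for p in phrases
                if re.search(r"\b" + re.escape(p) + r"\b", lowered)]
        if hits:
            found[category] = hits
    return found


message = ("This is urgent. The other executives already approved it, "
           "so wire the money immediately.")
print(pressure_cues(message))
```

A message that trips two or more categories at once is a good prompt to slow down and switch channels, even if every individual phrase looks harmless.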

Economic Analysis: The "Deception-as-a-Service" Economy

The cybercrime economy is transforming. "CaaS" (Crime-as-a-Service) has evolved into "Social-Engineering-as-a-Service."

Today on the dark web, sites like ShadowAI offer monthly subscriptions. For $500/month, you get a dashboard that:

- Generates targeted Spear Phishing emails.

- Clones victim voices automatically.

- Scripts live conversation flows.

This means the barrier to entry is zero. Anyone with a stolen credit card can launch a campaign. The "Scalability of Crime" is the decade's biggest threat.

New Corporate Policies: The Death of BYOD

"Bring Your Own Device" (BYOD) is dead. It's an unacceptable risk. When a personal phone is infected with a spy agent, your boardroom meetings are broadcast to competitors.

Leading orgs are returning to "Hardened" phones and isolated environments. By 2027, we predict standard smartphones will be banned in sensitive zones (server rooms, R&D labs), replaced by enterprise-only gadgets with disabled mics/cameras.

Final Conclusion: The Human Element

Ultimately, no technology can replace human instinct. AI can mimic, but it cannot "understand." Your gut feeling—that vague sense that "something isn't right"—is still the world's most powerful antivirus. Trust your instinct, slow down, and always verify. In a world of digital lies, your skepticism is the ultimate truth.

The Geopolitical Angle: When Nations Enter the Game (AI Cold War)

We cannot conclude this discussion without addressing the national security implications of this technology. "Vishing" and "Deepfake" tools are not just for stealing money from corporations; they are new weapons in the arsenal of Hybrid Warfare.

Destabilizing Democracies

Imagine that on the eve of a major election, thousands of "leaked" audio files are released in which candidates appear to say racist things or admit to corruption. Even if these files are later debunked, the damage is done. We saw small examples of this in 2024 (Biden robocall), but in 2026, these attacks will be real-time and interactive.

Nation-State Industrial Espionage

Countries can create cyber armies of AI agents whose goal is to infiltrate rival technology companies. An agent can befriend key engineers (on LinkedIn or virtual conferences), chat with them for months, and eventually extract the blueprints for a new chipset or the formula for a drug. This "Soft Espionage" is much cheaper and lower risk than physical infiltration or network hacking.

Paralyzing Critical Infrastructure

Another nightmare scenario is simultaneous calls to operators of power plants, air traffic control, and dams. If agents can spoof the voice of a superior and give "Emergency Shutdown" orders to 10 key operators, they can black out a country in minutes without firing a single missile.

This is the new reality governments must face. We need a "Social Engineering Non-Proliferation Treaty," similar to nuclear treaties, to control the use of these autonomous agents internationally.