1. Introduction: Confessions of a Webmaster

Hello friends, Majid here.

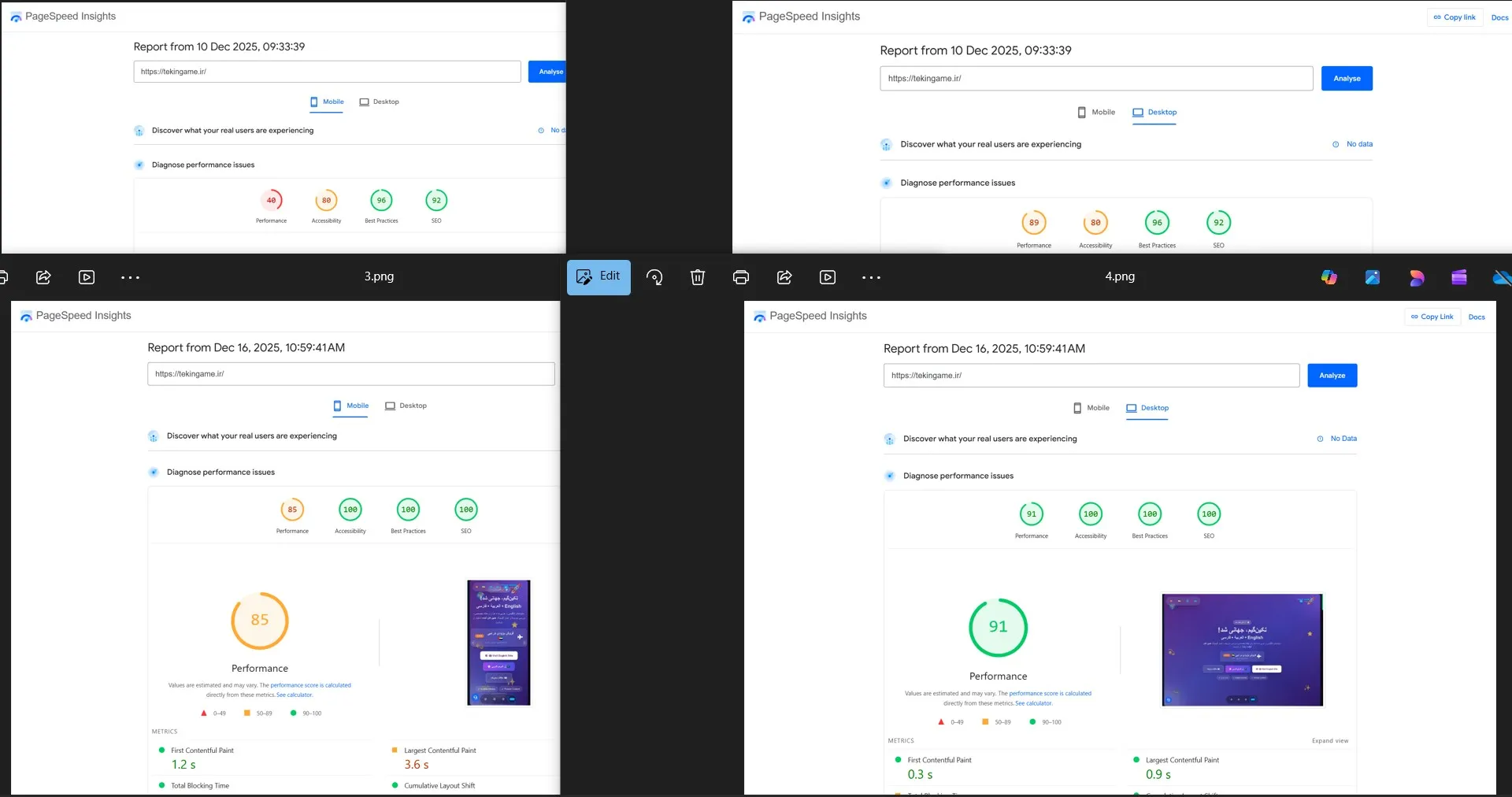

Today, I want to pull back the curtain and share a truth that is somewhat painful to admit, yet incredibly educational. Until very recently, if you had run "Tekin Game" through Google's testing tool, PageSpeed Insights, you would have been met with an ugly scene.

We were seeing scores in the 40s and 50s. We saw Red and Orange warnings everywhere. Technical errors, heavy code, and layout shifts were acting like a handbrake, stopping our site from reaching its full potential.

We had great content, but "under the hood," the engine was struggling.

What was the solution? Should we hire a massive team of senior developers and spend thousands of dollars? Or should we trust the technology of the future? I chose the second path. I decided to tear down our code and rebuild it using the most advanced AI tools available, tools that many in the general public don't even know exist yet.

2. The Reality Check: The Myth of the "Permanent 100"

Before we dive into the tools and the code, I need to clarify a crucial technical point. In the screenshots we share, you will see scores of 100. But does this mean the site speed is always 100, every single second of the day?

The short answer is: No. And anyone who promises you a "Permanent 100" is lying to you.

2.1. Why Do Scores Fluctuate?

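The performance score in Google PageSpeed Insights comes from a lab test, which is a simulation. Every time you click "Analyze," Google runs the test on one of its own servers, and that server may sit in a different data center each time (it could be in the US, Europe, or Asia). For the mobile test, it emulates a mid-range phone on a throttled, slow 4G connection.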

Because of network latency, server response times (TTFB), and the physical distance between the test server and our host, scores naturally fluctuate.
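If you want to watch this fluctuation yourself without clicking "Analyze" over and over, the same test is available through the public PageSpeed Insights API (v5). Here is a minimal Python sketch, not the script we used on the project. The function names are my own, and it assumes the response keeps the performance score under `lighthouseResult.categories.performance.score` as a value between 0 and 1.

```python
import json
from urllib.parse import urlencode
from urllib.request import urlopen

PSI_ENDPOINT = "https://www.googleapis.com/pagespeedonline/v5/runPagespeed"


def build_psi_url(page_url: str, strategy: str = "mobile") -> str:
    """Build the request URL for one PageSpeed Insights lab test."""
    query = urlencode({"url": page_url, "strategy": strategy})
    return f"{PSI_ENDPOINT}?{query}"


def performance_score(psi_response: dict) -> int:
    """Convert the 0..1 performance score into the familiar 0..100 scale."""
    raw = psi_response["lighthouseResult"]["categories"]["performance"]["score"]
    return round(raw * 100)


def run_once(page_url: str) -> int:
    """Run one test over the network and return its score (can take a while)."""
    with urlopen(build_psi_url(page_url), timeout=120) as resp:
        return performance_score(json.load(resp))
```

Calling `run_once("https://example.com/")` a few times in a row should show the same kind of spread described here. For heavier use, Google asks you to attach an API key, which this sketch leaves out.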

You might run a test now and get a 98. Two minutes later, you might get a 92. A third time might hit 100.

This fluctuation is normal. Our goal in this project was not to chase the vanity metric of a "Perfect 100" (which is just a number). Our goal was to "Escape the Red Zone" and achieve "Stability in the Green Zone" (consistently 90 or above). That is where the AI performed miracles.
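One way to make "Stability in the Green Zone" concrete is to judge a batch of runs instead of a single lucky one. The sketch below is only an illustration: the `summarize_runs` helper is a name I made up, and the sample scores are the three from the example above.

```python
from statistics import median

GREEN_THRESHOLD = 90  # PageSpeed Insights shows scores of 90-100 in green


def summarize_runs(scores: list[int]) -> dict:
    """Summarize several test runs so one outlier does not decide the verdict."""
    if not scores:
        raise ValueError("need at least one score")
    return {
        "median": median(scores),
        "lowest": min(scores),
        "highest": max(scores),
        # "Stable" here means that even the worst run stayed green.
        "stable_green": min(scores) >= GREEN_THRESHOLD,
    }


summary = summarize_runs([98, 92, 100])
print(summary)
```

The median is a better headline number than the best run, and checking the lowest run tells you whether the site ever slips out of the green.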

2.2. Green is the Goal

The difference between a score of 48 (Red) and 90 (Green) is the difference between a user leaving your site in frustration and a user staying to read your content. Once you are in the Green Zone, the user experience is excellent. That is the victory we are celebrating today.
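For reference, the color zones follow fixed score ranges: 0 to 49 is red, 50 to 89 is orange, and 90 to 100 is green. A tiny Python sketch of that mapping (the function name is my own):

```python
def score_zone(score: int) -> str:
    """Map a 0..100 PageSpeed score to its color zone."""
    if not 0 <= score <= 100:
        raise ValueError("score must be between 0 and 100")
    if score >= 90:
        return "green"
    if score >= 50:
        return "orange"
    return "red"


print(score_zone(48), score_zone(90))
```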

3. Meet the Digital Surgery Team

To accomplish this "Mission Impossible," I didn't code alone. I employed an "Invisible Army" of AI agents that acted as my Senior Engineering Team.

3.1. Trae: The Assistant That Reads Minds

Our primary weapon was the powerful code editor, Trae.

What makes Trae different from standard autocomplete is that it understands "Context." It reads the entire project structure. When I wanted to optimize a section of the website header, Trae didn't just look at that one file. It warned me: "Majid, if you change this CSS class, you might break the mobile menu in the footer."

This level of contextual awareness prevented regressions and, by my rough estimate, sped up our workflow about 10x.

3.2. Claude Opus 4.5: The Logic Architect

For the complex, heavy lifting, I turned to the "Final Boss" of language models: Claude Opus 4.5.

I would feed it our legacy, "spaghetti" JavaScript code and say: "Pretend you are a Senior Google Performance Engineer. Rewrite this logic so it achieves the same result, but consumes 50% less memory."

The results were astonishing. Claude refactored complex functions into clean, modern, and highly efficient code that the browser could execute instantly.

3.3. Amazon Kiro & Antigravity

Finally, we used specialized tools like Amazon Kiro and Google's Antigravity for debugging.

When PageSpeed Insights complained that "Largest Contentful Paint (LCP) is too slow," it didn't say why. Kiro acted as a detective. It pinpointed the exact line of code and the specific image that was holding up the render, offering a solution before I even finished reading the error log.
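Before asking any tool why LCP is slow, it helps to know how slow it actually is. The Lighthouse report that sits behind PageSpeed Insights stores the value in milliseconds under `audits["largest-contentful-paint"]["numericValue"]`. The sketch below reads that number and rates it against the published Core Web Vitals thresholds (good up to 2.5 s, poor above 4.0 s). The sample report is invented for illustration and is not our real data.

```python
def lcp_seconds(lighthouse_result: dict) -> float:
    """Read Largest Contentful Paint from a Lighthouse result, in seconds."""
    millis = lighthouse_result["audits"]["largest-contentful-paint"]["numericValue"]
    return millis / 1000.0


def rate_lcp(seconds: float) -> str:
    """Rate an LCP value against the Core Web Vitals thresholds."""
    if seconds <= 2.5:
        return "good"
    if seconds <= 4.0:
        return "needs improvement"
    return "poor"


# Hypothetical report fragment, made up for this example.
sample_report = {"audits": {"largest-contentful-paint": {"numericValue": 5200.0}}}
print(lcp_seconds(sample_report), rate_lcp(lcp_seconds(sample_report)))
```

This only tells you that there is a problem. Finding the responsible image or script is the detective work described above.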

4. Operation Report: How We Saved the Site

Let's look at the tangible results of this human-AI collaboration (refer to the Before & After image):

4.1. Performance: The War on Bloat

This was the critical battlefield. With AI assistance, we:

- Identified and purged Unused JavaScript that had been rotting in the codebase.

- Automated the conversion of all image assets to next-gen formats like WebP and AVIF.

- Restructured the loading priority so that the "First Contentful Paint" happens in under 0.8 seconds.
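To give a feel for the second and third points, here is one common pattern, sketched in Python as a small template helper. It is not our actual template code, and the file paths are hypothetical. It emits a `<picture>` element that offers AVIF first, then WebP, then a JPEG fallback, sets explicit `width` and `height` so the browser can reserve space and avoid layout shifts, and marks only the main above-the-fold image as high priority while everything else loads lazily.

```python
from html import escape


def picture_tag(base: str, alt: str, width: int, height: int, is_lcp: bool = False) -> str:
    """Build a <picture> element with AVIF and WebP sources and a JPEG fallback.

    `base` is the image path without extension, e.g. "/img/hero" (hypothetical).
    """
    # The main above-the-fold image is fetched early; the rest can wait.
    priority = 'fetchpriority="high"' if is_lcp else 'loading="lazy"'
    src = escape(base)
    return (
        "<picture>\n"
        f'  <source srcset="{src}.avif" type="image/avif">\n'
        f'  <source srcset="{src}.webp" type="image/webp">\n'
        f'  <img src="{src}.jpg" alt="{escape(alt)}" '
        f'width="{width}" height="{height}" {priority}>\n'
        "</picture>"
    )


print(picture_tag("/img/hero", "Site banner", 1200, 630, is_lcp=True))
```

The browser picks the first source it supports, so older browsers still get the JPEG.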

4.2. Accessibility: The Internet is for Everyone

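Accessibility is not just about being nice; it is one of the four categories PageSpeed Insights scores, and much of what helps screen readers (clear structure, descriptive labels) helps search engines understand the page too. The AI acted as a "Blind User," scanning our DOM structure.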

It pointed out where color contrast was too low for visually impaired users, where buttons lacked ARIA labels, and where navigation was confusing for screen readers. Fixing these issues pushed our Accessibility score to a perfect 100.
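The color contrast check is plain arithmetic that you can reproduce yourself. WCAG 2.x defines a relative luminance for each color and a contrast ratio between two colors, and level AA asks for at least 4.5:1 for normal text (3:1 for large text). A minimal Python sketch of that formula, with function names of my own choosing:

```python
def _linear(channel_8bit: int) -> float:
    """Convert one 0..255 sRGB channel to linear light (WCAG 2.x formula)."""
    c = channel_8bit / 255
    return c / 12.92 if c <= 0.03928 else ((c + 0.055) / 1.055) ** 2.4


def relative_luminance(hex_color: str) -> float:
    """Relative luminance of a color written as '#rrggbb'."""
    h = hex_color.lstrip("#")
    r, g, b = (int(h[i:i + 2], 16) for i in (0, 2, 4))
    return 0.2126 * _linear(r) + 0.7152 * _linear(g) + 0.0722 * _linear(b)


def contrast_ratio(foreground: str, background: str) -> float:
    """WCAG contrast ratio, from 1.0 (identical) up to 21.0 (black on white)."""
    lighter, darker = sorted(
        (relative_luminance(foreground), relative_luminance(background)),
        reverse=True,
    )
    return (lighter + 0.05) / (darker + 0.05)


def passes_aa(foreground: str, background: str, large_text: bool = False) -> bool:
    """Check the WCAG AA minimum: 4.5:1 for normal text, 3:1 for large text."""
    return contrast_ratio(foreground, background) >= (3.0 if large_text else 4.5)


print(round(contrast_ratio("#777777", "#ffffff"), 2), passes_aa("#777777", "#ffffff"))
```

Light gray text on a white background is the classic failure: it looks elegant in a design mockup and is unreadable for many visitors.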

4.3. SEO & Best Practices: The Machine Language

We didn't just stuff keywords. We used Claude to write valid Structured Data (Schema). Now, our site speaks Google's native language fluently, which helps with indexing and rounds out those beautiful SEO and "Best Practices" scores.
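If you have never seen Structured Data, it is usually a small JSON-LD block in the page's HTML that describes the content using the schema.org vocabulary. The Python sketch below builds one for an article. It is an illustration, not the markup we ship: the headline and date in the usage line are placeholders, and a real page would normally carry more properties (image, URL, and so on).

```python
import json


def article_json_ld(headline: str, author: str, publisher: str, date_published: str) -> str:
    """Return a JSON-LD <script> tag describing an article with schema.org terms."""
    data = {
        "@context": "https://schema.org",
        "@type": "Article",
        "headline": headline,
        "author": {"@type": "Person", "name": author},
        "publisher": {"@type": "Organization", "name": publisher},
        "datePublished": date_published,  # ISO 8601 date
    }
    payload = json.dumps(data, indent=2, ensure_ascii=False)
    # Escape "<" so the text can never close the surrounding <script> tag early.
    payload = payload.replace("<", "\\u003c")
    return f'<script type="application/ld+json">\n{payload}\n</script>'


# Placeholder values, for illustration only.
print(article_json_ld("Example headline", "Majid", "Tekin Game", "2025-01-01"))
```

Whatever tool writes the markup, it is worth pasting the result into Google's Rich Results Test before trusting it.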

5. The Future of Tekin Plus (Teaser)

Why am I revealing these trade secrets?

Because Tekin Plus is going to be more than just a news site. It is going to be a university.

I have a big plan. Soon, on our YouTube channel and in special sections of this site, I will release "Behind the Scenes" videos. I want to show you the custom Admin Panel I built entirely with these AI tools.

I want to teach you how YOU can use Trae, Kiro, and Claude to revolutionize your own projects. This article is just the appetizer.

6. The Verdict

Will AI replace programmers?

Our final verdict on the AI-Verdict page is this: AI will not replace humans. It will replace humans who refuse to use AI.

We didn't trick Google with these scores. We respected the web standards by utilizing the best technology available. These Green Lights are not just numbers; they are a symbol of a new era at Tekin Plus.

Yours truly, Majid - Tekin Game