Introduction: Hello Tekin Army!

While everyone is busy talking about AI’s impact on jobs, creativity, and GPUs, a quieter crisis is unfolding in the background: water.

AI doesn’t just burn electricity. Every time you send a prompt to a large language model, render an AI-generated image, or deploy a new inference-heavy feature in your app, somewhere on the planet, water is being evaporated in a cooling tower or consumed at a power plant.

As of 2025, research and press reports put AI-linked water consumption in the hundreds of billions of liters per year, with one high-profile estimate at around 765 billion liters per year, more than the global bottled water industry’s total water demand. That comparison is not just clickbait; it’s a signal that digital infrastructure has become a major water user on par with physical industries.

In this deep-dive we will unpack a central question: “AI water consumption: why are AI models now using more water than the entire bottled water industry?”

In this article, you’ll learn:

- Where exactly water is consumed in the AI pipeline: data centers vs power generation.

- How much water a single chat or LLM request actually represents, in milliliters or bottles.

- Why 2025’s AI water use is being compared to the entire bottled water sector and to the annual needs of countries and megacities.

- What Microsoft, Google, and AI companies are reporting about their own water footprints.

- What scenarios researchers are projecting for 2027–2030 if AI growth continues unchecked.

- Which technical and policy solutions could bend the curve, from efficient cooling to renewable-powered data centers.

From One Chat to One Bottle: How Much Water Does AI Really Drink?

To make this tangible, let’s start at the level of a single interaction.

Researchers and journalists looking at the physical footprint of AI have broken down water use into two layers:

- Direct data center water use: water used on-site for cooling servers, mainly via evaporative cooling towers.

- Indirect water use via electricity generation: water consumed at power plants that generate the electricity needed for AI workloads.

On the per-user side, the numbers are surprisingly concrete:

- One widely cited estimate suggests that every 10–50 prompts sent to a large AI chatbot correspond to around 500 milliliters of water consumed, roughly a small bottle of drinking water.

- Other analyses estimate that each individual AI request is associated with between 0.26 milliliters and 39 milliliters of water use, depending on model efficiency, data center design, and the local power mix.

- According to reported company figures, OpenAI’s AI operations are associated with around 97.5 million liters of water per day, while Google’s AI operations consume on the order of 650,000 liters per day.

If you convert 97.5 million liters into 500 ml bottles, you get about 195 million bottles’ worth of water per day linked to AI workloads at just one major provider. That’s the daily scale we’re talking about.
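The arithmetic behind these figures is easy to check. The sketch below uses only the numbers quoted above plus the 500 ml bottle size; the function names are illustrative, not taken from any cited study.

```python
BOTTLE_ML = 500  # small drinking-water bottle, as used in the text


def liters_to_bottles(liters: float, bottle_ml: float = BOTTLE_ML) -> float:
    """Convert a volume in liters to a number of bottles."""
    return liters * 1000 / bottle_ml


def ml_per_prompt(total_ml: float, prompts: int) -> float:
    """Average water per prompt when `prompts` prompts share `total_ml`."""
    return total_ml / prompts


daily_liters = 97.5e6  # reported daily figure quoted above
bottles_per_day = liters_to_bottles(daily_liters)

# 500 ml spread over 10 prompts (thirstiest case) or 50 prompts (leanest case)
high_ml = ml_per_prompt(500, 10)
low_ml = ml_per_prompt(500, 50)

print(f"{bottles_per_day:,.0f} bottles per day")
print(f"{low_ml:.0f}-{high_ml:.0f} ml per prompt")
```

Note that the resulting 10 to 50 ml per prompt sits at or above the top of the 0.26 to 39 ml range from the other analyses, which shows how far apart the published estimates are.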

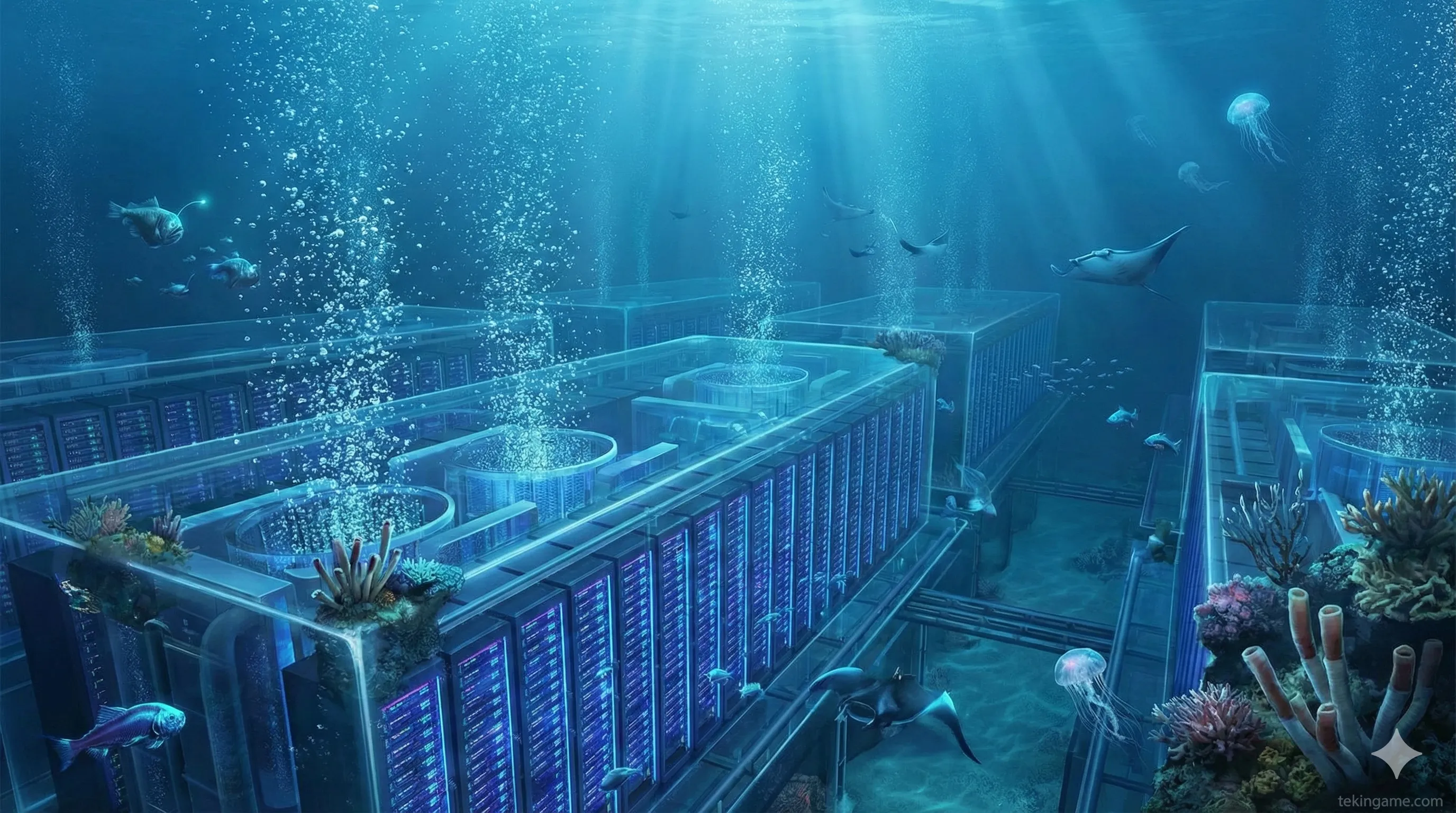

The Hidden Thirst of Data Centers: Cooling Towers and Power Plants

The core of the problem lies in how digital infrastructure turns computation into heat—and then into evaporated water.

1. Cooling towers: where water literally disappears into thin air

Modern AI models run in hyperscale data centers filled with thousands of GPUs or specialized accelerators. These chips run hot, especially during training runs, and that heat must be removed continuously.

Many data centers use evaporative cooling towers to generate cold water for their cooling loops. The mechanism is simple physics:

- Warm water carrying heat from servers is sent to a tower.

- A fraction of that water is evaporated, carrying away the heat.

- The remaining water is cooled and recirculated.

The catch:

- In hot, dry climates with low humidity, evaporation—and thus water consumption—spikes dramatically in summer.

- The heavier the AI compute load, the more heat must be removed, and the more water is evaporated.
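A rough physical sketch shows why heat translates into water. It assumes that all heat leaves the tower as evaporation and uses a latent heat of vaporization of about 2.4 MJ per kilogram, a textbook approximation for water near ambient temperature. Real towers also lose water to drift and blowdown and reject some heat without evaporating water, so treat the result as an order-of-magnitude illustration, not a measurement. The 1 MW load is a hypothetical example.

```python
LATENT_HEAT_MJ_PER_KG = 2.4  # assumed approximate value near ambient temperature
MJ_PER_KWH = 3.6             # exact: 1 kWh = 3.6 MJ
KG_PER_LITER = 1.0           # density of water, approximately


def evaporated_liters(heat_kwh: float) -> float:
    """Liters evaporated if ALL of `heat_kwh` is carried away as latent heat."""
    heat_mj = heat_kwh * MJ_PER_KWH
    return heat_mj / LATENT_HEAT_MJ_PER_KG / KG_PER_LITER


per_kwh = evaporated_liters(1.0)

# Hypothetical: 1 MW (1000 kW) of server heat sustained for 24 hours
per_day_1mw = evaporated_liters(1000 * 24)

print(f"{per_kwh:.2f} L per kWh of heat")
print(f"{per_day_1mw:,.0f} L per day for a 1 MW heat load")
```

Under these assumptions each kilowatt-hour of heat costs on the order of a liter or two of water, which is why sustained megawatt-scale loads add up quickly.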

2. “Off-site” water use: the power plant factor

Even if a data center minimizes or eliminates on-site water for cooling (e.g., via air cooling or direct liquid cooling with low evaporation), there is still the water used upstream to generate the electricity it consumes.

In many countries, large fractions of electricity come from thermoelectric plants (coal, gas, nuclear) and some types of hydro, all of which rely on significant volumes of water for cooling and process operations. Studies and policy reports consistently highlight electricity generation as one of the largest water-withdrawal sectors in national economies.

This means:

- Every extra watt-hour demanded by AI increases both direct and indirect water use.

- The true “water footprint” of AI is substantially larger than what data center site reports alone might suggest.
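One way to make the two layers explicit is to multiply the energy a workload uses by an on-site and an off-site water intensity, each in liters per kWh. The sketch below does that. The class, the function name, and the intensity values are illustrative assumptions, not measured figures, and the model ignores facility overhead such as the electricity drawn by the cooling system itself.

```python
from dataclasses import dataclass


@dataclass
class WaterIntensity:
    """Liters of water consumed per kWh; both values are site-specific."""
    onsite_l_per_kwh: float   # cooling at the data center
    offsite_l_per_kwh: float  # electricity generation upstream


def water_footprint_liters(energy_kwh: float, w: WaterIntensity) -> dict:
    """Split a workload's water footprint into direct and indirect parts."""
    direct = energy_kwh * w.onsite_l_per_kwh
    indirect = energy_kwh * w.offsite_l_per_kwh
    return {"direct": direct, "indirect": indirect, "total": direct + indirect}


# Placeholder intensities for illustration only
evaporative_site = WaterIntensity(onsite_l_per_kwh=1.0, offsite_l_per_kwh=3.0)
dry_cooled_site = WaterIntensity(onsite_l_per_kwh=0.0, offsite_l_per_kwh=3.0)

footprint = water_footprint_liters(1000.0, evaporative_site)
dry_footprint = water_footprint_liters(1000.0, dry_cooled_site)

print(footprint)
print(dry_footprint)
```

Setting `onsite_l_per_kwh` to zero models a site with no evaporative cooling. The indirect term remains, so the footprint never drops to zero unless the power supply itself uses no water.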

3. Explosive growth in AI workload

The data infrastructure context makes this more alarming:

- The data storage industry is growing at roughly 20% per year.

- The global AI market is projected to grow at around 40% per year.

With that kind of compounding, the electricity, cooling, and hence water requirements of AI could escalate much faster than traditional infrastructure can adapt.
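To see what that compounding means in practice, the sketch below applies the two growth rates quoted above. Treating market growth as a proxy for resource demand is a simplification, and the helper names are illustrative.

```python
import math


def growth_multiplier(annual_rate: float, years: float) -> float:
    """Factor by which a quantity grows after `years` of compounding."""
    return (1 + annual_rate) ** years


def doubling_time_years(annual_rate: float) -> float:
    """Years needed to double at a constant annual growth rate."""
    return math.log(2) / math.log(1 + annual_rate)


STORAGE_RATE = 0.20  # ~20% per year, from the text
AI_RATE = 0.40       # ~40% per year, from the text

for years in (1, 3, 5):
    print(f"after {years} yr: "
          f"storage x{growth_multiplier(STORAGE_RATE, years):.2f}, "
          f"AI x{growth_multiplier(AI_RATE, years):.2f}")

print(f"doubling time: storage {doubling_time_years(STORAGE_RATE):.1f} yr, "
      f"AI {doubling_time_years(AI_RATE):.1f} yr")
```

At 40% per year a quantity roughly doubles every two years and grows more than fivefold in five, while 20% per year takes almost four years to double.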

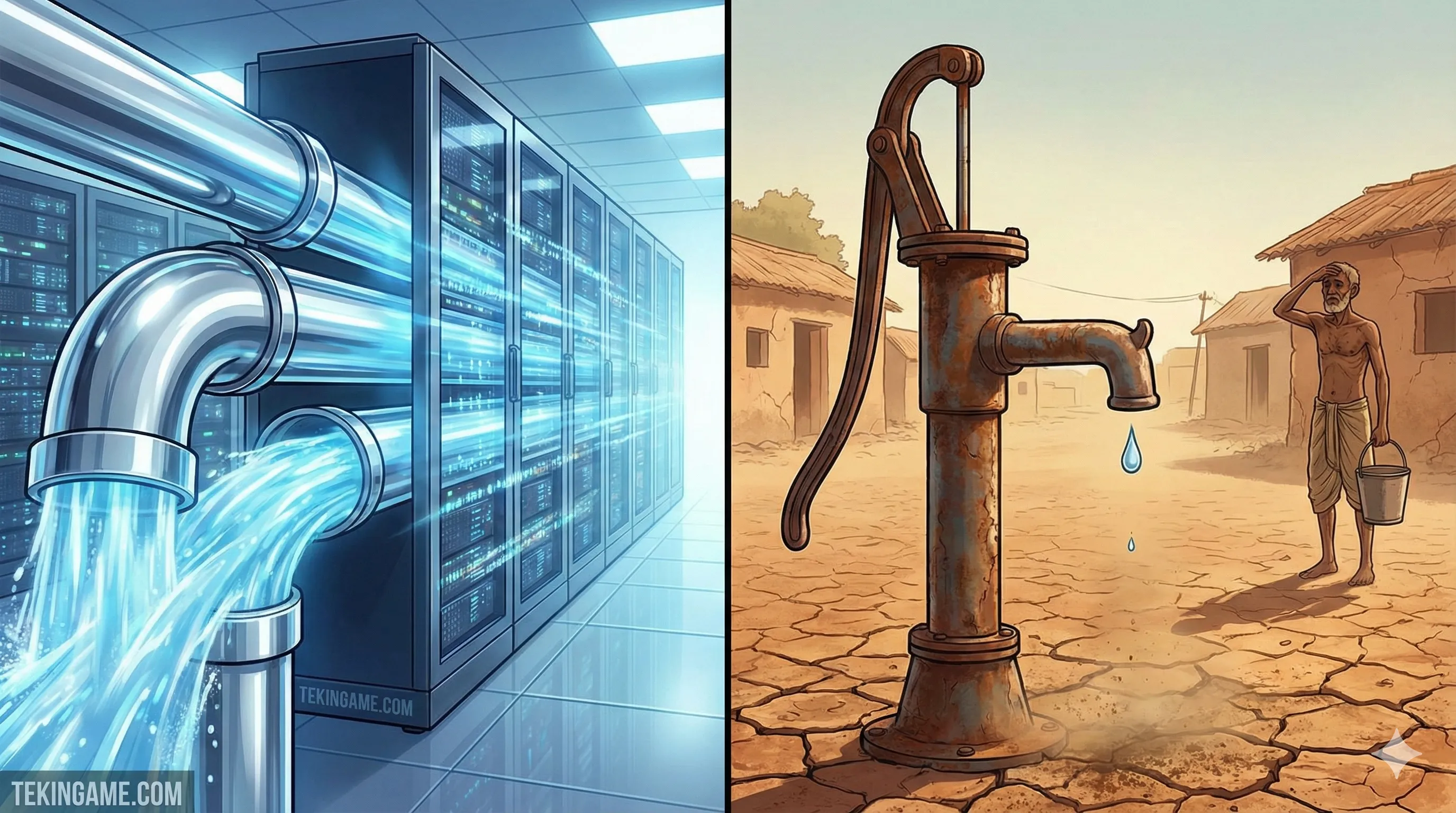

Why the Numbers Turned Shocking: AI vs Bottled Water and Cities

This is where the comparisons that caught headlines come in: AI vs all bottled water, and AI vs cities.

1. The 765-billion-liter milestone

Recent estimates put AI-related water use at around 765 billion liters per year as of 2025. Analysts and journalists point out that this figure:

- Exceeds the total water use of the global bottled water industry, which itself is massive.

- Is greater than the global demand for bottled drinking water in some modeled scenarios.

In other words, if you imagine all the factories filling plastic water bottles worldwide, the AI ecosystem as a whole is now out-drinking them.

2. Comparing AI to national and urban water use

Research modeling future AI growth suggests that by around 2027, AI could require an additional 4.2–6.6 billion cubic meters of water withdrawals annually. For scale:

- That exceeds about half of the United Kingdom’s total annual water withdrawals.

- It is comparable to or greater than the annual water use of multiple large cities combined.

Once an ostensibly “virtual” technology starts showing up next to countries and megacities in water statistics, it clearly becomes a resource governance issue, not just a tech optimization problem.
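For a sense of scale, the 2027 projection can be put in the same unit as the 765 billion liter estimate. The sketch below does the conversion. Keep in mind that the projection is a withdrawal figure while the 765 billion liter number is described as consumption, so the ratio is only a rough comparison; the variable names are illustrative.

```python
LITERS_PER_CUBIC_METER = 1000


def cubic_meters_to_liters(m3: float) -> float:
    """Convert cubic meters of water to liters."""
    return m3 * LITERS_PER_CUBIC_METER


projection_m3 = (4.2e9, 6.6e9)  # projected ~2027 withdrawals, from the text
current_liters = 765e9          # consumption estimate, from the text

projection_liters = tuple(cubic_meters_to_liters(v) for v in projection_m3)
ratios = tuple(v / current_liters for v in projection_liters)

for liters, ratio in zip(projection_liters, ratios):
    print(f"{liters / 1e9:,.0f} billion liters, "
          f"about {ratio:.1f}x the 765 billion liter figure")
```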

3. Corporate numbers: Microsoft and Google as early indicators

Corporate sustainability reports reveal how fast things are changing:

- Microsoft disclosed that its global water consumption increased by about 35% between 2021 and 2022, reaching nearly 1.7 billion gallons (around 6.4 billion liters). That’s enough to fill over 2,500 Olympic-size swimming pools.

- Google reported about a 20% increase in its direct water use over the same period.

Both companies explicitly link a significant share of this surge to the rise of AI workloads in their data centers.
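The Microsoft figures can be cross-checked with a few lines of arithmetic. The sketch assumes US liquid gallons and a 50 m x 25 m x 2 m Olympic pool (2.5 million liters); the 2021 value is only what the rounded 35% increase implies, not a reported number.

```python
LITERS_PER_US_GALLON = 3.785411784  # exact definition of the US liquid gallon
OLYMPIC_POOL_LITERS = 2_500_000     # assumed: 50 m x 25 m x 2 m pool

gallons_2022 = 1.7e9  # figure quoted above
increase = 0.35       # ~35% rise from 2021 to 2022, quoted above

liters_2022 = gallons_2022 * LITERS_PER_US_GALLON
pools = liters_2022 / OLYMPIC_POOL_LITERS
implied_gallons_2021 = gallons_2022 / (1 + increase)

print(f"{liters_2022 / 1e9:.2f} billion liters in 2022")
print(f"{pools:,.0f} Olympic pools")
print(f"{implied_gallons_2021 / 1e9:.2f} billion gallons implied for 2021")
```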

Inside Large Models: From Training Runs to Per-Prompt Milliliters

To understand why modern AI is becoming so water-intensive, we need to separate training from inference and look at algorithmic efficiency.

1. Training: the real water monster

Training frontier-scale models is where resource use peaks:

- Thousands of GPUs or TPUs run near full load for weeks or months.

- Data centers must continuously remove vast amounts of heat, driving up cooling demand and water evaporation.

- The electricity for these GPU farms usually comes at least partly from water-dependent power generation.

Academic analyses have estimated that training a single very large model can use water comparable to the daily consumption of a small city, depending on the data center location and cooling technology.

2. Inference: billions of “tiny” requests that add up

Inference workloads—serving users—are individually lighter but astronomically frequent:

- Each chat, API call, or autocomplete triggers a modest but non-zero amount of computation.

- As noted, estimates range from 0.26 ml to 39 ml of water per AI request when indirect power-related water use is included.

- At billions of daily requests, the upper end of that range adds up to tens to hundreds of millions of liters per day across providers; even the lower end reaches hundreds of thousands to millions of liters per day.
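The scaling from milliliters per request to liters per day can be sketched as follows. The per-request range comes from the estimates above; the daily request counts are hypothetical placeholders, not reported traffic figures.

```python
ML_PER_LITER = 1000

LOW_ML_PER_REQUEST = 0.26  # lower estimate, from the text
HIGH_ML_PER_REQUEST = 39   # upper estimate, from the text


def daily_liters(requests_per_day: float, ml_per_request: float) -> float:
    """Total liters per day for a given request volume and per-request water use."""
    return requests_per_day * ml_per_request / ML_PER_LITER


# Hypothetical request volumes for illustration
for requests in (1e9, 5e9):
    low = daily_liters(requests, LOW_ML_PER_REQUEST)
    high = daily_liters(requests, HIGH_ML_PER_REQUEST)
    print(f"{requests:,.0f} requests/day: {low:,.0f} to {high:,.0f} liters/day")
```

The spread between the low and high results is a factor of 150, so the per-request assumption matters far more than the exact request count.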

3. Algorithmic efficiency and model design

Another critical vector is efficiency—both at the model and system levels:

- More efficient architectures (e.g., better attention mechanisms, sparsity, distillation) can deliver similar or better performance using fewer FLOPs, reducing energy and water use per request.

- Using smaller, specialized models for narrow tasks instead of routing everything through a single giant general-purpose model can also significantly cut resource use.

- Improving AI algorithm efficiency directly lowers compute requirements, and thus indirectly reduces water consumption.

2027–2030 Scenarios: When AI’s Thirst Meets a Thirsty Planet

Researchers and infrastructure planners are sketching several plausible scenarios for the next five years.

1. The “business-as-usual” growth curve

If current trends continue without major intervention:

- AI-related water withdrawals could reach the 4.2–6.6 billion m³ range by ~2027.

- AI could rival entire industrial sectors in water use, compounding stress in already water-scarce regions.

- Localized hotspots—where data centers cluster around favorable tax, fiber, and energy conditions—may see direct competition between data centers, cities, and agriculture for water.

2. Water stress in dry regions

Placing hyperscale AI data centers in water-stressed areas is particularly risky:

- These regions already face tight margins between supply and demand due to climate change and overuse.

- Adding a large industrial-scale water consumer—especially one whose usage can spike rapidly with AI demand—heightens the risk of shortages and political backlash.

3. A mitigation scenario: what if we take this seriously?

On the optimistic side, there is a credible mitigation path if companies and regulators act proactively:

- Cooling optimization: shifting to more efficient systems, including advanced air cooling, hybrid cooling, or non-evaporative liquid cooling where climate allows, cutting direct water use substantially.

- Smarter siting: building new data centers in regions with abundant water and/or climates well-suited for low-water cooling, and away from already-stressed basins.

- Renewable energy: moving AI data centers onto solar and wind power reduces dependence on water-intensive thermoelectric generation.

- Algorithmic and hardware efficiency: continued progress on model efficiency and accelerator design can dramatically lower FLOPs per unit of useful work.

- Water treatment and reuse: using on-site treatment, greywater, or recycled sources instead of potable water for cooling processes.

Conclusion: Future Outlook

AI’s water footprint has crossed an important symbolic line: when your sector’s water use beats the entire bottled water industry and begins to rival national consumption figures, you are no longer an invisible background load—you are a major part of the global water story.

The key levers are now clear: where we build data centers, how we cool them, what powers them, and how efficient our models and algorithms are. Microsoft, Google and others have announced goals to significantly reduce net water impacts by 2030, focusing on better cooling, water reuse, and renewable-powered facilities.

For developers, users, and policymakers, the next step is to treat water as a first-class metric for AI infrastructure—alongside carbon, latency, and cost. Every prompt, every training run, and every rollout has a hidden water bill attached. The sooner we make that visible, the better our chances of keeping AI’s thirst compatible with a warming, drying planet.