1. Redefining Intelligence: SLM vs. LLM (Quality Over Quantity)

For years, the industry equated "Intelligence" with "Knowledge." We assumed that for an AI to be smart, it had to memorize the entire internet. Large Language Models (LLMs) like GPT-4 or Claude 3 Opus were like the Library of Congress—containing every book ever written. But what is the problem with a national library? It is massive, navigating it is slow, and you need special permission (internet access) to enter.

In 2026, the definition has shifted. Small Language Models (SLMs) are like specialized field handbooks. An SLM with 3 billion parameters might not know 17th-century French poetry, but it can summarize your emails, manage your calendar, and debug routine code faster than a giant model, and often just as accurately. The secret sauce is "Data Quality." Instead of feeding the model the entire "noisy" internet, engineers now train compact models on "textbook-quality," highly curated and often synthetic data. The result? A model that is 10x smaller or more, yet punchier and far less prone to hallucination on the specific tasks it was built for.

2. The Latency & Energy Crisis: Why the Cloud Hit a Wall

Two hard physical constraints forced Big Tech to slam the brakes on the "Bigger is Better" train: Speed and Power.

- The Speed Limit (Latency): In the fast-paced world of 2026, nobody wants to wait 3 seconds for a spinning loading wheel after asking Siri or Gemini a question. Cloud-based AI is bound by the speed of light, network congestion, and server queues. If you are in a subway tunnel or on a plane, cloud AI is a brick. On-Device AI takes the network out of the equation. The response starts almost immediately because the "brain" is right there on the silicon.

- The Energy Cliff: By 2025, data centers were, by some estimates, consuming more electricity than entire mid-sized nations, with AI workloads a fast-growing share. The environmental and financial costs became hard to sustain. Shifting the processing load to the "Edge" (billions of user devices) distributes this energy cost, and a small model on an efficient NPU typically needs far less energy per request than a giant model in a server rack. Now, your phone's battery pays the energy bill for your AI tasks, not a coal-fired power plant in Virginia. This is one of the most practical paths to sustainable, "Green AI."

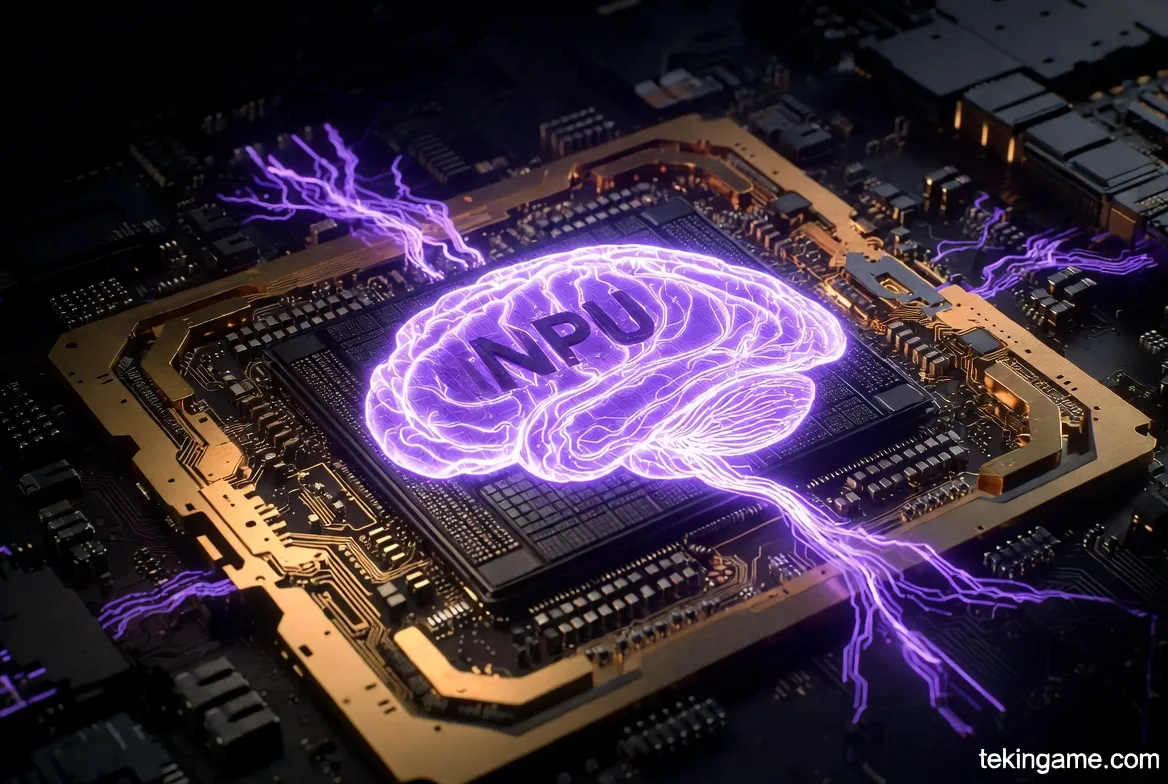

3. The Hardware War of 2026: The Rise of the NPU and AI PCs

Software is nothing without silicon. The SLM revolution owes its existence to a specific piece of hardware that is now as important as the CPU: the NPU (Neural Processing Unit).

Previously, the CPU and GPU did the heavy lifting. But in 2026, chips like the Snapdragon 8 Gen 5 and Apple's A19 Pro dedicate a massive portion of their die space to the NPU. These units are architecturally designed to solve the complex matrix mathematics of Transformers (the architecture behind AI) with extreme efficiency.

This gave birth to the "AI PC" standard. Laptops now come with dedicated "Copilot" keys and minimum hardware requirements measured in TOPS (Trillions of Operations Per Second). If your device in 2026 cannot perform at least 45 TOPS locally, it will struggle to run the newest on-device features and is effectively a generation behind. This hardware allows for real-time background processes (live translation, noise cancellation, contextual awareness) without draining the battery in an hour.

4. The Privacy Paradigm: My Data, My Device

Perhaps the biggest driver for the migration to small models was Fear. Fear from corporations and fear from consumers regarding data leakage.

When you ask a cloud-based AI to "Analyze this confidential PDF," you are uploading that document to someone else's server. In the corporate world, this is a nightmare. But with On-Device AI, the data never leaves the local environment. An SLM runs on the phone itself, inside the operating system's sandbox, reads the PDF, and generates the summary locally.

This enables features like "Total Recall" or "AI Rewind," where the OS records and indexes everything you see on your screen to make it searchable later. This feature would be a dystopian privacy disaster if it were cloud-based. But because the data is processed locally and stored encrypted on the device, it becomes a powerful productivity tool. In 2026, Privacy isn't just a policy; it's a hardware architecture.

5. Meet the Titans of Tiny: From Gemini Nano to Phi-4

Let's introduce the star players of the SLM arena in 2026:

- Google Gemini Nano 3: The king of Android. Optimized to run on everything from the Pixel 10 to mid-range Samsungs. It handles the "Magic Compose" features in messages and real-time call screening.

- Microsoft Phi-4: Microsoft proved that "Small" doesn't mean "Stupid." The Phi series, trained on synthetic textbooks, demonstrates reasoning capabilities that rival GPT-3.5 but runs on a laptop without a dedicated GPU. It is the brain behind Windows 12's local features.

- Mistral Edge: The European open-source champion. Mistral provides developers with highly efficient models that can be embedded into apps, allowing for custom, offline AI experiences without paying API fees to OpenAI.

- Apple Intelligence (Local Foundation): Apple's approach uses an on-device model of roughly 3 billion parameters that runs silently in the background, understanding personal context (who is your mom, what email did she send yesterday) without contacting a server for most everyday requests.

6. The Developer's Frontier: Building Offline-First AI Apps

For software engineers, 2026 is a golden age. Frameworks like Apple's Core ML, Google's TensorFlow Lite (since renamed LiteRT), and ONNX Runtime have matured significantly.

A solo developer can now take an open-source model (like Llama 4), "Quantize" it (compress it from 16-bit to 4-bit precision to reduce size without losing much intelligence), and embed it directly into their app bundle.
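To make "Quantize" a little more concrete, here is a minimal sketch of the idea in plain Python. It assumes the simplest possible scheme: one shared scale for the whole list of weights, mapped onto signed 4-bit integers. The function names (`quantize_int4`, `dequantize`, `model_size_gb`) are invented for this illustration and do not come from any framework; real toolchains use more refined schemes (per-group scales, calibration data), so treat this as a picture of the principle rather than a production recipe.

```python
def quantize_int4(weights):
    """Map floats to signed 4-bit integers in [-8, 7] using one shared scale."""
    max_abs = max(abs(w) for w in weights)
    # Hypothetical choice: the largest weight maps to 7 (symmetric quantization).
    scale = max_abs / 7 if max_abs > 0 else 1.0
    quantized = [max(-8, min(7, round(w / scale))) for w in weights]
    return quantized, scale


def dequantize(quantized, scale):
    """Recover approximate floats from the 4-bit integers."""
    return [q * scale for q in quantized]


def model_size_gb(num_params, bits_per_weight):
    """Rough storage for the weights alone (ignores scales and metadata)."""
    return num_params * bits_per_weight / 8 / 1e9


weights = [0.70, -0.32, 0.11, 0.00, -0.70]
q, scale = quantize_int4(weights)
restored = dequantize(q, scale)

print("4-bit values:", q)
print("restored:    ", [round(r, 2) for r in restored])
print("3B params at 16-bit:", model_size_gb(3_000_000_000, 16), "GB")
print("3B params at 4-bit: ", model_size_gb(3_000_000_000, 4), "GB")
```

The restored weights are close to the originals but not identical, which is the "without losing much intelligence" trade-off in miniature. The size arithmetic shows why this matters for an app bundle: under these assumptions, the weights of a 3-billion-parameter model shrink from about 6 GB to about 1.5 GB.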

The result? A photo editing app that uses generative fill, or a writing assistant that fixes grammar, all working perfectly in "Airplane Mode." This eliminates server costs for the developer and subscription fatigue for the user.

7. The Hybrid Future: Orchestrating the Cloud and the Edge

Does this mean the death of the massive Cloud LLM? Absolutely not. The future is Hybrid.

Imagine this workflow: You ask your phone, "Set an alarm for 7 AM."

The OS recognizes this is a simple task. The local SLM executes it instantly (Cheap, Fast, Private).

Then you ask, "Plan a 7-day itinerary for Kyoto based on 17th-century history."

The local SLM realizes this requires deep world knowledge. It hands off the query to the Cloud LLM (GPT-5 or Gemini Ultra). The heavy lifting is done in the cloud, and the result is sent back.

Operating Systems in 2026 act as "AI Traffic Controllers," intelligently routing tasks between the NPU in your pocket and the H100s in the cloud, balancing privacy, speed, and capability seamlessly.
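The "traffic controller" idea can be sketched in a few lines of Python. Everything here is hypothetical: the `Route` type, the `route` function, and the `SIMPLE_INTENTS` list are made up for illustration, and a real operating system would more plausibly use a small classifier model than a list of phrases. The sketch only shows the shape of the decision: stay local when offline, when the data is private, or when the task is simple; otherwise hand off to the cloud.

```python
from dataclasses import dataclass

# Hypothetical list of requests the local SLM is assumed to handle well.
SIMPLE_INTENTS = (
    "set an alarm",
    "set a timer",
    "add to my calendar",
    "summarize this email",
)


@dataclass
class Route:
    target: str  # "local" or "cloud"
    reason: str


def route(query, contains_private_data=False, online=True):
    """Decide where a request should run, checking the cheapest rules first."""
    text = query.lower()
    if not online:
        return Route("local", "no network available")
    if contains_private_data:
        return Route("local", "private data stays on the device")
    if any(text.startswith(intent) for intent in SIMPLE_INTENTS):
        return Route("local", "simple task")
    return Route("cloud", "needs broad world knowledge")


for request in (
    "Set an alarm for 7 AM",
    "Plan a 7-day itinerary for Kyoto based on 17th-century history",
):
    decision = route(request)
    print(f"{request!r} -> {decision.target} ({decision.reason})")
```

The order of the checks is the design choice worth noticing: availability and privacy are tested before capability, so a private or offline request never reaches the cloud even if the local model would give a weaker answer.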

Final Verdict: Small is Beautiful

We are transitioning from the era of "Awe" to the era of "Utility." AI is no longer just a magic chatbot in a web browser; it is an invisible layer painted over all our hardware. The shrinking of models has made AI more democratic, more private, and more sustainable.

In 2026, the power of a device isn't just defined by its CPU clock speed, but by the IQ of its NPU. And thankfully, that intelligence is now fully yours—sitting right there in the palm of your hand.

What is your take? Is offline privacy a must-have for you, or do you prefer the raw power of cloud-based models? Let us know in the comments below.