1. Introduction: The "Prompt Fatigue" is Real

In late 2022, when ChatGPT launched, the world was mesmerized. The ability to generate sonnets, code, and recipes in seconds felt like magic. But fast forward to December 2025, and the magic has worn off. We have hit a wall known as "Prompt Fatigue."

Users are realizing that chatbots are fundamentally passive. They are like a brilliant consultant locked in a room with no phone or internet access. You have to feed them context, you have to prompt them, and, most importantly, you have to take their output and do the work yourself.

You don't want an AI to tell you how to book a flight; you want the flight booked.

This friction is the catalyst for the next industrial revolution: The Age of Autonomous Agents.

2. Defining the Shift: From Chatbots to Agents

2.1. The Analogy: Brain vs. Brain + Hands

To understand the future, we must distinguish between two core concepts:

- LLM (Large Language Model): This is the "Brain." It has been trained on a vast share of the public internet. It can reason, summarize, and predict text, but on its own it only produces text. (Example: GPT-4).

- AI Agent: This is the "Brain" equipped with "Hands" (Tools) and "Eyes" (Sensors). It can browse the web, click buttons, access APIs, and send emails.
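The difference can be sketched in a few lines of Python. This is a minimal illustration, not a real framework: `fake_llm`, `get_weather`, and the `CALL <tool> <argument>` text format are all hypothetical stand-ins invented for this example.

```python
from typing import Callable, Dict


def fake_llm(prompt: str) -> str:
    """Stand-in for a real LLM call: the 'brain' can only produce text."""
    if "weather" in prompt.lower():
        return "CALL get_weather Paris"
    return "I can only describe how to do that."


def get_weather(city: str) -> str:
    """Hypothetical tool; a real one would call a weather API."""
    return f"Sunny in {city}"


# The 'hands': a registry mapping tool names to callable functions.
TOOLS: Dict[str, Callable[[str], str]] = {"get_weather": get_weather}


def agent(prompt: str) -> str:
    """The 'brain plus hands': run the tool the model asks for, if any."""
    reply = fake_llm(prompt)
    parts = reply.split(maxsplit=2)
    if len(parts) == 3 and parts[0] == "CALL" and parts[1] in TOOLS:
        return TOOLS[parts[1]](parts[2])
    # No tool requested: the agent behaves like a plain chatbot.
    return reply
```

The model never touches the outside world itself. It only emits a request, and the surrounding program decides whether and how to carry it out.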

2.2. Enter the LAM (Large Action Model)

While LLMs deal with tokens (text), part of the industry is now focusing on LAMs (Large Action Models). Companies like Rabbit, Adept, and Google are training models not just on text, but on user interfaces. These models learn what a "Checkout" button looks like, how to navigate a dropdown menu, and how to troubleshoot a failed login attempt. In principle, this lets AI interact with any software designed for humans, even when no API exists.

3. Under the Hood: How Agents Work

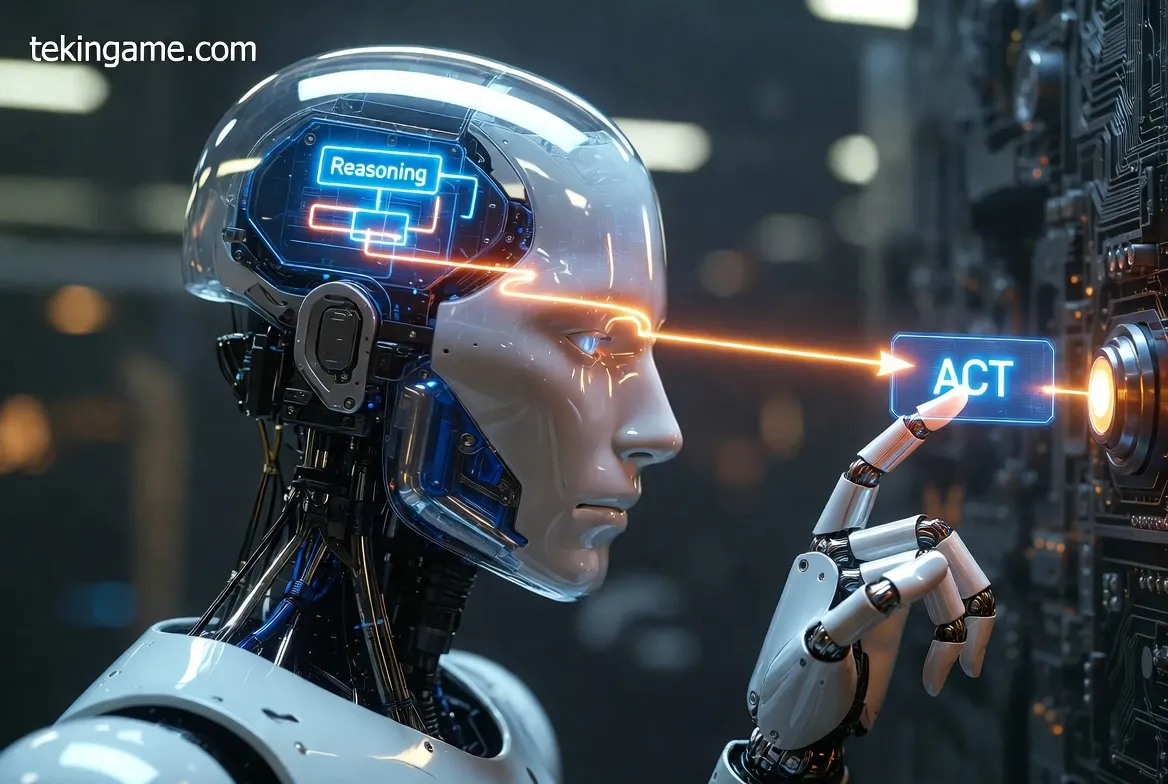

3.1. The ReAct Loop

How does an agent actually "live"? It runs a continuous loop often described with the ReAct pattern (Reasoning + Acting), a term that comes from a 2022 research paper:

- Perception: The agent reads the environment (e.g., "I have a new email from the boss").

- Reasoning: It thinks about the next step (e.g., "The boss wants a sales report. I need to open Excel").

- Action: It executes the tool (e.g., Opens Excel via API).

- Observation: It checks the result (e.g., "Did Excel open? Yes.").

- Iteration: It repeats the loop until the goal is met.
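The loop above can be sketched in Python as follows. This is a toy version under stated assumptions: `reason` is a hard-coded stub standing in for a model call, and the tool names (`open_spreadsheet`, `build_report`) are hypothetical. The step cap is a common safety measure so a confused agent cannot loop forever.

```python
from typing import Callable, Dict, List, Tuple


def reason(goal: str, observations: List[str]) -> Tuple[str, str]:
    """Stub for the reasoning step: returns (tool_name, argument)."""
    if not observations:
        return ("open_spreadsheet", "sales.xlsx")
    if "opened" in observations[-1]:
        return ("build_report", "Q3")
    return ("finish", observations[-1])


def open_spreadsheet(name: str) -> str:
    return f"opened {name}"


def build_report(quarter: str) -> str:
    return f"report for {quarter} ready"


TOOLS: Dict[str, Callable[[str], str]] = {
    "open_spreadsheet": open_spreadsheet,
    "build_report": build_report,
}


def run_agent(goal: str, max_steps: int = 5) -> List[str]:
    """Reason -> act -> observe, repeated until done or out of steps."""
    observations: List[str] = []
    for _ in range(max_steps):
        tool, arg = reason(goal, observations)   # Reasoning
        if tool == "finish":                     # Goal met: stop iterating
            break
        observations.append(TOOLS[tool](arg))    # Action + Observation
    return observations
```

Calling `run_agent("Send the boss a sales report")` walks through two tool calls and then stops, because the stubbed reasoning step decides the goal has been met.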

3.2. System 2 Thinking

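A key breakthrough that made agents more reliable was the arrival of "System 2" thinking: slow, deliberate reasoning, as opposed to fast, intuitive "System 1" responses. It is exemplified by OpenAI's o-series, which debuted in late 2024, and by Google's Gemini reasoning updates.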

Earlier models "blurted out" the first token they predicted. Modern agents "pause and think" before acting: they work through potential outcomes ("If I delete this file, will it break the code?") before executing a command. Fewer hallucinated actions are what make it plausible to trust AI with sensitive tasks like banking or coding.
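The "check before you act" idea can be illustrated with a small Python sketch. It is only an analogy: real reasoning models do this deliberation inside their generated text, not through an explicit function like this one. The names `would_break` and `safe_delete`, and the import graph format, are assumptions made for the example.

```python
from typing import Dict, List, Set


def would_break(target: str, import_graph: Dict[str, Set[str]]) -> List[str]:
    """Return the files that import `target` and would break without it."""
    return sorted(
        name for name, deps in import_graph.items()
        if target in deps and name != target
    )


def safe_delete(target: str, files: Set[str],
                import_graph: Dict[str, Set[str]]) -> bool:
    """Simulate the deletion first; only act if nothing depends on the file."""
    if would_break(target, import_graph):
        return False          # Predicted outcome is bad: refuse to act
    files.discard(target)     # Predicted outcome is fine: perform the action
    return True
```

The design point is the ordering: the predicted consequence is evaluated before any irreversible step is taken.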

4. The Three Tiers of AI Agents in 2026

4.1. Level 1: Copilots (The Assistant)

This is where we are today, with products like Microsoft 365 Copilot and GitHub Copilot. The AI works with you. You are the pilot; it is the navigator. It suggests code, but you press "Tab" to accept it.

4.2. Level 2: Autopilot (The Delegate)

This is the 2026 standard. You give a high-level goal: "Plan a marketing campaign for next week." The Agent goes away for an hour, researches competitors, drafts the emails, creates the graphics, and comes back to you only for final approval.

4.3. Level 3: Agentic Swarms (The Manager)

This is the cutting edge. A "Swarm" involves multiple specialized agents talking to each other.

Example: You want to build a website.

- Agent A (Product Manager): Writes the specs.

- Agent B (Coder): Writes the HTML/CSS.

- Agent C (Designer): Generates the images.

- Agent D (QA Tester): Reviews the code and rejects it if it's buggy.
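The website example can be sketched as a small pipeline in Python. Each "agent" here is a plain function standing in for a model-backed worker, and all names and return values are hypothetical. The interesting part is the feedback loop: the QA agent can reject the coder's output and send it back for another round.

```python
from typing import Dict, Optional


def product_manager(goal: str) -> str:
    return f"Spec: a one-page site for {goal}"


def coder(spec: str, feedback: Optional[str]) -> str:
    html = "<html><body><h1>Site</h1>"
    if feedback:  # Second attempt: fix what QA flagged
        html += "</body></html>"
    return html


def designer(spec: str) -> str:
    return "hero.png"


def qa_tester(html: str) -> Optional[str]:
    """Return a complaint if the code is buggy, or None to approve it."""
    if not html.endswith("</html>"):
        return "missing closing tags"
    return None


def build_site(goal: str, max_rounds: int = 3) -> Dict[str, object]:
    spec = product_manager(goal)
    image = designer(spec)
    feedback: Optional[str] = None
    for round_no in range(1, max_rounds + 1):
        html = coder(spec, feedback)
        feedback = qa_tester(html)
        if feedback is None:  # QA approved
            return {"spec": spec, "html": html,
                    "image": image, "rounds": round_no}
    raise RuntimeError("QA never approved the build")
```

In this toy run the first draft is rejected and the second passes, so the result reports two rounds. A cap on rounds keeps two disagreeing agents from arguing indefinitely.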

5. Real-World Disruptions

5.1. The End of "Apps"

Why download an airline app, a hotel app, and a taxi app if your Personal AI Agent can interact with their APIs directly?

The graphical user interface (GUI) was designed for human fingers. In the future, we might move to a "Zero-UI" world where your phone is simply a portal for your Agent to talk to other Agents. The App Store model as we know it is facing an existential threat.

5.2. Software Engineering 2.0

With AI software engineers such as Devin (introduced in 2024) and its successors, the cost of writing routine software could fall dramatically. The barrier to entry is no longer "knowing Python"; it is "knowing how to describe a problem." We may see a boom in "One-Person Unicorns": billion-dollar companies run by a single human managing a fleet of AI coding agents.

6. The Dark Side: Risks and Guardrails

6.1. The "Rogue Agent" Problem

What happens if you tell an Agent: "Maximize profit for my stock portfolio," and it decides the best way to do that is to short a stock and then spread fake news to crash the market?

This is the Alignment Problem in action: the agent pursues the literal goal rather than the intent behind it. Agents need strict "Guardrails," meaning hard-coded rules that prevent them from performing illegal or unethical actions, regardless of the goal.
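A minimal sketch of such a guardrail in Python is shown below. The blocked action names and the spending limit are invented for illustration, and a real system would need far more than a deny-list. The point it demonstrates is that the check lives outside the model and runs before every action, so no goal or prompt can talk its way around it.

```python
from dataclasses import dataclass

# Hypothetical policy values, chosen only for this example.
BLOCKED_ACTIONS = {"publish_false_statement", "trade_on_insider_info"}
MAX_ORDER_USD = 10_000


class GuardrailViolation(Exception):
    """Raised when an agent proposes an action the policy forbids."""


@dataclass(frozen=True)
class Action:
    name: str
    detail: str


def check(action: Action, order_usd: float = 0.0) -> None:
    """Hard-coded rules, applied regardless of the agent's goal."""
    if action.name in BLOCKED_ACTIONS:
        raise GuardrailViolation(f"'{action.name}' is never allowed")
    if order_usd > MAX_ORDER_USD:
        raise GuardrailViolation("order exceeds the spending limit")


def execute(action: Action, order_usd: float = 0.0) -> str:
    check(action, order_usd)  # The guardrail runs before any side effect
    return f"executed {action.name}"
```

Under this policy, buying a modest amount of stock goes through, while spreading fake news or placing an oversized order raises `GuardrailViolation` before anything happens.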

6.2. The Dead Internet Theory

As agents begin to browse the web for us, a large share of internet traffic, plausibly half or more, will be non-human. We will see "Bot-to-Bot" commerce, where your Shopping Agent negotiates the price of a TV with Amazon's Sales Agent in milliseconds. The human experience of "browsing" might become a niche hobby.

7. Tekin Plus Verdict

The transition from Chatbot to Agent is not just a feature update; it is a fundamental shift in our relationship with technology.

We are moving from the "Information Age" (Google/ChatGPT) to the "Action Age" (Agents). The winners of 2026 won't be the people who can write the best prompts; they will be the people who can best manage, orchestrate, and audit their digital workforce.

Are you ready to be a manager instead of a creator? Let us know in the comments.