1. Introduction: Sam Altman's Holiday Gift

Today is Thursday, December 11, 2025. While the gaming community is hyper-focused on the rumors surrounding The Game Awards and the cybersecurity sector is reeling from the morning's ransomware reports, OpenAI decided to hijack the news cycle with a signature "December Drop."

Without a flashy live event or a pre-announced keynote, OpenAI simply published a blog post on its website titled: "Sora 2: Seeing, Hearing, and Creating."

The significance of this release is hard to overstate. If Sora 1 (unveiled nearly two years ago) was the AI equivalent of the Lumière brothers' first motion picture camera, Sora 2 is The Jazz Singer: the moment the medium learned to speak. We have officially exited the era of "Silent AI Video." Now, when you prompt the model to visualize a thunderstorm, you don't just see the lightning; you hear the crack of thunder and the relentless patter of rain on the pavement.

2. Under the Hood: The "Sonic-Sync" Engine

2.1. Simultaneous Generation

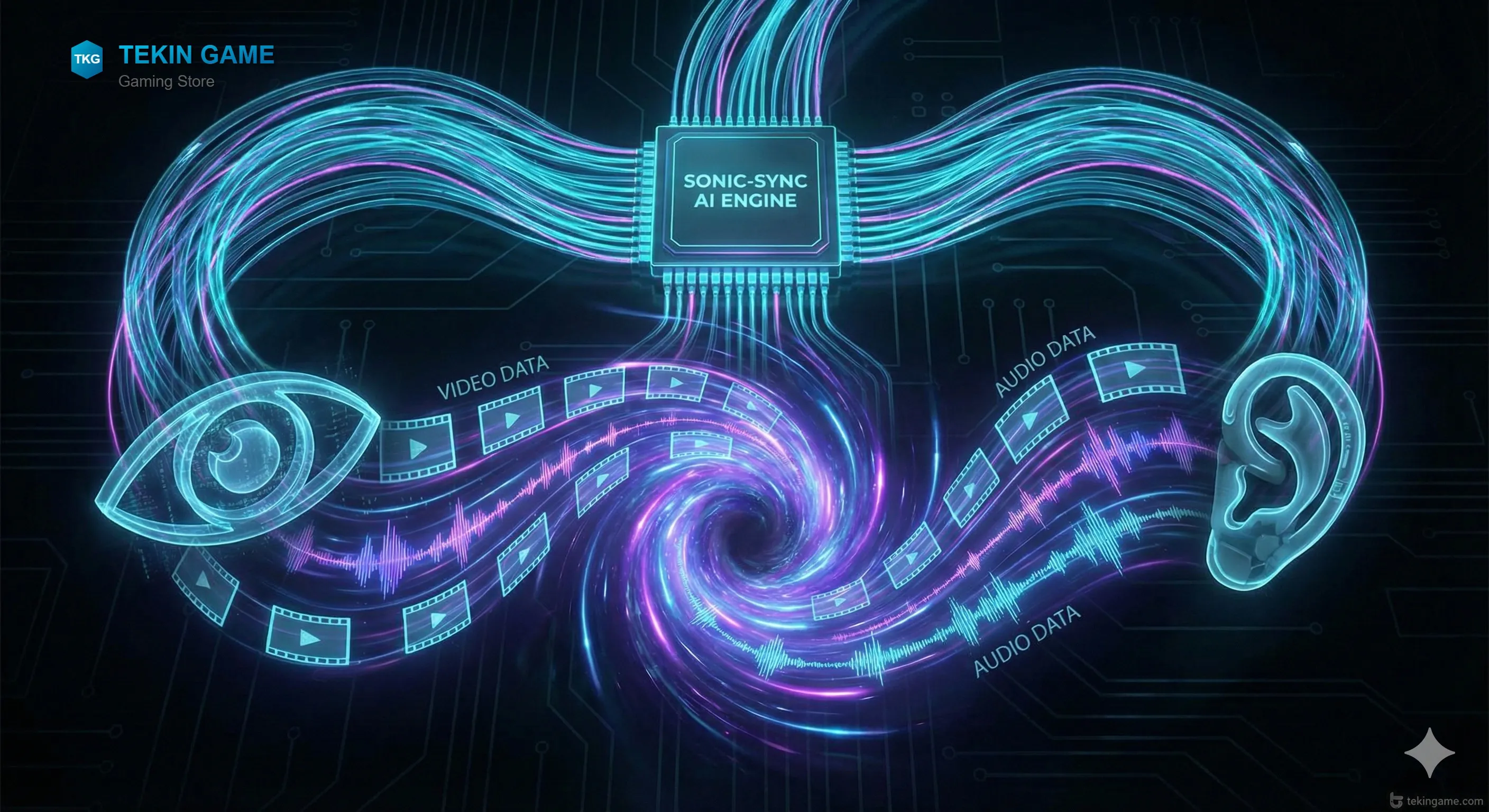

The crown jewel of Sora 2 is a new neural architecture OpenAI calls Sonic-Sync.

In previous workflows (using tools like Runway or Pika), creators had to generate video first, then use a separate tool (like ElevenLabs or Suno) to generate audio, and finally stitch them together in Premiere Pro. The results were often disjointed.

Sora 2 processes audio and video simultaneously in the same latent space. It understands the physics of sound:

- Material Awareness: The model knows that a leather boot walking on gravel sounds different from a sneaker on concrete.

- Spatial Audio: If a car drives from the left side of the frame to the right, the audio pans automatically. If the camera moves away from a sound source, the volume and reverb adjust to match the simulated distance.
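Sonic-Sync's internals are not public, but the two spatial effects described above have well-known conventional counterparts in audio engineering. The sketch below is our own illustration, not OpenAI's method: it uses a constant-power pan law to place a mono sound in the stereo field according to its horizontal position in the frame, plus a simple inverse-distance law for level. The function names are hypothetical, and reverb is left out entirely.

```python
import math


def pan_gains(pan: float) -> tuple[float, float]:
    """Constant-power stereo pan.

    pan runs from -1.0 (hard left) to +1.0 (hard right).
    Returns (left_gain, right_gain) with left**2 + right**2 == 1.
    """
    pan = max(-1.0, min(1.0, pan))
    angle = (pan + 1.0) * math.pi / 4.0  # maps [-1, 1] onto [0, pi/2]
    return math.cos(angle), math.sin(angle)


def distance_gain(distance_m: float, ref_distance_m: float = 1.0) -> float:
    """Inverse-distance law: amplitude halves (about -6 dB) each time distance doubles."""
    return ref_distance_m / max(distance_m, ref_distance_m)


def spatialize(sample: float, x_position: float, distance_m: float) -> tuple[float, float]:
    """Place a mono sample using its on-screen position and distance from the camera.

    x_position: 0.0 is the left edge of the frame, 1.0 the right edge (hypothetical convention).
    """
    left, right = pan_gains(2.0 * x_position - 1.0)
    g = distance_gain(distance_m)
    return sample * left * g, sample * right * g


# A car crossing the frame from left to right, 10 m from the camera.
for x in (0.0, 0.5, 1.0):
    l, r = spatialize(1.0, x, 10.0)
    print(f"x={x:.1f}  L={l:.3f}  R={r:.3f}")
```

The constant-power law is the usual choice over a linear crossfade between channels because it keeps combined loudness steady as a sound passes through the center of the frame.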

2.2. The Death of the Uncanny Valley

One of the most jarring aspects of AI video has always been mouths. Characters would speak, but their lips would move randomly, breaking the immersion.

Sora 2 introduces Phoneme-Pixel Mapping. When you type dialogue (or upload a voice track), the model generates the video frame by frame so that the character's jaw, tongue, and lips move in perfect synchronization with the phonemes. In one demo, a generated character recites a fast-paced rap verse, and the lip-sync holds up even in extreme close-ups.
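OpenAI has not described how Phoneme-Pixel Mapping works internally. For comparison, traditional animation pipelines approximate lip-sync by mapping each timed phoneme to a viseme (a mouth shape) and holding that shape for the video frames the phoneme spans. The sketch below assumes phoneme timings are already available (for example from a forced aligner) and uses a deliberately small, hypothetical viseme table; `TimedPhoneme` and `visemes_per_frame` are illustrative names, not part of any Sora API.

```python
from dataclasses import dataclass

# Simplified, hypothetical phoneme-to-viseme table using ARPAbet symbols.
# Real lip-sync systems typically use a richer set of mouth shapes.
PHONEME_TO_VISEME = {
    "P": "closed", "B": "closed", "M": "closed",
    "F": "lip_teeth", "V": "lip_teeth",
    "AA": "open", "AE": "open", "AH": "open",
    "IY": "wide", "EH": "wide",
    "UW": "round", "OW": "round", "W": "round",
}


@dataclass
class TimedPhoneme:
    phoneme: str
    start_s: float
    end_s: float


def visemes_per_frame(phonemes: list[TimedPhoneme], fps: int = 24) -> list[str]:
    """Return one mouth-shape label per video frame; unlisted phonemes map to 'neutral'."""
    if not phonemes:
        return []
    total_frames = int(round(phonemes[-1].end_s * fps))
    frames = ["rest"] * total_frames  # frames not covered by any phoneme stay at rest
    for p in phonemes:
        shape = PHONEME_TO_VISEME.get(p.phoneme, "neutral")
        first = int(round(p.start_s * fps))
        last = int(round(p.end_s * fps))
        for i in range(first, min(last, total_frames)):
            frames[i] = shape
    return frames


# "ma" then "pa": each phoneme lasts 0.125 s, i.e. 3 frames at 24 fps.
seq = [
    TimedPhoneme("M", 0.0, 0.125), TimedPhoneme("AA", 0.125, 0.25),
    TimedPhoneme("P", 0.25, 0.375), TimedPhoneme("AA", 0.375, 0.5),
]
print(visemes_per_frame(seq))
```

A lookup like this is exactly what tends to look robotic in fast speech, since it ignores how neighboring sounds shape each other (coarticulation); that is the gap a jointly generated model would need to close.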

2.3. Adaptive Musical Scores

Beyond sound effects (Foley), Sora 2 acts as a composer. But it doesn't just slap a generic Lo-Fi track on the video.

The music is context-aware. If the video prompt describes a "tense chase scene that ends in a romantic reunion," the generated score will start with aggressive, high-tempo percussion and seamlessly transition into swelling strings as the visual mood changes. The AI understands cinematic pacing, crescendo, and emotional beats.
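How Sora 2 shapes its scores is not documented. In conventional editing, the simplest way to move from one cue to the next is an equal-power crossfade, sketched below purely as a point of comparison. The function name is hypothetical and it operates on plain lists of audio samples.

```python
import math


def equal_power_crossfade(outgoing: list[float], incoming: list[float],
                          fade_len: int) -> list[float]:
    """Join two cues, overlapping the last `fade_len` samples of `outgoing`
    with the first `fade_len` samples of `incoming`.

    The cos/sin gains satisfy g_out**2 + g_in**2 == 1, which keeps perceived
    loudness roughly steady for uncorrelated material such as two different cues.
    """
    if not 0 <= fade_len <= min(len(outgoing), len(incoming)):
        raise ValueError("fade length must fit inside both cues")
    start = len(outgoing) - fade_len
    overlap = []
    for i in range(fade_len):
        t = (i + 0.5) / fade_len           # progress through the fade, in (0, 1)
        g_out = math.cos(t * math.pi / 2)  # chase cue fades down
        g_in = math.sin(t * math.pi / 2)   # strings cue fades up
        overlap.append(outgoing[start + i] * g_out + incoming[i] * g_in)
    return outgoing[:start] + overlap + incoming[fade_len:]


# Toy signals: a constant "chase" cue and a silent stand-in for the strings,
# so the fade-out curve is easy to see in the printed list.
chase = [1.0] * 8
strings = [0.0] * 8
print(equal_power_crossfade(chase, strings, fade_len=4))
```

A fixed crossfade only handles the "seamless" part; timing it to the visual mood change is the context-aware piece the article attributes to Sora 2.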

3. The Demos: A Feast for the Senses

3.1. Scenario: "The Rainy Jazz Cafe"

To showcase the fidelity of the model, OpenAI released a 60-second clip titled "Midnight in Manhattan."

The Visuals: The camera pans through a smoky, 1950s-style jazz club.

The Audio: This is where jaws dropped. You hear the specific clink of crystal glasses in the background, the low murmur of indistinct conversation, and the squeak of a wooden chair. Then, a saxophone player begins a solo. The audio matches his finger movements perfectly. The sound is rich, layered, and distinct.

3.2. Scenario: "Sci-Fi Crash"

A second demo shows a futuristic racer crashing into a neon wall.

The audio demonstrates the Doppler effect: the engine note sounds higher-pitched as the vehicle approaches the camera. The impact isn't a stock sound effect; it sounds like a complex crunch of carbon fiber and shattering glass. It creates a visceral reaction that silent video simply cannot achieve.
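The Doppler shift itself is textbook physics. For a source moving directly toward or away from a stationary listener, the heard frequency is f_obs = f_src * c / (c - v) on approach and f_src * c / (c + v) as it recedes, where c is the speed of sound. The numbers below (a 200 Hz engine note at 70 m/s, roughly 250 km/h) are our own assumptions, not figures from OpenAI's demo.

```python
SPEED_OF_SOUND_M_S = 343.0  # dry air at about 20 degrees C


def doppler_observed_hz(source_hz: float, source_speed_m_s: float,
                        approaching: bool) -> float:
    """Frequency heard by a stationary listener from a source moving along the line of sight."""
    if source_speed_m_s >= SPEED_OF_SOUND_M_S:
        raise ValueError("formula assumes a subsonic source")
    # Positive v shortens the wavelength (approach); negative v stretches it (recede).
    v = source_speed_m_s if approaching else -source_speed_m_s
    return source_hz * SPEED_OF_SOUND_M_S / (SPEED_OF_SOUND_M_S - v)


# Hypothetical racer: 200 Hz engine note at 70 m/s.
print(round(doppler_observed_hz(200.0, 70.0, approaching=True), 1))
print(round(doppler_observed_hz(200.0, 70.0, approaching=False), 1))
```

The drop from the approaching pitch to the receding pitch as the racer passes is the familiar "neeee-owww" that makes a fly-by sound convincing.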

4. Disruption in the Creative Industries

4.1. Hollywood on Alert

Until yesterday, independent filmmakers using AI still needed human help for sound design. A silent AI video feels "fake." A video with professional sound feels "real."

Sora 2 threatens to automate the Foley Artist and Sound Engineer professions. If a prompt can generate "footsteps on snow" with studio-quality clarity, the need for recording libraries and manual syncing diminishes rapidly. Hollywood unions, which fought hard for protections last year, will likely convene emergency meetings regarding this new capability.

4.2. The "One-Person Studio"

For YouTubers, influencers, and storytellers, this is the Holy Grail. A single creator can now write a script and have Sora 2 generate the visuals, the voice acting, the sound effects, and the musical score in one pass.

We expect to see an explosion of high-production-value content from solo creators in early 2026. The barrier to entry for filmmaking has just been obliterated.

5. Safety, Ethics, and the Deepfake Dilemma

5.1. The Ultimate Deepfake Machine?

The ability to generate perfect lip-syncing raises immediate red flags. If bad actors can generate a video of a world leader declaring war, with their actual voice and perfect lip movements, the potential for disinformation is catastrophic.

OpenAI has preemptively addressed this:

- C2PA Provenance Metadata: Every frame of video and every second of audio carries invisible, cryptographically signed C2PA metadata identifying it as AI-generated.

- Safety Rails: The model refuses to generate the likeness or voice of public figures, politicians, or celebrities. (Though, as cybersecurity experts warned this morning, hackers will inevitably try to jailbreak these restrictions.)
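The core idea behind provenance metadata is hash-and-sign: the manifest records a hash of the asset, and a signature over the manifest proves who issued it. The toy below illustrates only that idea. It swaps in a standard-library HMAC with a hypothetical shared key, whereas real C2PA manifests use certificate-based (COSE) signatures and a defined embedding format, so this is not C2PA-compatible. All names and the key are hypothetical.

```python
import hashlib
import hmac
import json

# Toy stand-in for a provenance signature. Real C2PA signing uses X.509
# certificates and COSE signatures, not a shared HMAC key like this one.
SIGNING_KEY = b"hypothetical-demo-key"


def make_manifest(asset: bytes, generator: str) -> dict:
    claim = {
        "generator": generator,
        "ai_generated": True,
        "asset_sha256": hashlib.sha256(asset).hexdigest(),
    }
    payload = json.dumps(claim, sort_keys=True).encode()
    signature = hmac.new(SIGNING_KEY, payload, hashlib.sha256).hexdigest()
    return {"claim": claim, "signature": signature}


def verify(asset: bytes, manifest: dict) -> bool:
    payload = json.dumps(manifest["claim"], sort_keys=True).encode()
    expected = hmac.new(SIGNING_KEY, payload, hashlib.sha256).hexdigest()
    if not hmac.compare_digest(expected, manifest["signature"]):
        return False  # the claim itself was altered
    # The claim is authentic; now check that it describes these exact bytes.
    return manifest["claim"]["asset_sha256"] == hashlib.sha256(asset).hexdigest()


video = b"placeholder video bytes"
m = make_manifest(video, "hypothetical-video-model")
print(verify(video, m))            # True
print(verify(video + b"edit", m))  # False: the asset no longer matches its hash
```

Note that this scheme proves provenance only while the manifest stays attached: stripping the metadata removes the evidence rather than forging it.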

6. Availability & Pricing

6.1. Who Can Use It?

OpenAI is rolling out Sora 2 access starting today, but it is exclusive.

Currently, only ChatGPT Pro users ($200/month tier) and select enterprise partners in the film industry have access to the Alpha build.

Plus users will likely have to wait until Q1 2026. The reason? Compute costs. Rendering high-definition video and high-fidelity audio simultaneously requires an immense amount of GPU inference power, making it too expensive to release to the masses immediately.

7. Tekin Plus Verdict

December 11, 2025, will be remembered as the day AI found its voice.

Sora 2 is not just an iterative update; it completes the sensory loop. We now have a machine that can dream in sight and sound. While the creative possibilities are endless, the line between reality and simulation has never been blurrier.

Are we ready for a world where we cannot trust our eyes or our ears?

Let us know your thoughts in the comments below.